The Rise of Groq: Slow, then Fast

Distractions, Business Model Pivot, Return On Luck, and Looking Ahead

Harvard Business Case Studies were my favorite part of business school.

Groq is a case study unfolding before our very eyes. Let’s take a look together.

Introduction

Groq was established in 2016 by two former Google X engineers: Jonathan Ross, who helped invent Google’s Tensor Processing Unit (TPU), and Douglas Wightman, who worked on Project Loon. Groq set out to create a TPU-like chip for sale on the open market, launching the Groq Tensor Streaming Processor (TSP) in 2019.

Challenges

We begin our study by examining Groq's sluggish start.

What Problem Is Groq Solving?

Did Groq Know?

From the beginning, Groq was very clear that they were creating a commercially available chip with capabilities similar to Google TPU. Yet Groq struggled to articulate the customer problem its chip solves.

We see this struggle in Groq’s Oct 2019 whitepaper, which touts all sorts of technical features and benefits: Determinism! Simple architecture! More performance per transistor!

Businesses exist to solve a problem for a customer. Did Groq understand its customers and the problems they had?

Skipping The Basics

Most chips have a wide range of potential use cases, making it challenging for semiconductor startups to focus on a specific customer problem.

This potential broad applicability deceptively makes the need for the chip seem obvious. Everyone can benefit from this chip, right?

Furthermore, when the startup founder is an engineer there can be a tendency to focus on the solution and skip the customer's needs. This problem worsens when the founder has a powerful personal brand. This guy created Google’s TPU; he knows what he is talking about!

Investors aren’t immune from skipping the basics either. A Google TPU inventor is raising money to create a TPU competitor? Sign me up!

Marketing Miss

Casting A Wide Net

This tech-centric mindset and failure to understand the customer’s problem seeped into the company’s early marketing efforts.

From the first two pages of the aforementioned whitepaper, here is the grab bag of problem statements and observations Groq mentions for enterprises developing high-performance machine learning applications:

Problems:

deployment of AI models to production is challenging

achieving high-performance processing is challenging

developer experience is poor

Observations:

exponential increases in model complexity and computing throughput are needed for neural nets to achieve human-like inference performance

investments in traditional server clusters are reaching a computational cost wall

chips are increasingly complex with unnecessary bloat

inference has reached a bottleneck

Groq was casting quite the wide net. 😵‍💫

Surely these problems resonated with many enterprises, but Groq’s marketing failed to clarify what problem they solve and for whom.

This lack of clarity happens when a company has a solution looking for a problem.

Difficult Business Model

Basics of Groq’s Business Model

Groq was pursuing the traditional semiconductor hardware business model, in which a fabless company sells its chips to original equipment manufacturers (OEMs) and original design manufacturers (ODMs). This business model is challenging.

For newcomers: enterprises acquire AI hardware either by purchasing pre-configured servers from OEMs or by renting hardware from cloud service providers (CSPs). CSPs sometimes skip the OEM and buy from ODMs directly, so Groq’s ideal large customers are OEMs and ODMs.

To simplify the semiconductor stack, we can think of Groq competing in a “components” layer, home to companies creating the logic or memory chips that underpin modern devices. These chips are then integrated into systems and sold ultimately as servers or consumer electronic devices depending on the use case. App developers build apps on top of these systems.

Note that there are layers of intermediaries between Groq and end consumers.

Tough Business Model For A Startup

Selling hardware directly to OEMs is challenging for semiconductor startups. It's a B2B model with slow sales cycles, and the initial investment required is staggering. Unlike software startups, whose startup costs can be relatively minimal, hardware startups must pour tens of millions of dollars into design and manufacturing before securing a single customer. This financial burden necessitates venture capital backing, a model dating back to the early days of Intel and Arthur Rock.

There’s also a Catch-22: OEMs like Dell need proof that enterprise customers want servers built on the startup’s chips, but how can a startup provide that proof without existing enterprise customers? PowerPoint presentations and simulations only go so far.

The most convincing evidence is a real chip in the hands of a customer, demonstrating real-world value. To break this cycle, startups focus on securing smaller, strategic wins that showcase their technology's potential to address customer needs.

However, securing these smaller wins proves to be a time-intensive endeavor. B2B customers understandably insist on pilot programs before committing to substantial purchases. Startups must identify a champion within the customer's organization, provide simulations or evaluation boards, assist in development, and ensure the customer’s pilot solution actually demonstrates value to the organization.

Whew. This entire sales process may take 12 months or more. Again, that’s after all the time and money spent designing and manufacturing the chip.

Even if they demonstrate a successful customer pilot, startups like Groq must still overcome the significant hurdle of convincing OEMs to take a calculated risk on their hardware. The OEMs must believe the company will have longevity, a tangible roadmap, and a rapid development cadence. Ultimately, startups must persuade OEMs that the potential rewards outweigh the inherent risks of adopting an emerging technology.

Moreover, the "components" semiconductor business model creates a dependency on a relatively small number of OEMs/ODMs. This concentrated customer base holds the keys to success, as their purchasing decisions and timelines directly impact the startup’s revenue and growth potential.

Finally, choosing the traditional business model carries significant financial implications. The first version of the chip (v1) requires an enormous upfront investment and a lengthy time to market. Even if v1 succeeds, its initial production run is typically not large enough to fully fund the next iteration of the chip (v2), necessitating another round of fundraising while the sales process for v1 is still underway. It’s not easy to pitch investors on a second round to fund v2 while still finding the first customers for v1.

It’s no wonder there are substantially fewer semiconductor VCs than software VCs.

Determinism Distraction

Determinism Primer

In its quest for customer wins to showcase for OEMs, Groq stumbled by chasing the wrong customers. Groq pursued a niche set of customers who needed a particular characteristic of its architecture called determinism.

This led to an overemphasis on the deterministic nature of Groq’s chips.

A quick primer on determinism from Groq’s determinism whitepaper:

Unlike traditional CPUs (Central Processing Unit), GPUs (Graphics Processing Unit), or FPGAs (Field Programmable Gate Array), a GroqChip has no control flows, no hardware interlocks, no reactive components like arbiters or replay mechanisms that would perturb or permute the order of events and thereby vary processing performance on the same workload. Rather, Groq takes a software-defined approach where flow control is orchestrated by the compiler. When a tensor is read out of memory and destined to a functional unit to be operated on, the compiler knows exactly how long it is going to take. This approach allows for a simpler and more efficient hardware architecture that executes a predetermined script.

Determinism has its benefits.

In real-time systems with stringent latency requirements for safety reasons (e.g., autonomous cars), determinism is essential because it gives developers the predictability they need to ensure timely responses. Knowing precisely how long the chip will take to process inputs allows for accurate calculations of stopping distances or evasive maneuvers.

Non-safety-critical solutions can benefit, too. To illustrate, a system might typically process a workload in under 500ms but occasionally take 5+ seconds. These occasional slow responses are known as “tail latency” and can erode customer experience and trust.
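To make tail latency concrete, here is a minimal Python sketch that compares the mean, median, and 99th-percentile latency of a synthetic workload. The numbers are invented for illustration (980 requests at 400ms, 20 requests at 5 seconds), and the `percentile` helper is a simple nearest-rank implementation written for this example, not anything taken from Groq.

```python
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct (1-100):
    the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100, avoiding floating-point rounding.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


# Synthetic, made-up workload: most requests are fast, 2% are very slow.
latencies_ms = [400] * 980 + [5000] * 20

mean_ms = statistics.mean(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms}ms p50={p50_ms}ms p99={p99_ms}ms")
```

In this toy data the mean stays just under 500ms while the 99th percentile sits at 5 seconds. That is why averages hide the problem, and why predictable execution time is attractive.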

How Many Enterprise Customers Need Determinism?

Groq’s early customers cared about deterministic latency. For example, Argonne National Laboratory is a Groq customer with a particular use case where predictable inference times are important – real-time AI prediction as an input to a fusion reactor control system! (Very cool science, see here for more).

“Even if you have a highly accurate trained model that can predict any possible plasma instability before it occurs, it is only useful if it can give reactor operators and automated response systems time to act. There cannot be any possibility that the AI machine will take a day or an hour or even a minute to generate a prediction,” Felker said.

While Groq's deterministic approach is valuable, especially for the enterprises that need it, it's crucial to remember that Groq’s customers are OEMs like Dell.

OEMs want to know, “How many enterprise customers are buying determinism?”

Dell doesn’t sell determinism to enterprises; they sell performance, power efficiency, TCO, ease of management, trust, and security.

At a minimum, Groq should tailor its messaging to highlight how it directly addresses these concerns with determinism under the hood. Better yet, Groq should solve a traditional enterprise customer’s latency or TCO issue and showcase that to OEMs instead of the Argonne example.

Struggles Articulated

In this 2022 episode of Moore’s Lobby, Ross gives first-hand evidence of skipping the customer problem and the resulting struggles selling determinism:

When we started meeting venture capitalists all they wanted to know about was this TPU thing, because it had actually just become public recently. It hadn't been around that long and they just kept asking questions about it and one of the questions is “Well, what do you think the problem is that should be solved?” and I'm like “They're hard to program”.

I literally designed the first TPU, literally implemented half of it by area by lines of code whatever you want to count. I was a software engineer and I couldn't program the thing. They had dedicated people programming, so it was difficult right? And GPUs, by the way, are worse — they have all these tricks to make it look easy to program, but under the hood the people that we were hiring were like “yeah GPUs are worse”. So as we were talking to VCs this just became a theme.

We see:

VCs interested in the TPU founder building a TPU-like chip

Ross flexing his personal brand

Ross and investors focusing on technical problems (TPU is hard to program) rather than customer problems

Continuing on, Ross explains Groq’s subsequent challenge in selling determinism:

I think this is true for every single startup. You have a great solution in mind – you actually know a customer pain point and you have a solution and you walk in and you're like “determinism!”. And they're like “Yeah…. I gotta go…”. And what you're not doing is you're not connecting it to their need. It's about pain points and a better way to do it is to be like “OK, I understand your problem. Your problem is you think you don't have enough labeled data because you're not getting enough accuracy, right? But if you had a larger model, that could make up for that right? OK, and the reason that you don't have that is because it wouldn’t run in production, right? So if we were able to run a larger model, in less time, and we don't have to margin it because it's deterministic – that would actually help you build a bigger model and be more accurate without having to get more data”.

We see Groq shoehorning the characteristics that customers actually care about—latency and performance—into their determinism worldview.

When all you have is a hammer, it’s tempting to treat everything as a nail.

This, again, is the fallout of a solution looking for a problem.

The Right First Step Wouldn’t Have Been Enough

It’s clear that Groq started with a solution, which led them down the determinism rabbit trail. What alternative path could Groq have pursued from Day 1?

Let the TPU guide you

Let’s rewind to “Groq is making a TPU for the open market” and consider the first principles of TPUs for a minute: What are TPUs, and why do they exist?

The TPU is an AI accelerator designed to significantly outperform GPUs in speed and energy efficiency when running AI workloads.

Borrowing from TPU's first principles, Groq could have homed in on customers who needed faster and less power-intensive AI processing.

In 2016, were potential customers struggling to deliver seamless, AI-powered user experiences due to excessive latency and sluggish UI response times? Did the limitations of existing hardware hamper their innovative ideas? Were companies burdened by skyrocketing capital and operational expenses caused by AI workloads?

Hyperscalers quickly come to mind as obvious customers with significant expenses. But, like Google, wouldn’t hyperscalers just build their own chips? Why choose Groq?

(They ultimately did: see Meta MTIA, Microsoft Maia, and AWS Inferentia.)

Who else needs AI workloads to be an order of magnitude faster? High-frequency trading firms come to mind, but that’s not a simple nut to crack either (interesting read here), and it’s unclear if the size of that market is enough to excite OEMs.

What about a large consumer app market?

When Groq emerged in 2016, machine learning was already prevalent, particularly in areas like recommendation systems. Many of these applications relied on batch processing or offline inference, meaning results could be generated over time (say nightly), like Spotify's Discover Weekly recommendations.

Low latency would benefit on-demand machine learning inference, which was already a significant part of daily life through Siri and Alexa. However, the problem with these assistants was their ability, not their speed. While Groq's chips could have offered faster inference speeds and enabled multiple inferences per interaction, this wouldn’t have addressed Siri and Alexa’s core issue of model quality. For a truly improved user experience, advancements in model capabilities were needed, and we didn’t have a clear line of sight there.

Therefore, even with the right value prop out of the gate, Groq's chip did not have obvious large consumer use cases.

Until LLMs came along.

You saw that coming, didn’t you?

Good Fortune Enables Business Model Pivot

Turning Point

The world changed in late 2022 with the launch of ChatGPT. While the transformer architecture behind LLMs had existed since 2017, OpenAI’s simple user interface brought the technology to the masses. But there was one problem: LLM inference on large Nvidia GPU clusters was way too slow.

Suddenly, Groq's chips had a killer use case: speed up LLM inference and enable better user experiences.

Groq pounced on this opportunity and rebranded its chip as an LPU (Language Processing Unit) to signal to the market that it could run large language models.

Another Catch-22 emerged: Groq needed a large language model to demonstrate the value of their chips, but these models were proprietary and prohibitively expensive to train. Even with a promising consumer use case identified, Groq could not showcase its technology without investing significant time and resources into developing its own LLM.

Mark Zuckerberg indirectly swooped to the rescue only a few months later, in late February 2023, when Meta open-sourced its Llama LLM.

Both of Groq’s Catch-22s were solved.

Groq had a useful model to showcase its significant LLM latency superiority

Significant LLM latency superiority is a compelling use case for OEMs

This marks the beginning of Groq’s pivot, and it’s not an overstatement to say that Meta saved Groq.

Ross would agree.

Return on Luck

Hats off to Groq for seizing the moment. Were they lucky? Sure. But Groq took action and earned a significant return on their luck. From Jim Collins:

Return on Luck is a concept developed in the book Great by Choice. Our research showed that the great companies were not generally luckier than the comparisons—they did not get more good luck, less bad luck, bigger spikes of luck, or better timing of luck. Instead, they got a higher return on luck, making more of their luck than others. The critical question is not, Will you get luck? but What will you do with the luck that you get?

GroqChat

In Sep 2023, Groq publicly demonstrated GroqChat, a ChatGPT-like interface running Llama2 70B on Groq’s LPUs. Groq’s ChatGPT clone was incredibly snappy, responding with significantly lower time-to-first-token latency and significantly higher tokens-per-second throughput than ChatGPT. Groq made this publicly available in December, and consumer interest skyrocketed.

Groq's rapid rise to prominence was propelled by its inaugural investor, Chamath Palihapitiya, who used his highly visible online presence to share Groq's LLM latency superiority with hundreds of thousands of followers.

By February 2024, the world had noticed Groq.

Ben Thompson’s Feb 20th edition of Stratechery captured the moment perfectly:

[Groq’s] speed-up is so dramatic as to be a step-change in the experience of interacting with an LLM; it also makes it possible to do something like actually communicate with an LLM in real-time, even halfway across the world, live on TV

One of the arguments I have made as to why OpenAI CEO Sam Altman may be exploring hardware is that the closer an AI comes to being human, the more grating and ultimately gating are the little inconveniences that get in the way of actually interacting with said AI. It is one thing to have to walk to your desk to use a PC, or even reach into your pocket for a smartphone: you are, at all times, clearly interacting with a device. Having to open an app or wait for text in the context of a human-like AI is far more painful: it breaks the illusion in a much more profound, and ultimately disappointing, way. Groq suggests a path to keeping the illusion intact.

Hints of a New Value Prop

GroqChat showed signs of a new value proposition:

Customer Pain: LLM-powered AI assistants are frustratingly slow on modern AI hardware

Underlying Problem: The most performant AI hardware, Nvidia H100 GPUs, are general purpose and therefore have inefficiencies that slow down LLM inference

Solution: An AI accelerator architected from the ground up to improve the LLM user experience with ultra-low latency

This value proposition suggests a new business model: a GroqChat subscription.

However, despite its speed on LPUs, Llama2's quality fell short of competitive proprietary models, making it unlikely that paying ChatGPT subscribers would consider switching to a paid GroqChat service.

Finding the Right Business Model

New Customers

If GroqChat wasn’t where product-market fit would be found, what next? Who could benefit from ultra-low latency inference even with a moderately useful LLM? Developers!

Build New Experiences, Bank On Model Improvements

Previously, when GroqChat competed directly with ChatGPT using the same interface and AI assistant value proposition, the quality of the model was a key differentiator. However, when app developers create previously impossible experiences, the model's lesser abilities may be fine for the specific use case.

Developers can bank on the continuous improvement of open-source model quality. Build the killer experience now and let the model improve over time.

This is analogous to riding Moore’s Law improvements. From a recent Ben Thompson and Kevin Scott chat:

Is there a bit where tech has forgotten what it was like to build on top of Moore’s Law? You go back to the 80s or 90s and it took a while to get the — you needed to build an inefficient application because you wanted to optimize for the front end of the user experience, and you just trusted that Intel would solve all your problems.

The difference now is that we trust that both the hardware and the model will improve.

Groq API: Inference-As-A-Service

Groq’s LPUs shift LLM latency from painful to usable, enabling developers to build new user experiences and applications. If Groq’s target customers are app developers, let’s update the value proposition one more time:

Customer Pain: App developers struggle to leverage LLM inference because AI compute is too slow.

Underlying Problem: The most performant AI hardware, Nvidia H100 GPUs, are general purpose and therefore have inefficiencies that slow down LLM inference

Solution: An inference API powered by Groq LPUs with extremely low latency and high throughput

Groq's value proposition would be best realized through an API. Groq did just that, launching their Groq Cloud API in January 2024.

The product can be considered "Inference as a Service" — specifically, ultra-fast inference. Groq hosts open-source models on its hardware and provides access via an API. Developers can purchase usage-based access.

Groq enters a crowded market of inference-as-a-service providers, including OpenAI API, Amazon Bedrock, Azure OpenAI Service, Google Gemini API, and others.

However, Groq offers a unique value proposition: hyperspeed.

(That’s more fun to say than ultra-low latency.)

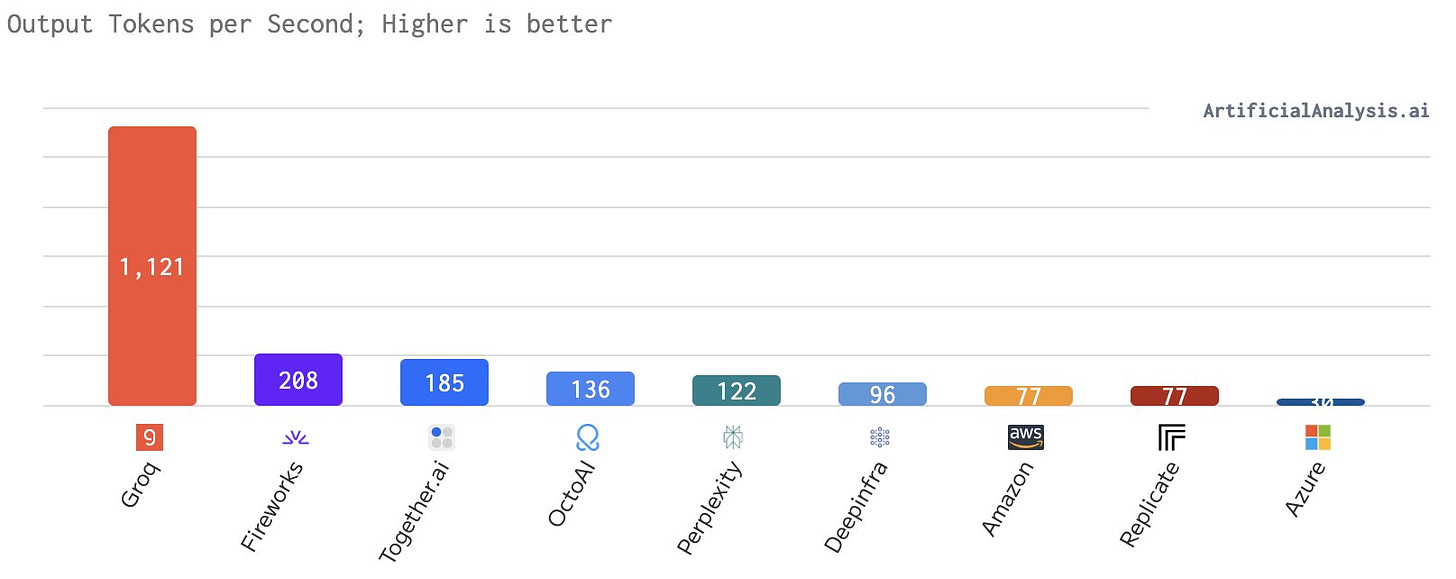

This speed manifests to the user as high output tokens per second. (For a discussion of how to think about LLM performance, see this.)

This speed advantage comes directly from Groq’s LPUs, which outpace competitors' GPU- and TPU-based solutions.
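For readers who want to measure these numbers themselves, here is a small Python sketch of the two metrics discussed in this post: time-to-first-token and output tokens per second. It assumes you have recorded a timestamp for each streamed token on the client side. The `StreamTiming` type and the example trace are hypothetical and are not measurements of Groq or any other provider.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StreamTiming:
    """Timestamps (in seconds) recorded by a client while streaming one response."""
    request_sent_s: float
    token_arrival_s: List[float]  # arrival time of each output token


def time_to_first_token(t: StreamTiming) -> float:
    """Delay between sending the request and receiving the first token."""
    return t.token_arrival_s[0] - t.request_sent_s


def output_tokens_per_second(t: StreamTiming) -> float:
    """Decode throughput: tokens after the first, divided by the time they took."""
    decode_time = t.token_arrival_s[-1] - t.token_arrival_s[0]
    return (len(t.token_arrival_s) - 1) / decode_time


# Hypothetical trace: first token after 0.2s, then one token every 10ms.
trace = StreamTiming(
    request_sent_s=0.0,
    token_arrival_s=[0.2 + 0.01 * i for i in range(101)],
)

ttft_s = time_to_first_token(trace)
tps = output_tokens_per_second(trace)
print(f"TTFT={ttft_s:.2f}s, output speed={tps:.0f} tokens/s")
```

Separating the two metrics matters because a service can start responding quickly yet stream slowly, or the reverse. Users feel both.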

Today's app developers face a trade-off: Groq delivers unmatched speed and user experience, while competitors excel in model quality.

Developers sure seem interested:

Benefits of the New Value Prop & Business Model

Advantages for Developers

Inference as a service is a natural fit for app developers. By abstracting the machine learning backend and providing a simple API interface, developers can incorporate AI capabilities into their applications without touching the complexities of model training and deployment.

The consumption-based business model also lowers upfront costs: developers pay for what they use instead of purchasing or renting dedicated GPUs.
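A rough way to reason about that trade-off is a break-even calculation between paying per token and renting a dedicated GPU around the clock. The sketch below is only illustrative. The prices ($1 per million tokens, $2 per GPU-hour) are made-up placeholders, not quotes from Groq or any cloud provider, and the model ignores the throughput ceiling of a single GPU.

```python
HOURS_PER_MONTH = 730  # average hours in a month


def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Usage-based pricing: pay only for tokens consumed."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def monthly_dedicated_cost(gpu_count: int, usd_per_gpu_hour: float) -> float:
    """Dedicated rental: pay for every hour, whether the GPUs are busy or idle."""
    return gpu_count * usd_per_gpu_hour * HOURS_PER_MONTH


def breakeven_tokens_per_month(gpu_count: int, usd_per_gpu_hour: float,
                               usd_per_million_tokens: float) -> float:
    """Monthly token volume at which both options cost the same."""
    dedicated = monthly_dedicated_cost(gpu_count, usd_per_gpu_hour)
    return dedicated / usd_per_million_tokens * 1_000_000


# Placeholder prices, chosen only to make the arithmetic easy to follow.
dedicated_usd = monthly_dedicated_cost(gpu_count=1, usd_per_gpu_hour=2.0)
breakeven = breakeven_tokens_per_month(1, 2.0, usd_per_million_tokens=1.0)
print(f"dedicated: ${dedicated_usd:,.0f}/month, break-even: {breakeven:,.0f} tokens/month")
```

Below the break-even volume the API is cheaper. Above it, dedicated hardware starts to win, provided the team can actually keep it busy.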

A Useful Abstraction for Groq

There are arguments floating around about the struggles of building on top of Groq’s LPUs. From Irrational Analysis:

VLIW architectures have the following attributes:

Excellent area efficiency.

Extremely low latency.

Enables low-power (< 1 watt) designs.

Frees up significant power budget for other blocks. (matrix unit)

Historical success in DSP markets.

A nightmarish compiler problem that effectively forces expert end-users to hand-write assembly code in a soul-crushing manner.

My formal education is on digital signal processing. This market needs low-latency and low power and tends to have intrinsically parallel workloads. Because of the severe constraints, the end-users of DSP-world are willing to hand-code assembly.

VLIW compilers are notoriously useless. So bad that most folks view the compiler output as a starting point for the required, labor-intensive hand-optimization process.

…

Here is recent, hard evidence that the Groq compiler is just like all the other (non-Google) VLIW compilers. From their own marketing doc:

Take a moment to carefully read this snippet and form your own opinion/understanding.

They do not specify what “today” means so I extrapolated via linear fit.

I believe the following are reasonable assumptions:

The Groq team achieved optimal performance of Llama-2 70B approximately 48 days after the model was released.

They have not achieved meaningfully better results since then, otherwise the marketing document would have been updated.

All the compiler, software, and systems engineers spent 100% of their time crunching to get this one model working, because they have no customers and nothing else to do.

What conclusions can we make based on this first-party information Groq so helpfully provided?

It took the entire team of foremost experts of Groq architecture 5 days just to get the compiler to spit something out that functions.

The 144-wide VLIW compiler completely failed at its job, delivering 1/30th (3.3%) of the optimal performance for that particular model.

The super-expert team spent approximately 43 days hand-writing assembly code to optimize performance and improve average VLIW bundle occupancy.

This is standard VLIW stuff.

This is what happens when you try to compile AI models to 144-wide VLIW.

This time is not different.

The compiler is practically useless. (not the compiler team’s fault)

If true, this compiler problem is a show-stopper in the old business model. OEMs won’t buy LPUs if enterprises can’t write software for them. However, the problem is abstracted away with the inference as a service approach. App developers aren’t exposed to the complexities of the compiler and writing software on the chips. They simply get fast LLM latency, and it gets better over time. Groq does the heavy lifting to wring performance out of the model on their hardware.

Challenges for Groq

Can Groq scale?

Does Groq have a plausible path to future performance improvement?

Some folks suggest Groq can’t scale because it has reached the limits of its architecture. Memory capacity is the main concern, as Groq’s chips have no DRAM and only 230MB of SRAM each. A large model like Llama 2 70B needs about 70GB for its weights alone at 8-bit precision, which at 230MB per chip works out to more than 300 chips and, therefore, a massive Groq system. According to Next Platform,

Groq required 576 chips to perform Llama 2 inference for benchmarking (8 racks * 9 servers/rack * 8 chips/server)
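The arithmetic behind these figures is easy to check. The following Python sketch uses only the numbers quoted above (70B parameters at 8-bit precision, 230MB of SRAM per chip, and the 8 x 9 x 8 benchmark system). It assumes decimal megabytes and counts model weights only, so it is a lower bound rather than a description of how Groq actually partitions the model.

```python
# Figures quoted in the text.
PARAMS = 70_000_000_000            # Llama 2 70B parameter count
BYTES_PER_PARAM = 1                # 8-bit precision
SRAM_PER_CHIP_BYTES = 230_000_000  # 230MB, assuming decimal megabytes

weights_bytes = PARAMS * BYTES_PER_PARAM

# Ceiling division: chips needed just to hold the weights in SRAM.
min_chips_for_weights = -(-weights_bytes // SRAM_PER_CHIP_BYTES)

# Benchmark system described by Next Platform.
benchmark_chips = 8 * 9 * 8  # racks * servers/rack * chips/server
benchmark_sram_bytes = benchmark_chips * SRAM_PER_CHIP_BYTES

print(f"weights: {weights_bytes / 1e9:.0f} GB")
print(f"minimum chips for weights alone: {min_chips_for_weights}")
print(f"benchmark system: {benchmark_chips} chips, "
      f"{benchmark_sram_bytes / 1e9:.2f} GB of SRAM")
```

The weights alone need at least 305 chips, while the 576-chip benchmark system offers about 132GB of SRAM in total. The extra capacity presumably covers working state such as activations and the KV cache, though the sources quoted here do not break that down.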

On the other hand, Groq is only on a 14nm foundry process. Groq’s next tapeout on 4nm promises to help alleviate some of its current bottlenecks by making room for more memory and even more compute. Again from Next Platform,

Thinking ahead to that next-generation GroqChip, Ross says it will have a 15X to 20X improvement in power efficiency that comes from moving from 14 nanometer GlobalFoundries to 4 nanometer Samsung manufacturing processes. This will allow for a lot more matrix compute and SRAM memory to be added to the device in the same power envelope – how much remains to be seen.

How long can they sustain their current economics?

SemiAnalysis suggests that Groq, like most other inference API providers, provides inference-as-a-service below cost.

Using venture capital to subsidize a loss-leader strategy is a common practice for fueling growth, especially in software businesses with relatively low product development costs. However, it raises concerns when the venture capital raised was already deployed for the significant upfront cost of chip manufacturing. Furthermore, the size of Groq’s systems suggests fast inference will always be very CapEx heavy for Groq.

Questions for the Reader

Should Groq simultaneously pursue both business models?

As developers find success building apps on Groq’s API, Groq has tangible use cases to pitch to OEMs — the Catch-22 from earlier is gone.

Should Groq pursue both inference-as-a-service AND try to sell their chips to OEMs?

Groq is signaling they are open to both business models:

The traditional business model provides Groq with a pathway to significantly higher production volumes, enabling them to achieve economies of scale.

However, Groq’s inference API business is differentiated solely by its LPUs.

What’s preventing an upstart from buying Groq hardware and building an inference-as-an-API competitor?

Should Groq pursue both business models or double down on one?

Did Groq unlock the traditional business model for other companies?

Groq worked hard to show OEMs why AI accelerators are necessary for hyperfast LLM inference.

Did they just make competition easier? Many other startups are creating AI inference accelerators, such as Tenstorrent, Etched, MatX, UntetherAI, etc. Will these competitors now have a much easier time selling to OEMs?

Note that competitors have yet to demonstrate LLM inference in production.

How defensible is this hyperspeed inference as a service business?

What’s stopping AI accelerator upstarts from building an API and copying this business model? And what if their architecture is more scalable, resulting in a better roadmap and economics?

Would they quickly pull away a significant number of Groq’s customers?

After all, the cost of switching for developers is essentially swapping API keys and tweaking some code.
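Here is a sketch of what that switch might look like in application code, assuming both providers accept the same chat-style request shape. That assumption is mine, not something the providers discussed here guarantee. The provider names, URLs, and model names below are placeholders, and the function only assembles a request without sending it.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    base_url: str
    model: str


# Hypothetical providers: these URLs and model names are placeholders, not real endpoints.
PROVIDERS = {
    "provider_a": Provider("https://api.provider-a.example/v1", "placeholder-model-a"),
    "provider_b": Provider("https://api.provider-b.example/v1", "placeholder-model-b"),
}


def build_chat_request(provider_name: str, api_key: str, prompt: str) -> dict:
    """Assemble (but do not send) an HTTP request for the chosen provider."""
    provider = PROVIDERS[provider_name]
    return {
        "url": f"{provider.base_url}/chat/completions",
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps({
            "model": provider.model,
            "messages": [{"role": "user", "content": prompt}],
        }),
    }


# Switching providers changes one argument and one key; the app logic is untouched.
req_a = build_chat_request("provider_a", "KEY_A", "Summarize this article.")
req_b = build_chat_request("provider_b", "KEY_B", "Summarize this article.")
print(req_a["url"])
print(req_b["url"])
```

If the request shape really is shared, the only differences are the base URL, the key, and the model name. That is what makes the switching cost so low.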

Cadence Pressures

Groq’s only tapeout was four years ago — a 14nm GlobalFoundries process from 2020. Groq is looking to ramp their next chip on 4nm with Samsung sometime in 2025.

Meanwhile, Nvidia and AMD are on a remarkable single-year cadence for GPU-based AI accelerators. Moreover, competing AI accelerator startups will be taping out in the meantime, possibly before Groq’s next batch is back.

Might the latency of GPU-based AI accelerators or competitive startup offerings close in on Groq? How might that impact their business model decisions?

Open-Source Models

Groq currently offers open-source models that are less capable than proprietary alternatives. Should Groq spend time trying to partner and share revenue with proprietary model-builders, or should it simply wait as open-source offerings' abilities continue to improve? Does it matter if the open-source alternatives never match or exceed the quality of proprietary offerings?

Lessons for other cloud AI hardware startups

Finally, here are some quick takeaways for aspiring startups. None of these are new; they just reinforce the importance of getting the basics right.

Start with the customer problem

A “solution looking for a problem” can take you down the wrong path

Ask if you need to take the traditional route-to-market and corresponding business model or if other business models will work

Think hard about how you’ll differentiate and defend your business

Don’t let the founder’s brand/reputation cause you to skip the basic steps

When a step-change happens around you, it could be an opportunity for you. Make the most of your luck!

Conclusion

Groq’s journey has been inspiring, enlightening, and entertaining.

I’m sure we’ll be back for several follow-ups here on Chipstrat, as Groq has many important decisions ahead.

If you enjoyed this post, please subscribe and share it with other like-minded individuals!