Amazon's Nvidia Entanglement

AWS will continue buying Nvidia GPUs even as competition improves. AMD and AI ASICs like Groq can compete for a spot in AWS's portfolio.

My previous article suggested that AI ASICs will eventually dethrone GPUs for inference and training. Of course, eventual victory is easy to claim; it’s much harder to say when. Timing the future is hard, so I won’t even try. Instead, let’s study Nvidia’s largest customers to understand who might cut back on Nvidia spending soonest.

We’ll start with Amazon.

Amazon and Nvidia

It’s helpful to think of AWS, Amazon’s cloud computing business, as a landlord that buys Nvidia GPUs and leases them to customers. Anthropic and Hugging Face are two examples of marquee AWS AI tenants.

It’s a prosperous time to be in this AI hardware rental business.

To begin, Nvidia's supply limitations force many companies to rent, at least temporarily. Moreover, AWS leveraged its buying clout as a hyperscaler to obtain an early and large allocation of H100 GPUs, solidifying its position as a dominant player in the limited rental market.

The hefty initial investment needed for Nvidia AI hardware also works to AWS's advantage, as rentals offer companies a "try before you buy" option. For reference, the smallest practical H100-based system a company can buy is the DGX server with 8 interconnected GPUs, which can cost ~$300K. Renting is a pragmatic approach, especially given the unclear economic payoff of Generative AI for certain industries.
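To make the rent-versus-buy trade-off concrete, here is a rough back-of-the-envelope sketch. The ~$300K price and the 8-GPU configuration come from the paragraph above; the hourly rental rate and the utilization levels are purely hypothetical placeholders, not AWS prices. The sketch also ignores power, hosting, staffing, and resale value, so treat it as an illustration of the reasoning rather than a real TCO model.

```python
HOURS_PER_MONTH = 730  # average hours in a calendar month (8,760 / 12)


def breakeven_months(purchase_price_usd: float,
                     gpu_count: int,
                     hourly_rate_per_gpu_usd: float,
                     utilization: float = 1.0) -> float:
    """Months of renting after which cumulative rent equals the purchase price.

    utilization is the fraction of hours the renter actually pays for.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    monthly_rent = (gpu_count * hourly_rate_per_gpu_usd
                    * HOURS_PER_MONTH * utilization)
    return purchase_price_usd / monthly_rent


if __name__ == "__main__":
    DGX_PRICE_USD = 300_000    # ~$300K, from the article
    GPUS_PER_DGX = 8           # from the article
    ASSUMED_HOURLY_RATE = 4.0  # hypothetical $/GPU-hour, NOT a quoted price

    for utilization in (1.0, 0.5, 0.25):
        months = breakeven_months(DGX_PRICE_USD, GPUS_PER_DGX,
                                  ASSUMED_HOURLY_RATE, utilization)
        print(f"utilization {utilization:.0%}: break-even after {months:.1f} months")
```

Under these assumed numbers, the lower a company's expected utilization, the longer renting stays cheaper than buying, which is one way to read the "try before you buy" appeal.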

While renting out AI hardware is a great business for AWS, acquiring Nvidia GPUs is still incredibly expensive. Some sources speculate that ~$4 billion of AWS's $8 billion CapEx in Q3 2023 was dedicated to AI hardware [1]. As an aside, it’s mind-boggling to imagine signing off on several billion dollars of hardware purchases in a single quarter!

What if Amazon could supply their own infrastructure? Could they cut out the middleman’s margin and pass the savings on to the customer?

It turns out Amazon is working on just that, partnering with ASIC design company Alchip to build custom chips for training and inference with the self-explanatory names Trainium and Inferentia.

Here we see a concrete example of AI ASICs creeping into the mix to compete against GPUs, underscoring what we’ve been discussing in previous posts.

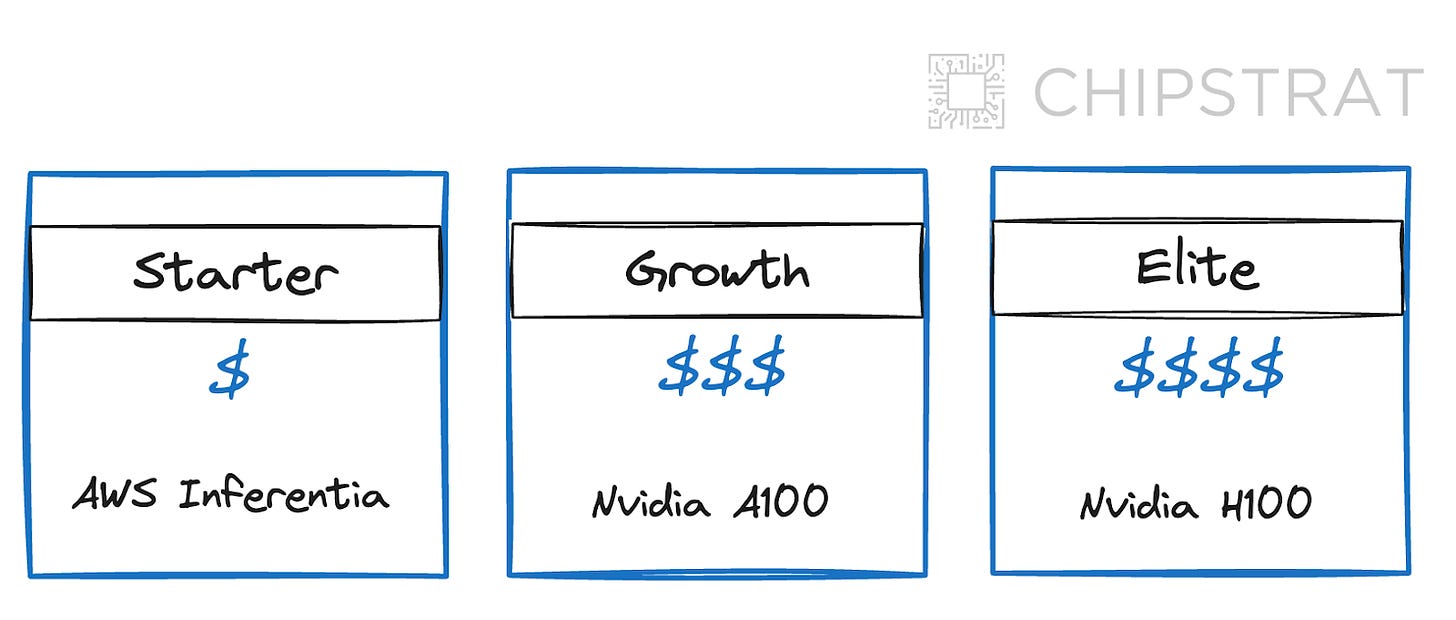

In an overly simplified way, we can think of AWS as managing a portfolio of AI hardware rentals.

Trainium and Inferentia introduce a cost-effective alternative to Nvidia within AWS's portfolio, expanding the potential customer base.

Additionally, Inferentia and Trainium likely exist to reduce Amazon’s e-commerce AI costs, such as generating product recommendations. Amazon probably runs these workloads on Inferentia and Trainium, especially in situations where ultra-low latency isn’t necessary, like pre-computing recommendations [2]. If this is true, Amazon would use Inferentia and Trainium indefinitely unless a competitor emerges with a significantly lower TCO. Even then, the sunk cost fallacy and internal politics are real and make it hard to sunset a homegrown project.

The simple portfolio illustration above raises the question: how much demand is Inferentia pulling away from Nvidia? I can’t find public information on it, so let’s reason from first principles. The main question is whether Inferentia’s performance and price are competitive enough with the A100 and H100. If they are, we would expect to see Nvidia GPU erosion.

SemiAnalysis reports that Trainium and Inferentia are not competitive for LLMs despite significant discounts. AWS's customer success stories fail to counter this criticism, merely showcasing applications involving smaller models (Airbnb) or conventional machine learning (Snap) [3]. In the absence of supportive anecdotes, I’m skeptical that AWS Inferentia diverts meaningful share from Nvidia’s GPUs.

Yet Inferentia’s existence alone is enough to validate the idea that AI ASICs will eventually compete with GPUs on performance and cost, beginning with batch-processing situations that don’t require the lowest latency.

One could argue that Inferentia’s inability to support LLMs can be traced back to initial v1 designs likely occurring before modern LLMs came into existence — “Attention is All You Need” was published in 2017, and Inferentia was announced in 2019. It can take 2-3 years from design to tapeout, so it’s possible Inferentia was designed before or right around the time Google’s seminal work was published.

Looking ahead, it's reasonable to speculate that AWS’s AI rental business will continue to make sizable next-gen AI GPU purchases from Nvidia to ensure a broad range of choices for its clientele. This trend will continue, even as competitors emerge.

That said, it’s valid to wonder if the total amount of next-gen Nvidia purchases will decline, especially if AWS’s customers struggle to convince their CFOs to continue renting AI hardware.

Amazon and AMD

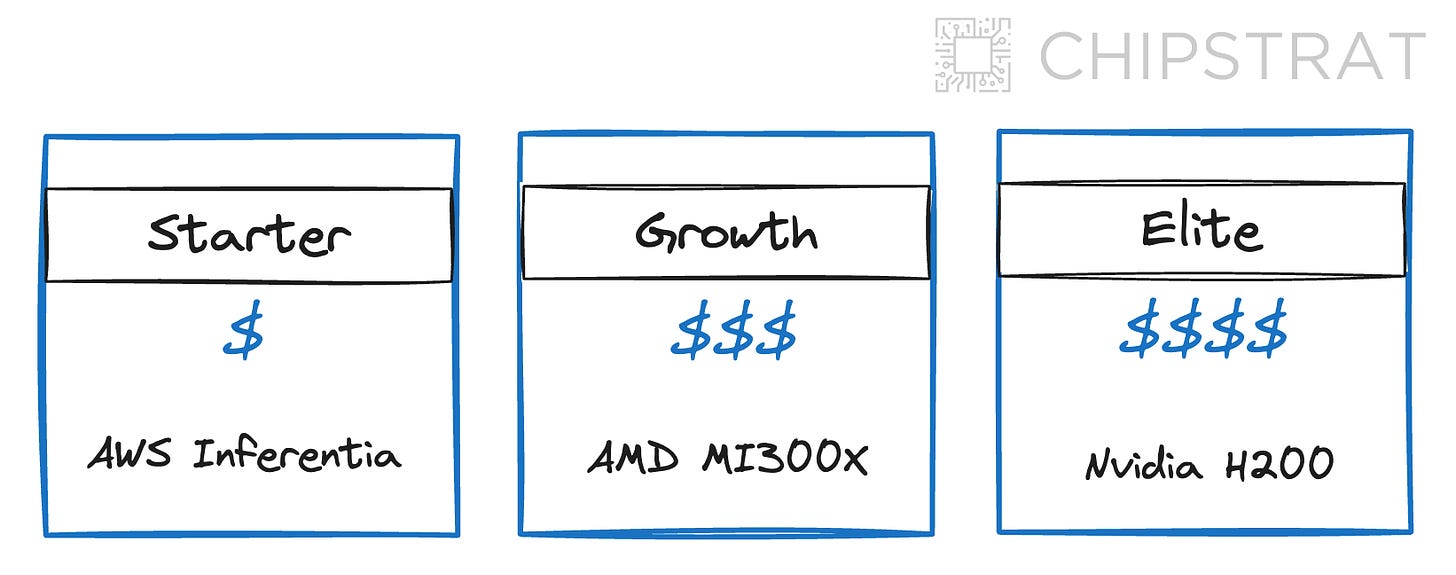

Our mental framing of “AWS as a landlord” showcases the opportunity for AMD to compete as a high-performance yet affordable alternative to Nvidia.

Nailing price and performance is critical for AMD to truly compete in AWS's portfolio, yet reducing developers' switching costs is equally important. AMD must ensure popular inference and training libraries like PyTorch and vLLM run optimally on MI300X to make it simple for prospective customers to switch vendors.

AMD has a lot of catching up to do.

Beyond providing an ecosystem of optimized libraries (CUDA) for their parallel processors, Nvidia launched Nvidia AI Enterprise to ease the development, deployment, and maintenance burdens of AI applications on their hardware.

Nvidia’s CEO Jensen Huang explains on the recent 4Q23 earnings call,

Software is fundamentally necessary for accelerated computing.

This is the fundamental difference between accelerated computing and general-purpose computing that most people took a long time to understand.

And now, people understand that the software is really key. And the way that we work with CSPs [cloud service providers], that's really easy. We have large teams that are working with their large teams.

However, now that generative AI is enabling every enterprise and every enterprise software company to embrace accelerated computing, it is now essential to embrace accelerated computing because it is no longer possible, no longer likely anyhow to sustain improved throughput through just general-purpose computing. All of these enterprise software companies and enterprise companies don't have large engineering teams to be able to maintain and optimize their software stack to run across all of the world's clouds and private clouds and on-prem.

So we are going to do the management, the optimization, the patching, the tuning, the installed-base optimization for all of their software stacks. And we containerize them into our stack. We call it Nvidia AI Enterprise. And the way we go to market with it is — think of that Nvidia AI Enterprise now as a runtime like an operating system. It's an operating system for artificial intelligence.

And we charge $4,500 per GPU per year. And my guess is that every enterprise in the world, every software enterprise company that are deploying software in all the clouds and private clouds and on-prem, will run on Nvidia AI Enterprise, especially obviously for our GPUs. And so this is going to likely be a very significant business over time. We're off to a great start. And Colette mentioned that it's already at $1 billion run rate and we're really just getting started.

As stated, Nvidia AI Enterprise allows companies to offload the management of AI development and deployment, including integration, performance tuning, and scaling.

Simply put, it reduces the barrier to entry for companies and their developers.

This ought to worry AMD: Nvidia is making it easier for prospective customers to hop aboard the Nvidia/CUDA train. This enterprise offering is so useful that early adopters have already signed up to collectively pay $1B/year for it. Contrast that with the software community’s view of AMD’s ROCm, which (as of Feb 2023) was not good, and which lacks any sort of white-glove enterprise support.
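For a sense of scale, the two figures from the call ($4,500 per GPU per year and a $1 billion run rate) imply a rough count of licensed GPUs. The sketch below assumes every dollar of the run rate comes from per-GPU subscriptions at the quoted price, with no discounts or other pricing tiers; that assumption is mine, not Nvidia's, so the result is an order-of-magnitude estimate at best.

```python
def implied_gpu_licenses(annual_run_rate_usd: float,
                         price_per_gpu_per_year_usd: float) -> float:
    """GPU count implied by a run rate, ASSUMING uniform list pricing."""
    if price_per_gpu_per_year_usd <= 0:
        raise ValueError("price must be positive")
    return annual_run_rate_usd / price_per_gpu_per_year_usd


if __name__ == "__main__":
    RUN_RATE_USD = 1_000_000_000   # "$1 billion run rate", from the call
    PRICE_PER_GPU_USD = 4_500      # "$4,500 per GPU per year", from the call

    gpus = implied_gpu_licenses(RUN_RATE_USD, PRICE_PER_GPU_USD)
    print(f"Implied licensed GPUs: roughly {gpus:,.0f}")
```

Any discounting would push the real number of covered GPUs higher than this estimate, while bundled or non-GPU revenue in the run rate would push it lower.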

AMD must reduce the burden on developers if it wants meaningful adoption. It should consider imitating Nvidia AI Enterprise’s customer-centric approach, especially given ROCm’s current adoption woes.

Nvidia’s marketing sleight-of-hand

A quick aside on Jensen’s commentary.

In industry parlance, “accelerated computing” broadly refers to systems that offload inherently parallel algorithms to parallel processors like GPUs; see this explanation of accelerated computing, ironically from AMD.

In a clever sleight-of-hand, Nvidia refers to their GPUs as “accelerated computing” and CPUs as “general-purpose computing”. This anchors Nvidia’s “accelerated” hardware against yesteryear’s “general-purpose” CPUs, which keeps the audience looking backward in the rearview mirror and feeling good about how quickly Nvidia is speeding ahead.

This adoption of the “accelerated” moniker combined with the positioning of GPUs against CPUs distracts listeners from the main forward-looking challenge, namely that Nvidia’s GPUs are actually general-purpose hardware and must compete with truly accelerated hardware competition in the long run — AI ASICs.

In my experience, the easiest way to comprehend Jensen during earnings calls is to mentally replace any mention of “accelerated computing” with “Nvidia GPUs” and “general-purpose computing” with “CPUs”.

Amazon and AI ASIC vendors

Can AI ASIC startups like Groq break into the AWS AI portfolio?

Theoretically yes, but they’ll need to compete on performance, price, and developer experience.

Groq’s current inference system costs are arguably uncompetitive, especially if the customer can’t keep the system fully utilized.
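The utilization point can be illustrated with a small sketch: hardware cost is fixed, so the cost per token served rises as utilization falls. Every number below (system price, lifetime, peak throughput) is a hypothetical placeholder rather than a Groq figure, and the model ignores power, hosting, and networking costs.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def cost_per_million_tokens(system_cost_usd: float,
                            lifetime_years: float,
                            peak_tokens_per_second: float,
                            utilization: float) -> float:
    """Amortized hardware cost per one million tokens actually served."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_served = (peak_tokens_per_second * utilization
                     * lifetime_years * SECONDS_PER_YEAR)
    return system_cost_usd / tokens_served * 1_000_000


if __name__ == "__main__":
    # All three values are hypothetical, chosen only to show the shape of the curve.
    SYSTEM_COST_USD = 1_000_000
    LIFETIME_YEARS = 3
    PEAK_TOKENS_PER_SECOND = 10_000

    for utilization in (1.0, 0.5, 0.1):
        cost = cost_per_million_tokens(SYSTEM_COST_USD, LIFETIME_YEARS,
                                       PEAK_TOKENS_PER_SECOND, utilization)
        print(f"utilization {utilization:.0%}: ${cost:.2f} per million tokens")
```

Because the relationship is inversely proportional, halving utilization doubles the amortized cost per token, whatever the actual system price turns out to be.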

Groq's upcoming jump from 14nm transistors (GlobalFoundries) to 4nm (Samsung) could lead to performance gains and cost reductions from increased transistor density and decreased power consumption. Yet the competitiveness of their next-generation chips hinges on more than transistor density. With foundation models growing, success requires addressing memory and networking at the system level to effectively challenge Nvidia's dominance.

Fellow AI ASIC competitor Tenstorrent took a manufacturing approach similar to Groq's, first launching a demo chip on an older node and showcasing that chip to raise funding for a cutting-edge process run. Tenstorrent used GlobalFoundries’ 12nm in 2020 and raised $100M in 2023 for a Samsung 4nm production run.

Regarding developer experience, there’s a long and interesting article suggesting Groq’s compiler is unusable and thus Groq will never shift from their inference-as-a-service business model. I don’t know if it’s true, but if it is, this would be a barrier to adoption of Groq chips on AWS.

Time will tell. It will be fun to watch Groq’s and Tenstorrent’s next-gen chips ship and see how the economics, performance, and developer experience turn out.

Looking ahead to a future where multiple AI ASICs are competitive with Nvidia GPUs on performance, cost, and developer experience, it’s easy to imagine customers shifting inference and training workloads to these offerings. Yet that doesn’t mean AWS will stop buying GPUs entirely, as they will still have a wide variety of customers wanting to run inherently parallel tasks on general-purpose parallel processors.

AWS will continue to buy Nvidia GPUs, but customers may not need as many or be willing to pay as much.

Summary

In summary, Amazon will likely always purchase GPUs, although the mix should shift toward AI ASICs in the long run. Through Nvidia’s publicly available roadmap, which runs to 2025, AWS will almost certainly continue to buy every successive chip to meet market demand and keep a high-end offering in its portfolio. How many it acquires depends on how well AWS customers turn AI hype into profitability.

AMD could compete for a spot in the AWS lineup and pull share away from Nvidia, but doing so will require significant investment in the developer experience, and AMD should consider an enterprise-focused solution like Nvidia AI Enterprise.

Given AMD’s economies of scale, it will likely compete on performance, price, and developer experience sooner than AI ASIC startups, which may struggle with cost.

Amazon is a huge internal customer of AWS's infrastructure and will help feed Inferentia demand for some time. This workload will likely not erode AWS’s Nvidia GPU purchases.

My takeaway: Is Nvidia’s revenue from AWS at risk due to ASICs? Yes, but not so much in the near term.