AMD's Earnings: Playbook Strength and New Strategic Update

Strategy update, Microsoft wins, Llama 3.1 assist, Demand concerns, Training worries, Client flex, Semi-custom drag

Did you know Block is designing a 3nm Bitcoin mining ASIC?

Last week, I took a short breather from traditional semiconductor coverage to write a mini-series researching Block, the fintech company behind Square and Cash App. The first post explains Block’s hardware strategy, and the second zooms in on Block’s Bitcoin hardware.

Moving on to AMD’s Q2 earnings call, which included an interesting strategic update.

AMD’s Earnings

As context for the quarter, let’s recap AMD’s strategy.

AMD’s Strategy

Long Game

AMD is playing the long game with Instinct; MI300X is simply the first at-bat.

From Lisa Su’s June updates,

Lisa Su: We are at the beginning of what AI is all about. This is like a 10-year arc we’re on. We’re through maybe the first 18 months.

AMD’s plan centers on a roadmap that consistently delivers strong performance and undeniable cost advantages, beginning with MI300.

Ring any bells? This approach mirrors the playbook AMD used to establish a foothold in the data center CPU market.

As with CPUs, the AI accelerator long game is not won with the first product but through successively better at-bats. And no mistakes!

As Lisa recounted from their EPYC journey,

Ben Thompson: You have to win the roadmap.

Lisa Su: You have to win the roadmap and that was very much what we did in that particular point in time.

BT: And there are customers coming along that actually will buy on the roadmap.

LS: That’s right and by the way, they’ll ask you to prove it.

In Zen 1, they were like, “Okay, that’s pretty good”, Zen 2 was better, Zen 3 was much, much better.

That roadmap execution has put us in the spot where now we are very much deep partners with all the hyperscalers, which we really appreciate.

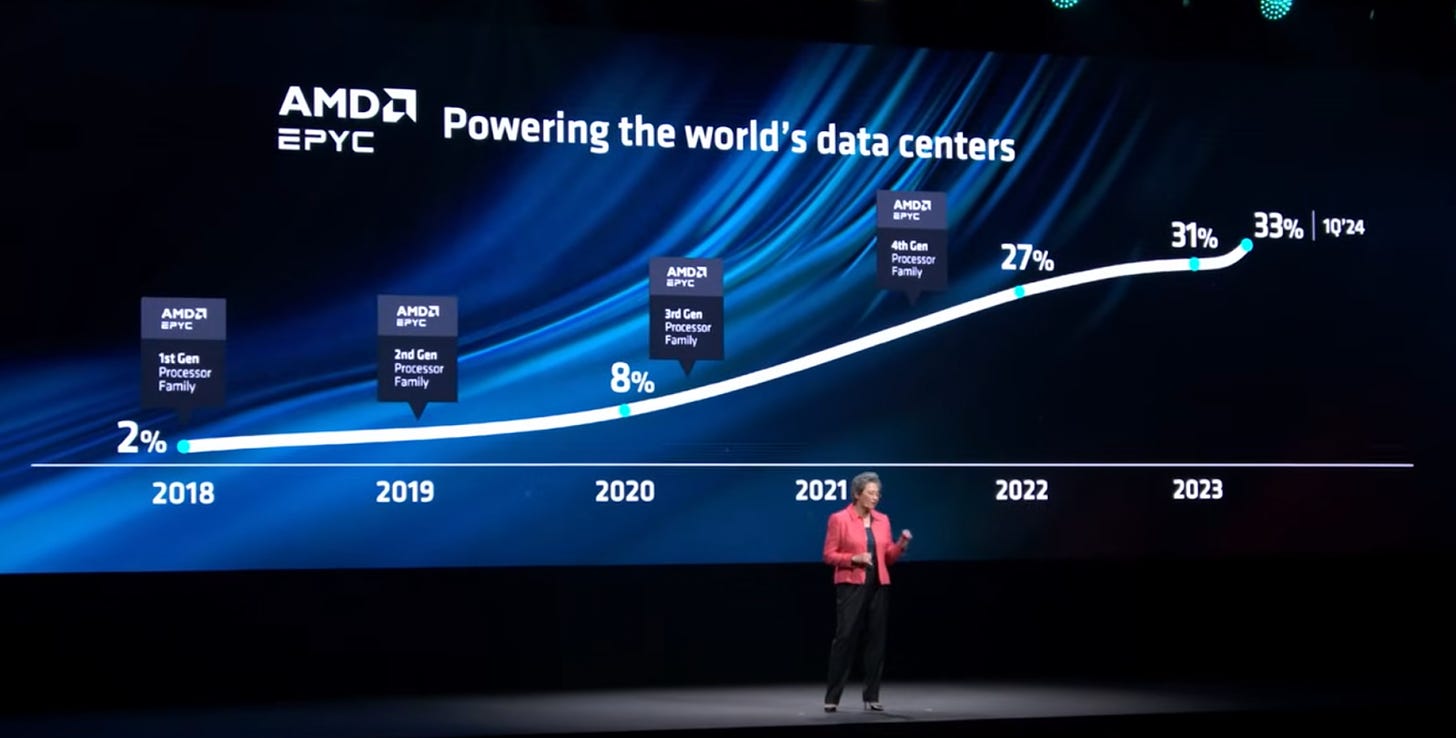

EPYC’s market share gains were the culmination of years of consistent execution.

Lisa then connected the dots for Ben (and us) regarding AMD’s AI strategy:

LS: And as you think about the AI journey, it is a similar journey.

If there’s one takeaway, remember this: Enterprise customers invest in a roadmap, not a product.

Customers Want Choice

During these early innings of the Gen AI explosion, customer choice was limited to a single product from a single company, hamstrung by limited supply. Price was seemingly of no concern.

However, cost optimization is coming, especially given the unclear near-term ROI of Generative AI. The biggest companies will continue to invest as long as investors can stomach it, but enterprise CFOs will increasingly ask IT to slow the growth of Gen AI spending.

AMD has signaled its desire to give companies more choice over their spending by offering affordable alternatives.

Lisa Su: When you look at what the market will look like five years from now, what I see is a world where you have multiple solutions. I’m not a believer in one-size-fits-all, and from that standpoint, the beauty of open and modular is that you are able to, I don’t want to use the word customize here because they may not all be custom, but you are able to … tailor the solutions for different workloads, and my belief is that there’s no one company who’s going to come up with every possible solution for every possible workload.

LS: At some point, you’re going to get to the place where, “Hey, it’s a bit more stable, it’s a little bit more clear”, and at the types of volumes that we’re talking about, there is significant benefit you can get not just from a cost standpoint, but from a power standpoint.

As we’ll discuss below, AMD is delivering on that value proposition this quarter:

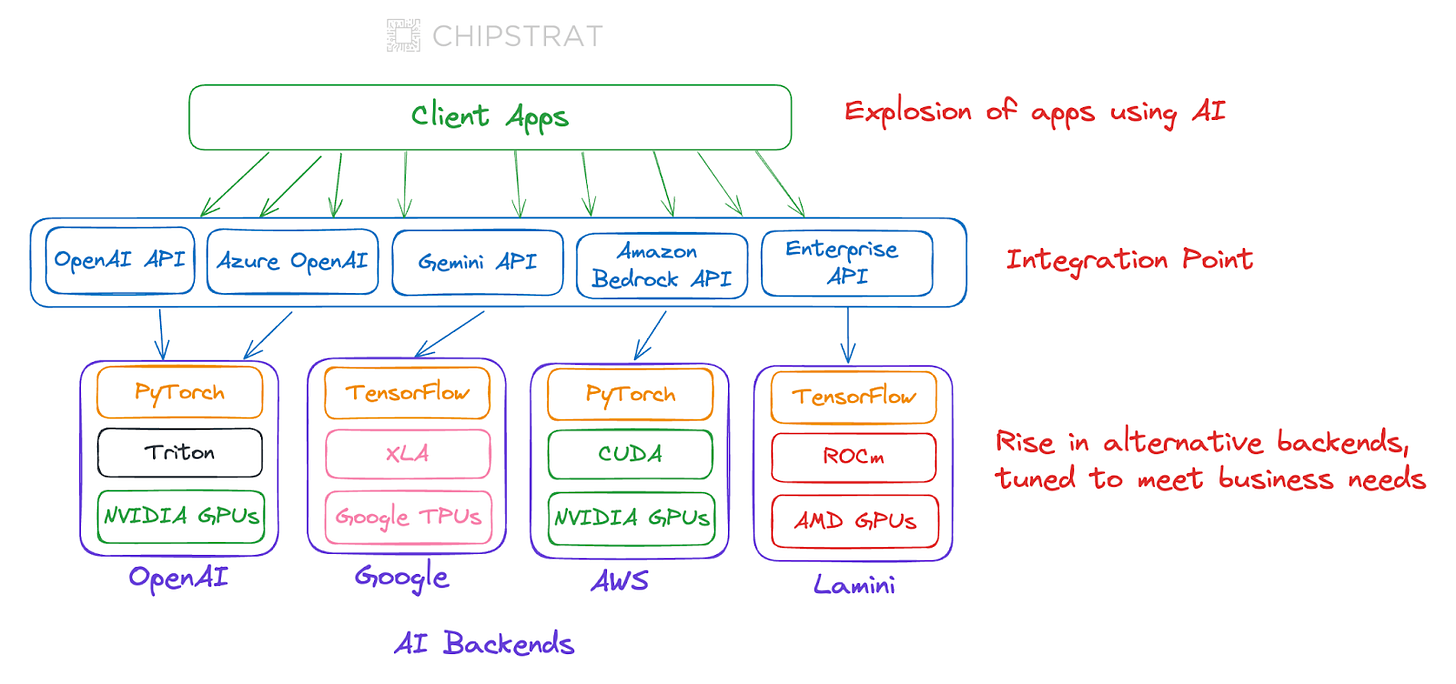

Choice Is Possible With Software Abstractions

Early in the Gen AI explosion, CUDA was the integration point for AI software developers. Now, higher-level abstraction layers are becoming the point of integration, increasingly giving enterprises flexibility in choosing their hardware provider.

Client apps are increasingly being built on top of inference APIs, abstracting away Nvidia and even opening the door for competition from startups like Groq. ML developers can increasingly come up for air from CUDA land and write code at a higher level of abstraction with libraries like PyTorch.

Over the past year, AMD has been prioritizing ROCm and supporting higher-level open-source ecosystems like OpenXLA, PyTorch, etc.
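To make the abstraction argument concrete, here is a toy Python sketch. The `InferenceBackend` protocol, the two backend classes, and `summarize` are hypothetical names invented for illustration; they are not AMD's, Nvidia's, or any real inference provider's API, and the "backends" just echo text instead of running a model. The point is only that application code written against an interface never mentions the hardware, so switching vendors becomes a configuration change rather than a rewrite.

```python
from typing import Protocol


class InferenceBackend(Protocol):
    """Hypothetical interface an app codes against; the hardware hides behind it."""

    name: str

    def generate(self, prompt: str) -> str: ...


class NvidiaBackend:
    """Hypothetical stand-in for a CUDA-backed inference service."""

    name = "cuda"

    def generate(self, prompt: str) -> str:
        # A real backend would call a model server; this toy just transforms the text.
        return f"[{self.name}] {prompt.upper()}"


class AmdBackend:
    """Hypothetical stand-in for a ROCm-backed inference service."""

    name = "rocm"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.upper()}"


def summarize(doc: str, backend: InferenceBackend) -> str:
    """Application logic: depends only on the interface, never on a vendor."""
    return backend.generate(f"summarize: {doc}")


# Choosing a hardware vendor is a lookup in configuration, not a code change.
BACKENDS = {"cuda": NvidiaBackend(), "rocm": AmdBackend()}

for key in ("cuda", "rocm"):
    print(summarize("q2 earnings", BACKENDS[key]))
```

PyTorch applies a similar idea at the framework level: its ROCm builds reuse the `torch.cuda` device namespace, so much PyTorch code written with Nvidia GPUs in mind can run on AMD GPUs without source changes.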

Strategy Summarized

We can summarize AMD’s AI accelerator strategy as follows:

expand customer choice

compete on price and certain performance characteristics

deliver a consistent roadmap

minimize developer switching costs

The first three bullets are the EPYC playbook, and the fourth is required for AI.

So, how’s the strategy playing out for EPYC and Instinct?

Is the Playbook Working?

We’ll start with EPYC. Did Q2 demonstrate if the playbook still works?

EPYC

Data center segment revenue increased 115% year-over-year to a record $2.8 billion, driven by the steep ramp of Instinct MI300 GPU shipments and a strong double-digit percentage increase in EPYC CPU sales.

Those are promising gains. Are they from a rising tide lifting all boats, or is AMD’s boat rising while others are sinking?

According to AMD, it’s the latter. Hyperscalers and enterprises are leaving Intel for EPYC.

We are seeing hyperscalers select EPYC processors to power a larger portion of their applications and workloads, displacing incumbent offerings across their infrastructure with AMD solutions that offer clear performance and efficiency advantages.

Importantly, more than one-third of our enterprise server wins in the first half of the year were with businesses deploying EPYC in their data centers for the first time, highlighting our success attracting new customers, while also continuing to expand our footprint with existing customers.

Could this be AMD cherry-picking a few anecdotes, or is it broadly representative of true market share gains? Let’s see what Intel has to say. From Intel’s 10Q,

Server volume decreased 22% in Q2 2024 due to lower demand in a competitive environment

Ouch.

Notice Intel didn’t call out lower market demand. That’s lower Intel demand in a competitive market (AMD and Arm).

So yeah, it looks like EPYC’s playbook is still working.

Building Toward Choice in AI

Is the playbook showing early signs of working for Instinct?

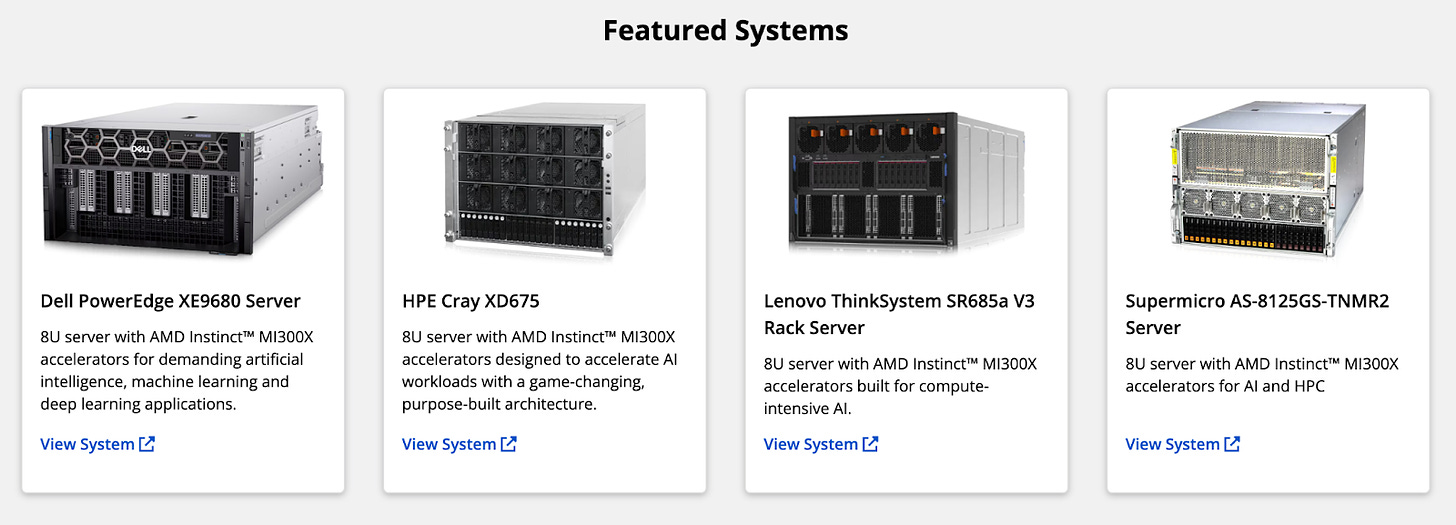

An important first baby step for anyone bringing an alternative to market is convincing the channel to sell it.

Our enterprise and Cloud AI customer pipeline grew in the quarter, and we are working very closely with our system and cloud partners to ramp availability of MI300 solutions to address growing customer demand. Dell, HPE, Lenovo, and Supermicro all have Instinct platforms in production, and multiple hyperscale and tier-2 cloud providers are on track to launch MI300 instances this quarter.

The channel is primed.

OEMs are enabling enterprises to host their own AI, while cloud providers rent Instinct capacity to customers who would rather not own the hardware.

But if the channel builds it, will they come?

It’s looking promising — if success with one of the biggest buyers in the world is any indication.

The Microsoft Home Run

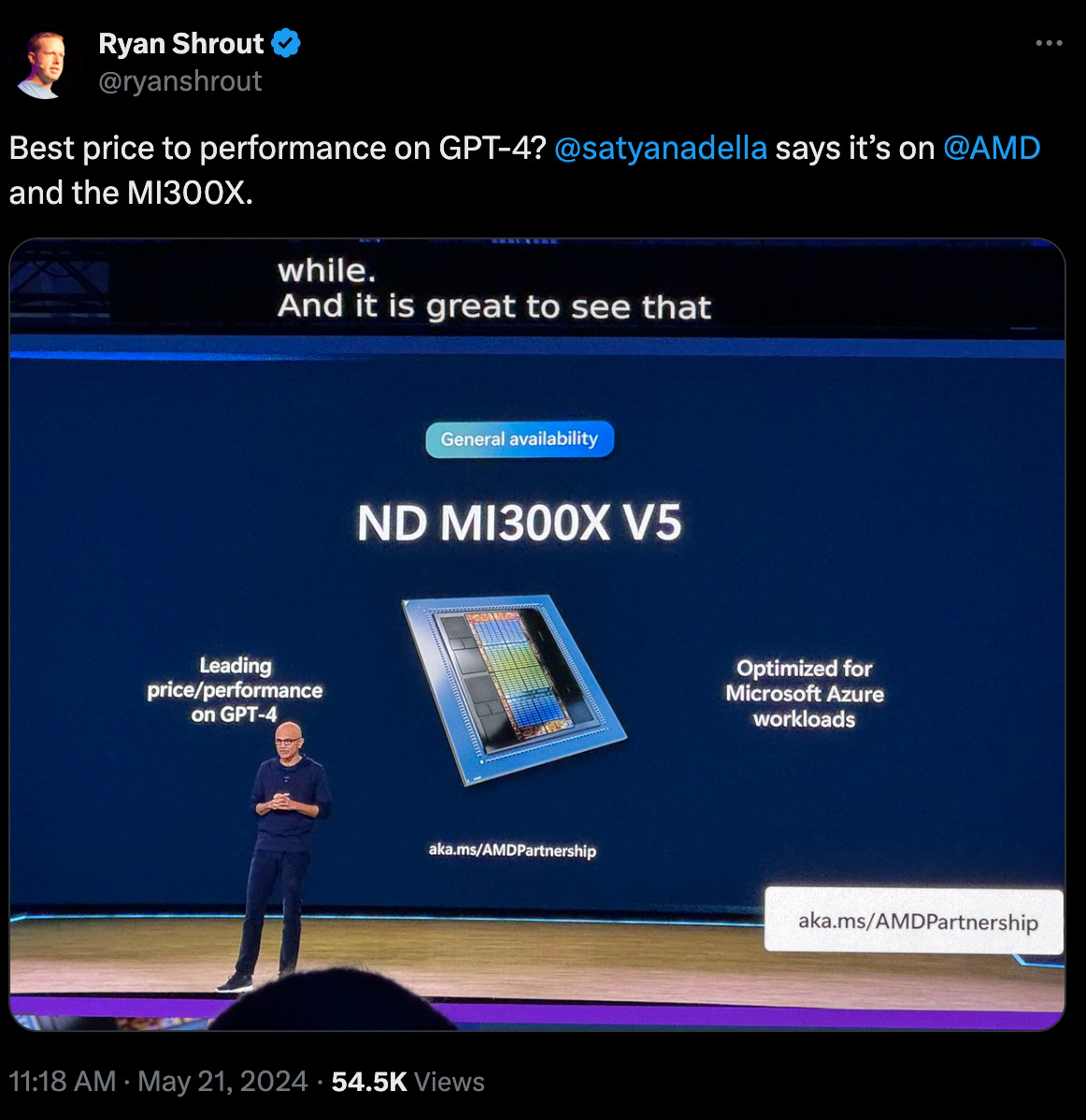

The best sign of the playbook unfolding for Instinct this quarter comes from Microsoft, arguably the company capitalizing the most on Generative AI so far.

Microsoft became the first large cloud service provider to announce the general availability of public MI300X instances in the quarter.

This is an important signal that validates the idea that AI accelerator customers want choice. Microsoft runs a hardware rental business (Azure) that specializes in serving enterprise customers, and Microsoft is signaling that choice is important for its enterprise tenants.

Even more encouraging is Microsoft's use of MI300X to power its own products.

Microsoft expanded their use of MI300X accelerators to power GPT-4 Turbo and multiple copilot services, including Microsoft 365 Chat, Word, and Teams.

This is huge for AMD.

Microsoft is one of the few companies in the world with a clear ROI from Generative AI, and it is entrusting this revenue-generating AI inference to MI300X.

Products like Microsoft 365 Copilots and GPT-4 Turbo need reliable, fast inference hardware that can handle serious traffic. MI300X is apparently up to the challenge.

Why does Microsoft use AMD?

Why did Microsoft entrust some of its traffic to MI300X?

The most bullish argument is that Microsoft wants to benefit from MI300X’s lower cost of ownership, as MI300X is reportedly much cheaper than H100. If true, we should expect to see an increase in Microsoft's Instinct fleet.

Alternatively, Microsoft’s AI accelerator diversification could simply be a negotiating tactic with Nvidia. That’s still bullish for AMD, as Instinct performance has to be “good enough” to be a negotiating chip. This also suggests Microsoft would continue to expand its AMD fleet.

More Than The Last Pick At Recess?

The least bullish idea is that Microsoft used up all of its H100s to meet inference demand, and MI300X was the only available backup option.

Even if this is true, AMD can still be proud that Instinct works in production at Microsoft. This still signals to the ecosystem that AMD’s technology roadmap is on the right track.

That said, this line of reasoning suggests Instinct is the last kid to be picked for the team at recess. Well, we need one more player, and you’re all that’s left; I guess we pick you.

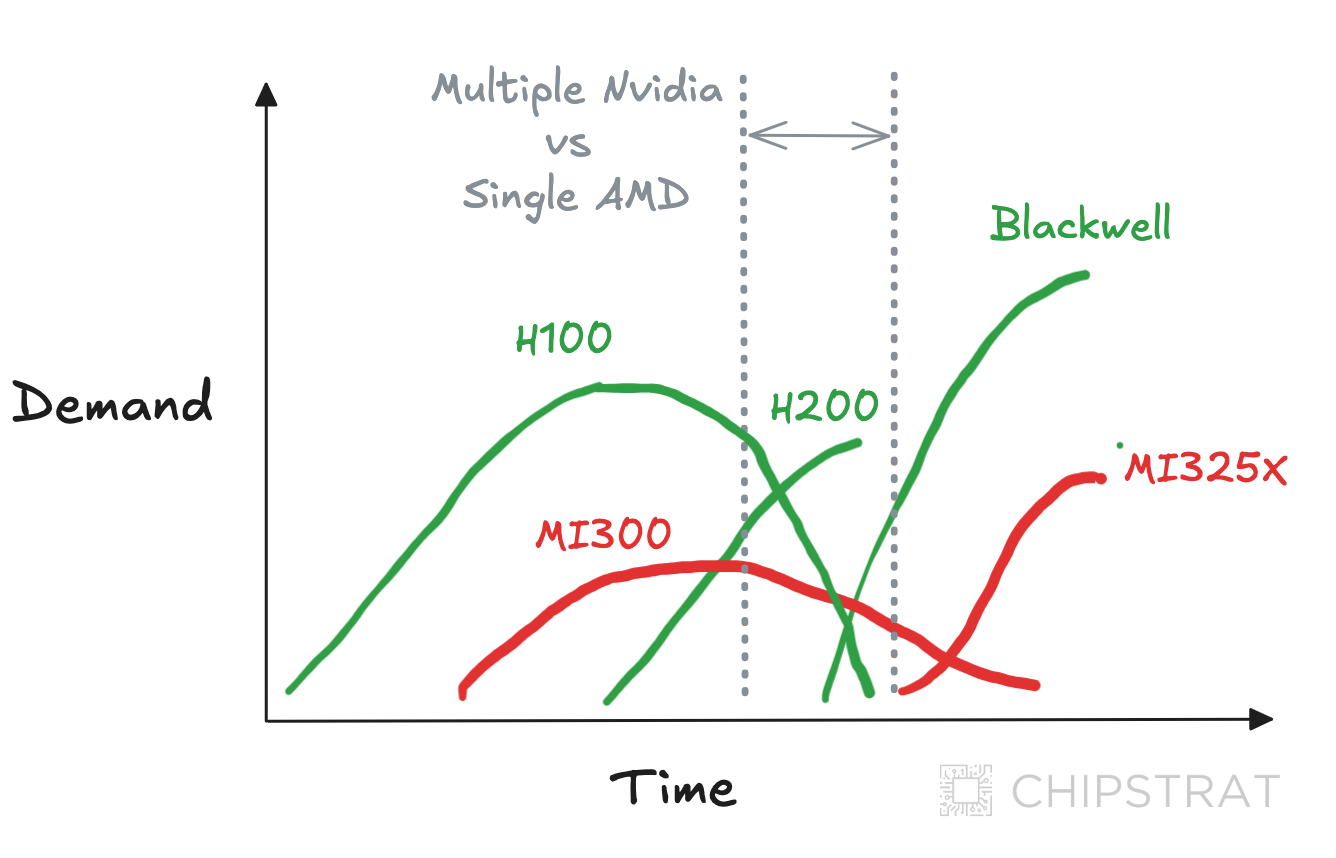

In other words, when it comes to the hierarchy of AI chips, it appears to be Nvidia’s upcoming Blackwell chips > Nvidia’s still-ramping H200 chips > Nvidia’s still-shipping H100 chips > AMD’s MI300 chips; as H100s become plentiful it is MI300 sales that will be gated by demand instead of supply.

Even if Instinct was the last kid Microsoft picked at recess, they still saw game time.

Improvement doesn’t happen on the bench (Gaudi, looking at you). Improvement happens on the field, in front of onlookers, under the big lights.

It’s Not Going To Get Any Easier for AMD

The impact of H200 availability on MI300 sales will be a telling sign of Instinct's desirability, even more so when Blackwell ships.

Will any teams pick MI300 when both H100 and H200 are available? And what if Blackwell enters the scene before MI325X is widely available? That could be a rough period for AMD.

Fortunately for AMD, The Information reports a 3-month delay for Blackwell. If true, MI300 should see more game time, and MI325X might get on the field before Blackwell.

For now, MI300X being picked last isn’t necessarily a death knell for Instinct. As long as MI325X, and especially MI350 and MI400, ship increasingly higher volumes, AMD’s playbook should remain intact.

Customer Choice: The Llama 3.1 Assist

The earnings call validated MI300X as a lower-cost alternative to Nvidia for an important workload.

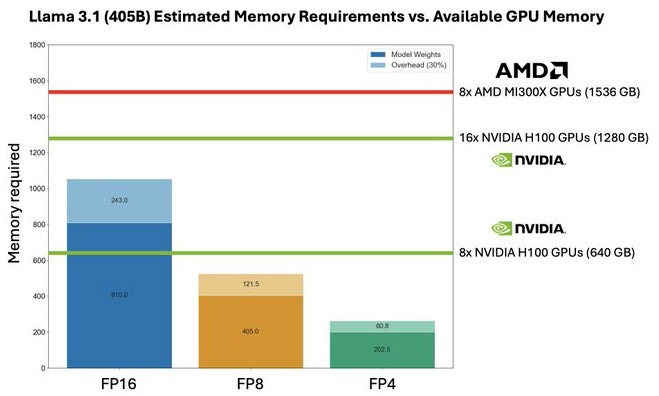

Lisa Su: Last week, we were proud to note that multiple partners used ROCm and MI300X to announce support for the latest Llama 3.1 models, including their 405 billion parameter version that is the industry's first frontier-level open source AI model. Llama 3.1 runs seamlessly on MI300 accelerators, and because of our leadership memory capacity, we're also able to run the FP16 version of the Llama 3.1 405B model in a single server, simplifying deployment and fine-tuning of the industry-leading model and providing significant TCO advantages.

What Lisa (appropriately) left out of the call is the punchline: thanks to MI300X’s large memory capacity, running Llama 3.1 405B FP16 only requires a single server with 8 MI300Xs, compared to two servers (16 H100s) on the Nvidia platform.

The Next Platform illustrates:

Of course, AMD gets to compare backward against H100 right now. Hopper’s H200 will have enough memory to fit this model in a single 8 GPU server, and Blackwell will have even more memory and way more FLOPs.
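The single-server claim comes down to simple arithmetic. The sketch below counts model weights only (405 billion parameters at 2 bytes each in FP16) and assumes 8 GPUs per server with per-GPU HBM of 192 GB (MI300X), 80 GB (H100 SXM), and 141 GB (H200), taken from public spec sheets. It ignores the KV cache, activations, and runtime overhead, all of which add to the real requirement, so treat the result as a lower bound rather than a deployment plan.

```python
import math

# Assumed per-GPU HBM capacity in decimal GB, from public spec sheets.
HBM_GB = {"MI300X": 192, "H100": 80, "H200": 141}
GPUS_PER_SERVER = 8  # assumption: a typical 8-GPU server


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weights-only memory: (params_billion * 1e9 * bytes) / 1e9 bytes per GB."""
    return params_billion * bytes_per_param


def servers_needed(gpu: str, params_billion: float = 405, bytes_per_param: float = 2) -> int:
    """Smallest number of 8-GPU servers whose pooled HBM holds the weights.

    Lower bound only: ignores KV cache, activations, and runtime overhead.
    """
    per_server_gb = HBM_GB[gpu] * GPUS_PER_SERVER
    return math.ceil(weights_gb(params_billion, bytes_per_param) / per_server_gb)


need = weights_gb(405, 2)
print(f"Llama 3.1 405B FP16 weights: ~{need:.0f} GB")
for gpu, gb in HBM_GB.items():
    print(f"{gpu}: {gb * GPUS_PER_SERVER} GB per server -> {servers_needed(gpu)} server(s)")
```

Roughly 810 GB of weights fits in one MI300X server (1,536 GB) but not in one H100 server (640 GB), which matches the two-server (16 H100) comparison; an H200 server (1,128 GB) also clears the bar, which is The Next Platform's point.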

As an aside, Zuck’s AI benevolence continues to help the semiconductor industry; he already saved Groq and can now add an AMD assist to his résumé.

Strategic Update

This earnings call revealed a new priority for AMD’s AI accelerator playbook: reduce customer time to value.

Measure What Matters

AMD is making time to value (TTV) a strategic priority, focusing on how quickly customers can experience the benefits of their investment.

The faster customers can move from purchase order to production inference, the more successful AMD's Instinct roadmap will be.

Why is time to value a priority for Instinct but not for EPYC? The average enterprise has no experience deploying generative AI, let alone creating value from it. The benefits of the underlying AI accelerator infrastructure risk being overshadowed by enterprises’ failure to deploy Gen AI quickly and realize ROI.

What can AMD do to hold customers’ hands as they stand up new infrastructure and deploy Gen AI, possibly for the first time? How can AMD ensure the customer’s software runs on ROCm as smoothly as it would on CUDA? Most importantly, how might AMD help customers uncover Gen AI value creation opportunities?

AMD has an active role to play in its customers’ success, and this sounds familiar… a lot like… consultants.

From NYT’s The A.I. Boom Has an Unlikely Early Winner: Wonky Consultants,

The next big boom in tech is a long-awaited gift for wonky consultants. From Boston Consulting Group and McKinsey & Company to IBM and Accenture, sales are growing and hiring is on the rise because companies are in desperate need of technology Sherpas who can help them figure out what generative A.I. means and how it can help their businesses.

AMD needs to build out a consulting group, not just with MBAs but with deeply technical sales engineers who are AI experts.

And that’s exactly what AMD announced.

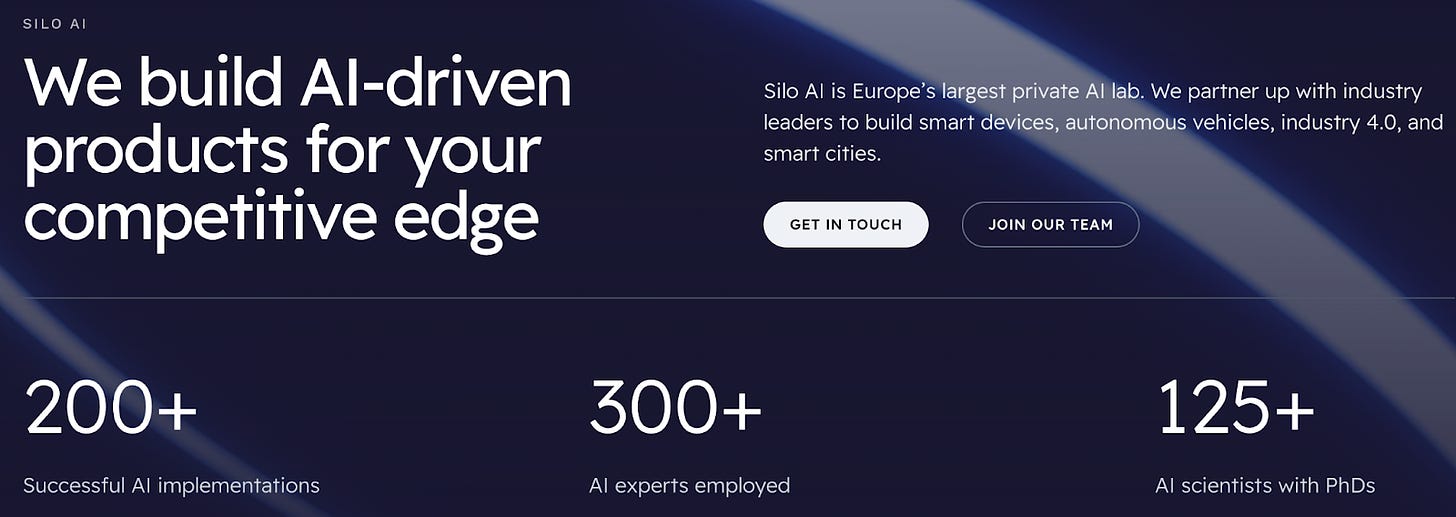

Silo AI acquisition

Silo AI’s website trumpets their 125+ PhDs and 300+ AI experts with 200+ successful AI implementations.

That sounds like a lot of experienced sales engineers to me.

Lisa explained during her opening remarks,

Earlier this month, we announced our agreement to acquire Silo AI, Europe's largest private AI lab with extensive experience developing tailored AI solutions for multiple enterprise and embedded customers, including Allianz, Ericsson, Finnair, Korber, Nokia, Philips, T-Mobile, and Unilever.

The Silo team significantly expands our capability to service large enterprise customers looking to optimize their AI solutions for AMD hardware.

Silo also brings deep expertise in large language model development, which will help accelerate optimization of AMD inference and training solutions.

Not only is AMD acquiring talent — they’re also acquiring a book of business. These are exactly the types of customers to whom AMD wants to sell the Instinct roadmap.

Lisa expanded further in the Q&A,

Blayne Curtis

What is the biggest hurdle for you to get that next wave of customers to ramp?

Lisa Su

… What we look at is out of box performance, how long does it take a customer to get up and running on MI300? And we've seen, depending on the software that companies are using, particularly if you are based on some of the higher-level frameworks like PyTorch, et cetera, we can be out-of-the-box running very well in a very short amount of time, like let's call it, very small number of weeks. And that's great because that's expanding the overall portfolio. We’re going to continue to invest in software and that was one of the reasons that we did the Silo AI acquisition. It is a great acquisition for us, 300 scientists and engineers. These are engineers that have experience with AMD hardware and are very, very good at helping customers get up and running on AMD hardware. And that's -- so we view this as the opportunity to expand the customer base with talent like Silo AI, like Nod.ai which brought a lot of compiler talent. And then we continue to hire quite a bit organically.

Notice how Lisa emphasized that higher-level software abstractions are important for quickly onboarding customers and reducing time to value.

AMD’s other recent acquisitions (Nod.ai and Mipsology) also align with reducing enterprise time to value.

Updating AMD’s Playbook

We can update AMD’s AI accelerator strategy accordingly:

expand customer choice

compete on price/performance

deliver a consistent roadmap

reduce enterprise time to value

minimize developer switching costs

leverage AI PhD sales engineers as consultants

Follow Up Thoughts and Questions

A few other things that were said or left unsaid gave me pause.

Training

One lingering question: is anyone meaningfully training with MI300X?

Microsoft’s workloads are inference. The Llama 3.1 win is inference. In fact, pretty much all public MI300X examples are inference.

Lisa even says as much on the call.

Joe Moore: I wonder if you could talk about training versus inference.

Do you have a sense -- I know that a lot of the initial focus was inference, but do you have traction on the training side? And any sense of what that split may look like over time?

Lisa Su: As we said on MI300, there are lots of great characteristics about it. One of it -- one of them is our memory bandwidth and memory capacity is leading the industry.

From that standpoint, the early deployments have largely been inference in most cases, and we've seen fantastic performance from an inference standpoint.

We also have customers that are doing training. We've also seen that from a training standpoint, we've optimized quite a bit our ROCm software stack to make it easier for people to train on AMD. And I do expect that we'll continue to ramp training over time.

The belief is that inference will be larger than training from a market standpoint. But from an AMD standpoint, I would expect both inference and training to be growth opportunities for us.

They have customers doing training, but what does that mean?

After all, training has more demanding software and networking requirements compared to inference.

Are we talking about thousands of MI300X all wired together, training large LLMs for weeks on end? Or something much simpler?

Could it be that no customers are willing to attempt large-scale training with MI300X yet? Maybe because ROCm is still improving?

This could be a concern, as training seems to be what’s been selling massive volumes of H100s to date. On the other hand, I agree with Lisa that the market will be driven by inference in the long run, and inference is an easier place to compete, so it’s still strategically sound for AMD to focus on inference right now.

That said, AMD can still own their destiny here. If customers are afraid of being the first guinea pig, AMD should incentivize them.

For example, AMD Ventures’ portfolio company Lamini already offers lightweight fine-tuning on AMD MI250. Could it make the leap to large-scale training on MI300X? That could be a proof-of-concept project for marketing publicity, with AMD and Silo AI doing some of the heavy software lifting and providing the hardware. Another portfolio company, Hugging Face, already does this type of marketing project and would be another great guinea pig.

System-Level Solutions and Networking

It’s smart that AMD recognizes that enterprise time to value is important, and AMD is clearly investing in the software and people needed to execute this priority.

Yet AMD's customers need more than chips and software — they need systems. Networking, power, and cooling matter to system performance. But AMD has been much less vocal in these areas, and Q2 was no different.

Surely, helping customers piece together the end-to-end system is an important part of reducing time-to-value?

Publicly available information about AMD’s specific network implementations and cost/performance trade-offs remains sparse. Yet its networking arsenal includes a wide array of components and know-how, including Infinity Fabric, Pensando DPUs, Ethernet, and SerDes. There are a lot of details AMD could provide to speed up data center system design.

Maybe AMD is quiet publicly, but shares this information privately?

They’ve also been vocal supporters of related industry standards like the Ultra Ethernet Consortium and UALink, but those are long-term plays.

On the earnings call, we got a hint that we, the public, might hear more from AMD on this front:

Harsh Kumar: From my rudimentary understanding, the large difference between your Instinct products and the adoption versus your nearest competitor is kind of rack level performance and that rack level infrastructure that you may be lacking. You talked a little bit about UALink. I was wondering if you could expand on that and give us some more color on when that might -- when that gap might be closed. Or is this a major step for the industry to close that gap? Just any color would be appreciated.

Lisa Su: If I take a step back and just talk about how the systems are evolving, there is no question that the systems are getting more complex, especially as you go into large training clusters, and our customers need help to put those together. And that includes the sort of Infinity Fabric-type solutions that are the basis for the UALink things as well as just general rack level system integration.

First of all, we're very pleased with all of the partners that have come together for UALink. We think that's an important capability. But we have all of the pieces of this already within the AMD umbrella with our Infinity Fabric, with the work with our networking capability through the acquisition of Pensando.

You'll see us invest more in this area. So this is part of how we help customers get to market faster is by investing in all of the components, so the CPUs, the GPUs, the networking capability as well as system-level solutions.

Supply

The earnings call contained some comments about constrained supply, which I found a bit hard to interpret.

Toshiya Hari: Are you currently shipping to demand or is the updated annual forecast of $4.5 billion is in some shape or form supply constrained? I think last quarter you gave some comments on HBM and CoWoS.

Lisa Su: On the supply side we made great progress in the second quarter. We ramped up supply significantly exceeding $1 billion in the quarter. I think the team has executed really well. We continue to see line of sight to continue increasing supply as we go through the second half of the year. But I will say that the overall supply chain is tight and will remain tight through 2025.

So, under that backdrop, we have great partnerships across the supply chain. We've been building additional capacity and capability there. And so, we expect to continue to ramp as we go through the year.

And we'll continue to work both supply as well as demand opportunities, and really that's accelerating our customer adoption overall, and we'll see how things play out as we go into the second half of this year.

Is this just a gentle reminder that supply chain issues persist, potentially hindering adoption? Or are there underlying concerns Lisa is signaling?

APUs

I was hoping we’d hear a success story involving AMD's MI300A this quarter, as MI300A has architectural advantages over Nvidia's Grace Hopper.

However, AMD's announcements regarding MI300A have been limited. We really only hear about its use in supercomputers by national labs. To be fair, Nvidia hasn't disclosed enterprise examples of Grace Hopper either.

Timothy Prickett Morgan sums it up in AMD’s Long And Winding Road To The Hybrid CPU-GPU Instinct MI300A:

What we want to know is whether companies will want to buy MI300A servers or use discrete Epyc CPUs paired to the discrete MI300X GPUs.

We want to know how MI300A compares in terms of price, price/performance, and thermals with a Grace-Hopper or Grace-Blackwell.

Other Businesses

AMD is more than the data center business, so let’s unpack the earnings call for those segments, too.

Don’t Forget About Client!

AMD’s client business had a great quarter. As a reminder, the client segment encompasses CPUs, APUs, and chipsets for laptops and desktops.

Client segment revenue was $1.5 billion, up 49% year over year and 9% sequentially, driven primarily by AMD Ryzen processor sales.

The gains were driven by an increase in units and an increase in average selling price, as noted in the 10Q:

The increase in both periods was driven by a recovery from weak PC market conditions and inventory corrections across the PC supply chain experienced in the first half of fiscal year 2023.

Lisa said the client business expects a good rest of 2024 thanks to back-to-school and holiday seasonality. I’m not sure if this is a continued market recovery or if AMD will outperform the competition. Lisa suggested the latter, crediting upcoming launches and launch timing.

Ross Seymore: Lisa, can you talk about the AI PC side of things, how you believe AMD is positioned? Are you seeing any competitive intensity changing with the emergence of Arm-based systems? Just wanted to see how you're expecting that to roll out and what it means to second-half seasonality.

Lisa Su: … As we go into the second half of the year, I think we have better seasonality in general, and we think we can do, let's call it, above-typical seasonality, given the strength of our product launches and when we're launching.

Lisa also made the point that partners will bring AMD-powered offerings to market across a broad range of price points, from entry-level to high-end, which should also drive revenue as those devices reach a wider customer base.

And then into 2025, you're going to see AI PCs across sort of a larger set of price points, which will also open up more opportunities. So, overall, I would say the PC market is a good revenue growth opportunity for us. The business is performing well. The products are strong.

Maybe it’s surprising that AMD can gain share in client, especially with the recent entrance of Windows on Arm with Qualcomm’s Snapdragon-powered laptops.

But Lisa doesn’t seem fazed:

Ross Seymore: And is the Arm side changing anything or not really?

Lisa Su: I think at this point, the PC market is a big market, and we are underrepresented in the market. I would say that we take all of our competition very seriously. That being the case, I think our products are very well-positioned.

Arm sees it differently, claiming it will be best positioned across all price points thanks to the thing customers care deeply about: battery life.

From Arm’s Q1 FY25 earnings call,

Charles Shi: Just want to use this opportunity to ask you about your comments you made at Computex in terms of the 50% PC market share in five years. My understanding, we already have Arm-based PCs already, right? The Mac is one, Chromebook another. Some of your peers, I mean, the x86 peers seems to disagree with what you said about that 50% market share in five years. And they basically argue whether you have a good AI PC or not, really is not ISA dependent. And wondering what's your response here. Can you provide a little bit more thoughts on why this time is different, why Arm can really take the market share up to 50% in a short period of time? Thanks.

Rene Haas: Yes, I would be a little worried if they agreed with my comment that we're going to take 50% in five years. So, the base of the question is, why is this time different?

One of them, Apple's operating system and their environment has moved over 100%. And that is obviously a pretty significant indication of the value proposition that they get.

I think what's changed this time in the Windows ecosystem, there's a number of things that have changed. First is that the products that are out today are using the most advanced Arm technology. They are optimized with Microsoft for the most effective battery life on the planet.

… So, when you combine a supply base that has multiple vendors, also incredible battery life, and then no-compromise performance, I don't see really any reason why what happened in the Mac ecosystem can't happen in the Windows ecosystem.

It will be interesting to see AMD and Arm compete head-on in client over the coming years. Of course, Intel needs to compete there, too, very badly, but we won’t go there today.

What’s Gaming’s Playbook?

Gaming had a rough quarter.

Gaming segment revenue was $648 million down 59% year-over-year and 30% sequentially. The decrease in revenue was primarily due to semi-custom inventory digestion and the lower end-market demand.

Semi-custom demand remains soft as we are now in the fifth year of the console cycle, and we expect sales to be lower in the second half of the year compared to the first half.

The gaming segment consists of two main businesses: discrete GPUs (for desktops and laptops) and semi-custom products (PlayStation 5 and Microsoft Xbox Series S and X).

Semi-custom used to be more of a lifeline for the company, but with the success of the data center and client businesses, AMD is less dependent on this revenue stream.

What should AMD do with a struggling semi-custom business that has little control over its destiny (i.e., is dependent on the customer’s product refresh cycle)? I’m trusting they are already working on the next products.

I’m on the fence about this business. It doesn’t seem like AMD needs semi-custom, and it probably reduces overall margins. On the other hand, it still generates profit and will continue to do so even as demand tapers until the next product refresh.

As long as semi-custom is not a distraction for AMD and continues to generate profit that can be reinvested elsewhere in the company, I guess it’s a net benefit?

Embedded… Might be OK?

From AMD’s Q1 earnings call back in April,

Turning to our embedded segment. Revenue decreased 46% year over year and 20% sequentially to $846 million as customers remain focused on normalizing their inventory levels.

Given the current embedded market conditions, we're now expecting second-quarter embedded segment revenue to be flat sequentially with a gradual recovery in the second half of the year.

And in this quarter

Embedded segment revenue was $861 million, down 41% year-over-year, as customers continue to normalize their inventory levels.

Embedded struggled, but AMD told us it would.
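A quick check of that guidance, using only the figures quoted above: Q1 embedded revenue of $846 million and Q2 revenue of $861 million. The year-ago values backed out of the reported YoY declines are approximate, because the published percentages are rounded.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


def implied_prior(current: float, yoy_pct: float) -> float:
    """Back out the year-ago value from a current value and a YoY % change.

    Approximate: reported percentages are rounded.
    """
    return current / (1 + yoy_pct / 100)


q1, q2 = 846, 861  # embedded segment revenue in $M, from the calls quoted above

seq = pct_change(q1, q2)
print(f"Sequential change: {seq:+.1f}%")

print(f"Implied Q1 2023: ~${implied_prior(q1, -46):,.0f}M")
print(f"Implied Q2 2023: ~${implied_prior(q2, -41):,.0f}M")
```

A sequential move of under 2% is consistent with the "flat sequentially" guidance, even though the segment remains well below its implied year-ago level of roughly $1.46 billion.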

To be fair, everyone struggled with embedded. Intel reported Altera’s quarterly revenue at $361M, down 57% YoY. Lattice (lower-end FPGAs) was down 35% YoY “as customers continue to reduce their inventory levels.”

When these boom-bust cycles play out in embedded markets like automotive and industrial, it’s hard to get a sense of who is actually losing market share, as the broad lack of demand hides it. Everyone was down, but who was down more than expected?

Looking Forward

What should we watch for over the next quarter or two?

The big question is how MI300X demand withstands H200.

The timing of Blackwell and MI325X also matters.

I’d love to see if AMD’s new emphasis on time to value plays out, ideally with success stories from faster enterprise onboarding boosted by Silo AI talent. These things take time, especially with an acquisition, so I doubt we’ll hear anything in the next quarter or two.

Will AMD share more system-level and networking details? Nvidia is expanding beyond InfiniBand to Ethernet, so it is playing the choice card too. How will AMD respond?

Will we hear details of large-scale training on MI300s? If not from AMD directly, could we see this level of detail from a startup on AMD infrastructure?

I’d love to see an industry use case of MI300A and bonus points if it’s not super niche.

Most importantly, let’s see if AMD can deliver on its roadmap. No mistakes!