Apple is the best company to bring about mass adoption of AI-enabled experiences — should they rise to the occasion. Let’s study why Apple can win, what hurdles they must overcome, and possible next steps.

A Trip Through Time

The Future

First, let’s picture what a generative AI future could look like. Don’t worry about the specifics of the examples; they aren’t meant to showcase any particular “killer app” but simply to demonstrate the underlying principles of what AI can do for us and why Apple can win.

Imagine a world where every consumer device — phones, glasses, watches, automobiles (consumer devices now, right?) — can run gen AI locally.

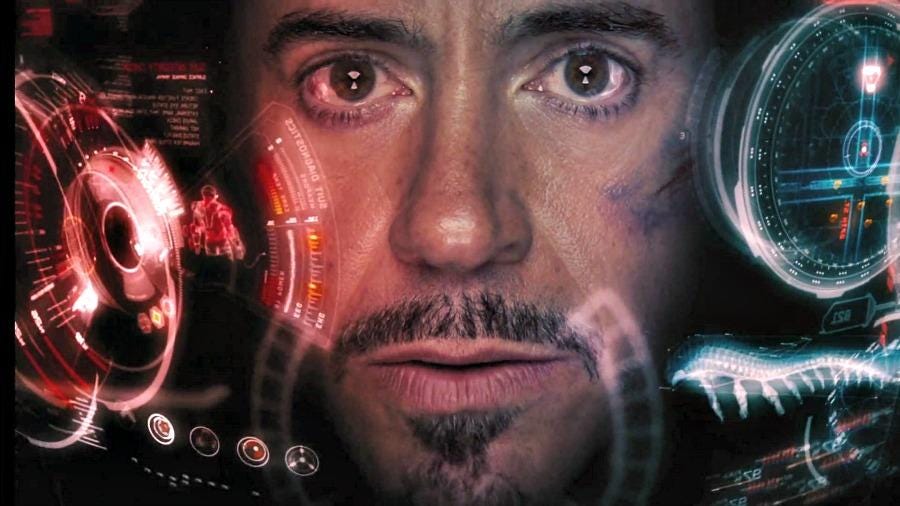

Let’s start high-level with our thought experiment. Imagine Jarvis from Iron Man — a helpful assistant you can call on anytime, regardless of form factor. Just talk to Jarvis from your car, phone, watch, earbuds, … or perhaps your exoskeleton helmet.

Remember that Jarvis is an “AI agent” and will do work on Tony’s behalf.

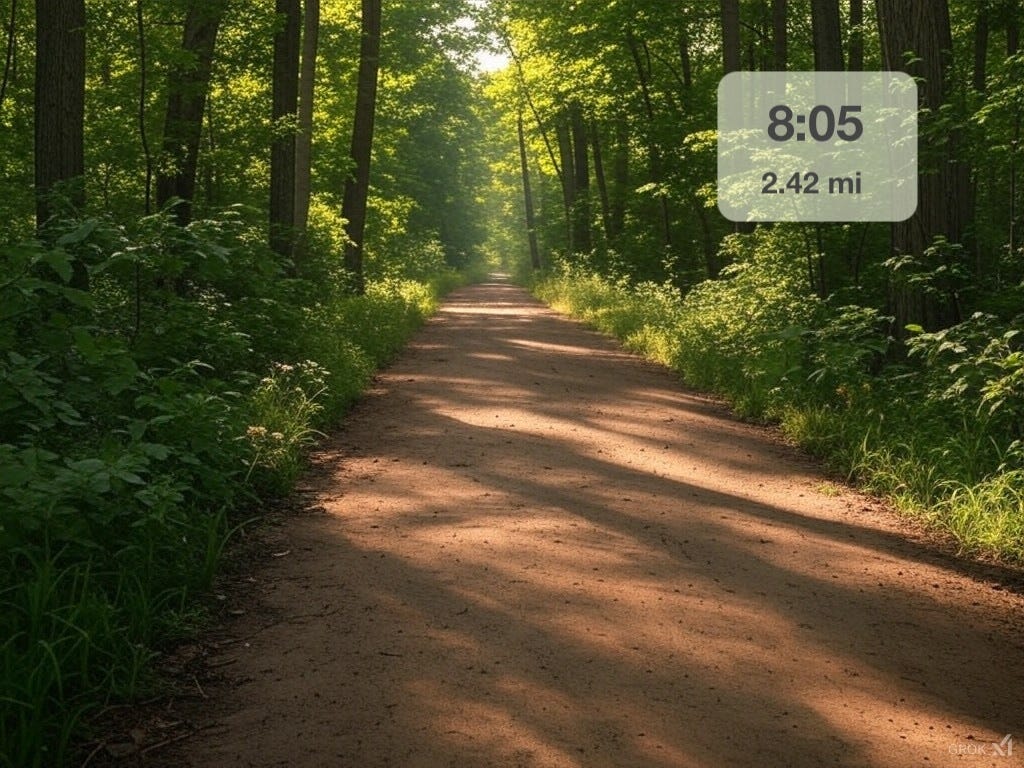

Of course, Tony Stark, as our thought experiment's centerpiece, isn’t very realistic (unless you’re Elon). So I’ll use myself as an example. Specifically, we’ll focus on my love of long-distance running. Thus, my eccentric version of an exciting consumer AI future is one where Jarvis can manage my watch and playlist:

Jarvis, let’s go on an 8-mile run. I’m going on a 2-mile warmup, then 4 miles at 6:40 min/mile, then a 2-mile cool-down. During those 4 miles, I’d like you to verbally notify me if I’m falling off the pace. Let’s also fire up Spotify — please play the most recent Stratechery podcast during my warmup and then switch to my upbeat songs for the harder 4-mile effort.

Without AI, I can accomplish the same outcome today, but with much more effort and time. I manually program my run into the Garmin app on my phone and then sync it over Bluetooth to my watch. Next, I curate a Spotify playlist on my phone, then have my Garmin watch connect to my home Wi-Fi to sync with my Spotify account 😂😭. It takes tens of taps across the Garmin and Spotify iPhone apps and several minutes to accomplish. The ends justify the means for me, but it doesn’t have to be this cumbersome!

Why is it so painful today?

Touch is a natural way for humans to control machines; we pointed and clicked in the early days, and now we tap and scroll.

However, these touch interfaces require a programmer to create them first, which means the consumer is constrained by the functionality and user experience the programmer enables.

Moreover, the smaller the form factor, the harder this becomes. Many app developers recognize this and force users to configure the app using the phone’s larger screen.

Even the well-designed Nike Run Club app is hard to manipulate when I’m dripping sweat during a run. I tried over and over to use Siri instead, but she’s not Jarvis (yet) and doesn’t seem capable of, or interested in, helping; more likely, I don’t know the magic keyword incantation.

Sidebar: When I meet my kids’ teachers at the start of the year, they often say, “I recognize you—I see you out running all the time!” And I’m mortified because they probably saw me running with my wrist held near my mouth shouting, “HEY SIRI NEXT SONG! HEYYYY SIIIRI…. DANGIT SIRI. OH HEY!? SIRI? YES! SKIP! NEXT! … SIRI?”

I’ve given up on my Apple Watch while running and am back to my barebones Garmin watch. No touchscreen. No voice control. No texts. Only a simple UI and some buttons; imperfect, but more usable than the Apple Watch.

Lessons

What broader insights does this use case demonstrate?

Generative AI as Programming with Natural Language

In a GenAI world, I can spend less time fiddling and more time running.

I can just speak instructions. I don’t need to use phone apps to program my watch.

Generative AI is computer programming using natural language.
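To make that concrete, here is a minimal sketch of what the spoken run request above might “compile” into: a list of workout segments plus the “tell me if I’m falling off the pace” rule. Every name here (`Segment`, `WORKOUT`, `segment_at`, `pace_alert`) is hypothetical and for illustration only; it is not a Garmin, Spotify, or Apple API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical structure for illustration; not any vendor's workout API.
@dataclass
class Segment:
    name: str
    miles: float
    target_pace_sec: Optional[int] = None  # seconds per mile; None = no pace target
    audio: str = ""                         # what to play during this segment

def parse_pace(pace: str) -> int:
    """Convert an 'M:SS' min/mile string into seconds per mile."""
    minutes, seconds = pace.split(":")
    return int(minutes) * 60 + int(seconds)

# "2-mile warmup, then 4 miles at 6:40 min/mile, then a 2-mile cool-down"
WORKOUT = [
    Segment("warmup", 2.0, audio="latest Stratechery podcast"),
    Segment("tempo", 4.0, target_pace_sec=parse_pace("6:40"), audio="upbeat songs"),
    Segment("cooldown", 2.0),
]

def segment_at(distance_miles: float) -> Segment:
    """Return the segment the runner is in at a given elapsed distance."""
    start = 0.0
    for seg in WORKOUT:
        if distance_miles < start + seg.miles:
            return seg
        start += seg.miles
    return WORKOUT[-1]  # past the planned distance: stay in the last segment

def pace_alert(distance_miles: float, current_pace_sec: int) -> Optional[str]:
    """Return a spoken alert if the runner is slower than the segment's target."""
    seg = segment_at(distance_miles)
    if seg.target_pace_sec is not None and current_pace_sec > seg.target_pace_sec:
        behind = current_pace_sec - seg.target_pace_sec
        return f"You're {behind} seconds per mile off your {seg.name} pace."
    return None
```

For example, `pace_alert(3.0, parse_pace("6:50"))` falls in the tempo segment and reports being 10 seconds per mile slow, while any pace during the warmup returns `None`. Today a programmer writes logic like this once and I configure it through their UI; the promise is that an assistant could generate the equivalent from a sentence.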

I can even break free of hard-coded user interfaces to generate the interface I want on the fly:

Hey Jarvis, please display “elapsed distance” in big, bold font during my warmup. Once I start that hard 4 miles, can you switch to showing my current pace in the top two-thirds of the screen and put the elapsed distance at the bottom?
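Likewise, that screen request could become a small layout spec the watch renders per phase of the run. A minimal sketch, assuming a hypothetical `LAYOUTS` table keyed by segment name and a screen measured in pixels; no real watch UI framework is implied.

```python
from typing import Dict, List, Tuple

# Hypothetical spec an assistant might generate from the spoken request.
# Each row: (field to show, fraction of screen height, bold?)
LAYOUTS: Dict[str, List[Tuple[str, float, bool]]] = {
    "warmup": [("elapsed_distance", 1.0, True)],
    "tempo": [("current_pace", 2 / 3, True), ("elapsed_distance", 1 / 3, False)],
}

def render_regions(phase: str, screen_height_px: int) -> List[Tuple[str, int, int, bool]]:
    """Convert a phase's layout into (field, top_px, height_px, bold) regions, top to bottom."""
    # Assumption: phases the request didn't mention (e.g. cooldown) reuse the warmup layout.
    rows = LAYOUTS.get(phase, LAYOUTS["warmup"])
    regions = []
    top = 0
    for field, fraction, bold in rows:
        height = round(fraction * screen_height_px)
        regions.append((field, top, height, bold))
        top += height
    return regions
```

On a 300-pixel-tall screen, the tempo phase yields the pace in the top 200 pixels and the distance in the bottom 100.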

Software is supposed to be endlessly configurable; in theory, we can all tailor our experiences to our exact needs. It’s just software! But in reality, we have to live within the confines of whatever user interface and controls we’re given.

But we shouldn’t accept that!

Quite often, we’re more of a domain expert than the UI designer on the other end of the device. What if we could have it our way?

Software That Adapts to You, Not the Other Way Around

Generative AI promises a future that’s more adaptive to users’ specific needs.

We can choose the best form factor and modality for the task at hand.

Why should I look at a screen on my wrist while running? Why should I have to push buttons on that wrist screen while running?

I should be keeping my eyes up. I should just use voice to interact with my device.

Why not wear running sunglasses with augmented reality so I can see my pace and distance within my current field of view? And put Jarvis in there to give me directions or keep me company?

Our pocket screens have liberated us from our desks, yet in our new freedom, they continue to shackle us. Just go for a drive and notice how many motorists are staring at their laps.

With multimodal assistants that truly understand our context and can follow arbitrary instructions, we can stick with whatever input modality and form factor is most helpful for a given situation.

By the way, yesterday Mark Gurman hinted that AR functionality for runners like me could be on the horizon from Meta. From Bloomberg:

Meta is broadening its smart glasses technology to other fashion brands owned by partner Luxottica Group SA. That includes a new version — dubbed “Supernova 2” — that is based on Oakley’s Sphaera glasses. This model, which shifts the camera to the center of the glasses frame, will be aimed at cyclists and other athletes.

The biggest upgrade this year will be a new higher-end offering that uses a design that’s closer to the current Ray-Ban glasses. Code-named “Hypernova,” this model will include a display on the bottom portion of the right lens that projects information into a user’s field of view. People would be able to run simple software apps, view notifications and see photos taken by the device — capabilities that get a bit closer to the long-promised AR experience.

We Can Just Do Things

I know, this specific running example probably doesn’t resonate with you. Dude, Austin, just run.

But you can probably relate in some other way. Almost everyone has a unique interest they can go on and on about, along with shortcomings they’d like to fix so they could spend more time doing the thing they love and less time fussing with the nonsense constraints forced upon them.

What if you had a Jarvis and could work around those constraints to build a better solution to your problems? If not you, why not your kids?

What if they could just poke life, and something would pop out the other side?

Steve Jobs: The thing I would say is, when you grow up, you tend to get told that the world is the way it is, and your life is just to live your life inside the world. Try not to bash into the walls too much. Try to have a nice family life, have fun, save a little money. But life, that's a very limited life.

Life can be much broader once you discover one simple fact, and that is: Everything around you that you call life was made up by people that were no smarter than you.

And you can change it. You can influence it. You can build your own things that other people can use.

And the minute that you understand that you can poke life, and actually something will — if you push in, something will pop out the other side.

You can change it. You can mold it.

That's maybe the most important thing is to shake off this erroneous notion that life is there and you're just going to live in it, versus embrace it.

Change it. Improve it. Make your mark upon it.

I think that's very important. And however you learn that, once you learn it, you'll want to change life and make it better. Because it's kind of messed up in a lot of ways. Once you learn that, you'll never be the same again.

With generative AI, it’s easier to build than ever before, so we can help more of the next generation change the world, faster.

Apple can make this possible.

Enter Apple

Over a billion people have iPhones. Hundreds of millions have Macs, iPads, or AirPods. Tens of millions have Apple Watches. And many millions own several of these.

Agent Siri could come with us from home to the office, from the car to the gym and grocery store. But a truly helpful Siri is just one tiny slice of what consumer-device generative AI could unlock. There are countless innovations waiting to be birthed by AI developers for consumers.

And who already has the most talented and creative developer ecosystem? Apple.

Apple is the company who can make this future — nay, a massively better future than I can possibly describe — if they want to.

They have the right ingredients, and they’ve had them since their earliest days.

Apple Is Built for This Moment

Generative AI on consumer devices isn’t just about hardware or software—it’s a full-stack challenge. It’s not enough to make powerful hardware if the software isn’t great. It’s not enough if the software works but the user experience has friction.

Said another way, we’re pushing past hardware-software codesign and into end-to-end integration of product design, AI models, app software, and efficient hardware. This is Nvidia-style systems thinking, but applied to consumer devices.

Only companies that care about the entire experience, from hardware and software to digital and industrial design, will succeed in consumer device AI.

That’s Apple, to the core. Walter Isaacson illustrates this for us via his wonderful Steve Jobs biography.

From hardware / software co-design:

He believed that for a computer to be truly great, its hardware and its software had to be tightly linked. The best products, he believed, were “whole widgets” that were designed end-to-end, with the software closely tailored to the hardware and vice versa.

To industrial design:

He [Jobs] repeatedly emphasized that Apple’s products would be clean and simple. “We will make them bright and pure and honest about being high-tech, rather than a heavy industrial look of black, black, black, like Sony,” he preached. “So that’s our approach. Very pure, very simple, and we’re really shooting for Museum of Modern Art quality.”

To digital experience:

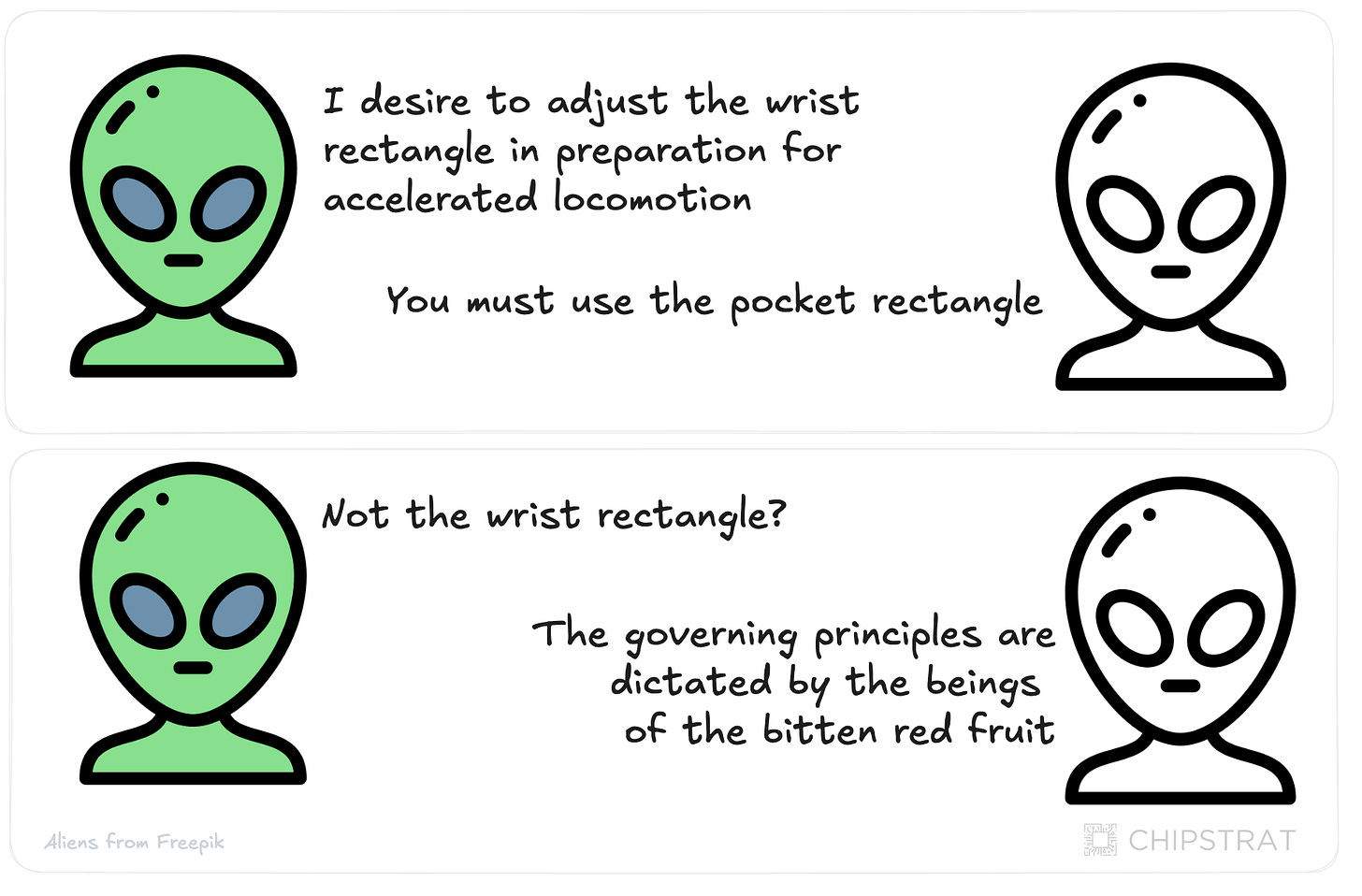

Jobs obsessed with equal intensity about the look of what would appear on the screen. One day Bill Atkinson burst into Texaco Towers all excited. He had just come up with a brilliant algorithm that could draw circles and ovals onscreen quickly. Hertzfeld recalled that when Atkinson fired up his demo, everyone was impressed except Jobs. “Well, circles and ovals are good,” he said, “but how about drawing rectangles with rounded corners?”

“I don’t think we really need it,” said Atkinson.

“Rectangles with rounded corners are everywhere!” Jobs said, jumping up and getting more intense. “Just look around this room!” He pointed out the whiteboard and the tabletop and other objects that were rectangular with rounded corners. “And look outside, there’s even more, practically everywhere you look!”

He dragged Atkinson out for a walk, pointing out car windows and billboards and street signs. “Within three blocks, we found seventeen examples,” said Jobs. “I started pointing them out everywhere until he was completely convinced.”

“When he finally got to a No Parking sign, I said, ‘Okay, you’re right, I give up. We need to have a rounded-corner rectangle as a primitive!’” Hertzfeld recalled.

Bill returned to Texaco Towers the following afternoon, with a big smile on his face. His demo was now drawing rectangles with beautifully rounded corners blisteringly fast. The dialogue boxes and windows on the Lisa and the Mac, and almost every other subsequent computer, ended up being rendered with rounded corners.

This “whole-widgets” approach, from hardware and software to design, is embedded deep within Apple to this day. We can hear it in interviews with Apple executives, for example in this conversation with Tim Millet (VP of Platform Architecture) and Tom Boger (VP of iPad and Mac Product Marketing).

When Ben Bajarin asks why Apple prioritizes hardware with an in-house Apple Silicon team, their response is telling:

Tim Millet: The way we think about it from the silicon side is that we are a perfect complement to the Apple’s values.

If you look at our products on the outside, they have beautiful form factors.

Why does that matter? It matters because when people see them, they want to hold them. They want to interact with them and touch them in the different ways that the products support.

They don't want to have a short battery life experience. They don't want to have a Mac with loud fans. They don't want to have uncomfortable thermal. They don't want a laptop that burns them as they put it on their lap.

How do you merge this vision of a beautiful form factor and a user experience with the performance that drives the software to enable that magical experience?

It's the computer that delivers the horsepower. When you design it with a focus on energy efficiency, it’s perfectly in concert with the other tenets that make Apple products so beloved by their customers.

Tom Boger: To your point, Ben, it's the ultimate example or culmination of that tight integration.

Tim and his team works with our various software teams that are thinking about what we're going to be doing two, three, four years from now. We make sure that our silicon can support the ambitions that our software and design teams have.

As we're envisioning new technologies across the company, whether it be in hardware or software like Apple Intelligence, we have to make sure that we're designing for the future now.

From day one, Apple’s core philosophy has been about perfecting all the details on behalf of customers.

That ethos drives everything: performance-per-watt silicon, OS optimization, app-layer polish, digital and industrial design. Apple’s products embody an end-to-end integration unmatched in the industry.

And this integration is precisely what today’s nascent consumer-device GenAI experiences are missing.

On-Device Tutor

As a very simple demonstration, LLMs are super helpful as a tutor that can explain a paragraph of something I’m reading but struggling to understand. But there’s friction in doing so.

Prior to Apple Intelligence, I’d have to take a screenshot of the paragraph I was reading and had questions about. Next, I would open ChatGPT, attach the image, and ask about it.

Today, I can just ask Siri to “explain this highlighted paragraph to me” and it will shuttle it to and from ChatGPT on my behalf.

Yes, this is a baby step, but it’s progress: Apple is removing friction for me.

But why not use an on-device LLM to remove the remaining friction — privacy and latency? I just want to chat with my teacher quickly and without OpenAI in the room.

To date, a teacher with wide-ranging knowledge is too big to fit within the memory of an iPhone. Given this technical constraint, the current status quo is to use small local models for task-specific applications, leaving general-purpose needs to cloud-based models. That’s why Apple brings ChatGPT into the conversation right now (with your permission).
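The hybrid arrangement described above can be sketched as a simple router: task-specific requests stay on device, and general-purpose questions go to a cloud model only with the user’s permission. This is a toy illustration under assumed task labels (`ON_DEVICE_TASKS` and `route` are hypothetical), not how Apple Intelligence actually dispatches requests.

```python
from dataclasses import dataclass

# Hypothetical task labels a small on-device model might be tuned for.
ON_DEVICE_TASKS = {"summarize", "rewrite", "proofread"}

@dataclass
class Request:
    text: str
    task: str  # hypothetical label, e.g. "summarize" or "general_qa"

def route(req: Request, user_allows_cloud: bool) -> str:
    """Decide where a request runs: 'on_device', 'cloud', or 'declined'."""
    if req.task in ON_DEVICE_TASKS:
        return "on_device"  # private and low latency
    if user_allows_cloud:
        return "cloud"      # general-purpose model, only with permission
    return "declined"       # never send data off device without consent
```

As on-device models grow more capable, the set of tasks that never leave the device can grow with them.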

But we’ll overcome this technical constraint; useful GenAI models will shrink to fit on consumer devices, while memory capacity and bandwidth will expand to hold more model weights. Yes, we’ll always have the biggest and best models, agents, and orchestration layers in the cloud; but on-device general purpose models are coming.

DeepSeek: The Edge AI Future Is Now!

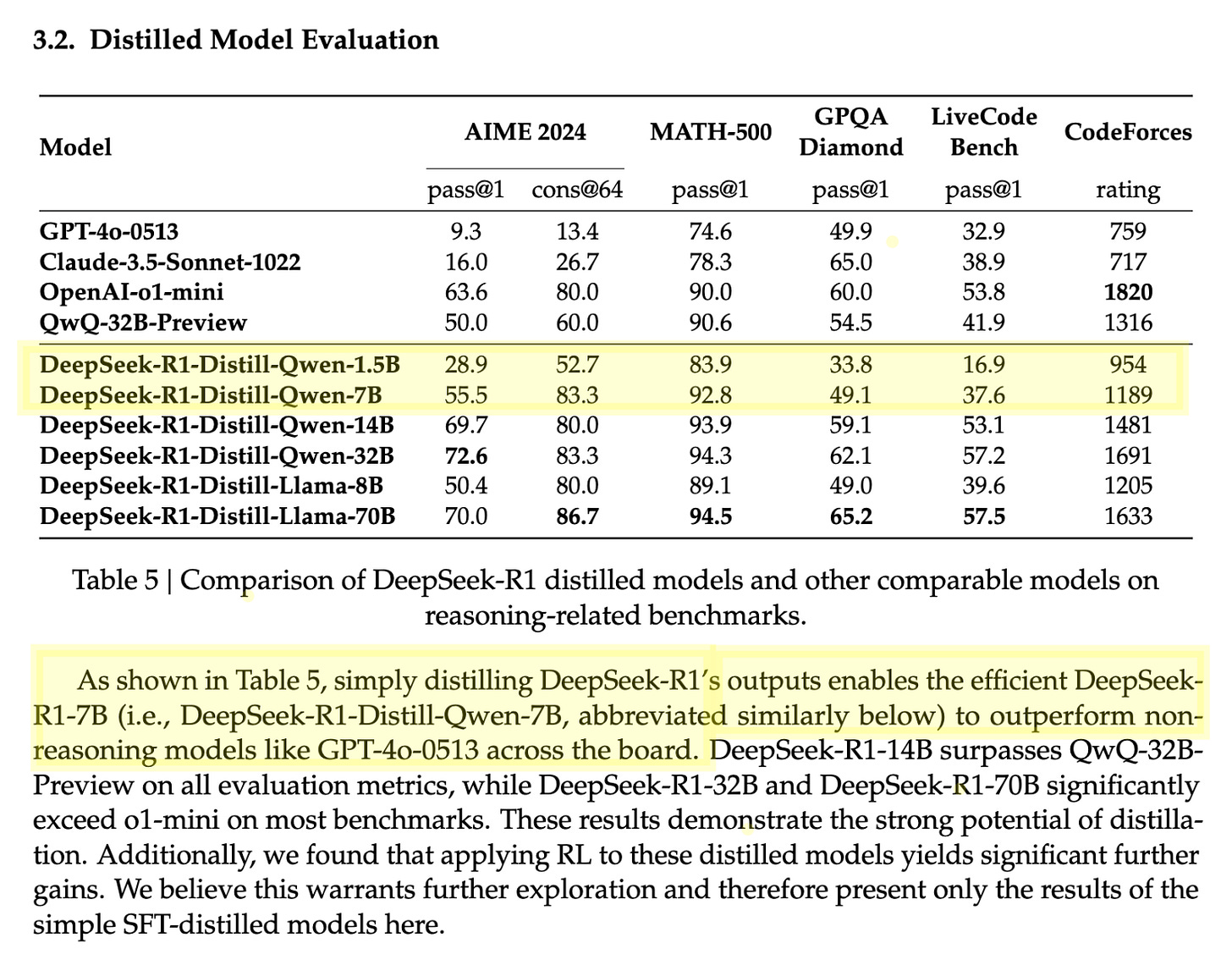

In fact, just a few days ago DeepSeek announced a 1.5B-parameter reasoning model that outperforms non-reasoning models like GPT-4o on math benchmarks! It’s a distilled version of Alibaba’s Qwen, fine-tuned on reasoning data generated by DeepSeek-R1.

This reasoning language model could fit in a few GBs of RAM on an iPhone!
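A quick back-of-the-envelope check on that claim: model weights take roughly parameters × bits-per-weight ÷ 8 bytes. The sketch below counts weights only (no KV cache, activations, or runtime overhead), uses the nominal 1.5B parameter count, and assumes a phone with 8 GB of RAM purely for comparison.

```python
PHONE_RAM_GB = 8.0  # assumption for comparison; varies by iPhone model

def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    params * 1e9 weights * (bits / 8) bytes each, divided by 1e9 bytes per GB.
    Ignores the KV cache, activations, runtime overhead, and RAM the OS needs.
    """
    return params_billions * bits_per_weight / 8

if __name__ == "__main__":
    for params, bits in [(1.5, 16), (1.5, 4), (70, 4)]:
        gb = weight_memory_gb(params, bits)
        fits = "fits" if gb < PHONE_RAM_GB else "does not fit"
        print(f"{params}B params @ {bits}-bit: {gb:.2f} GB ({fits} in {PHONE_RAM_GB:.0f} GB)")
```

A 1.5B model needs about 3 GB at 16-bit and about 0.75 GB at 4-bit, while a 70B model needs about 35 GB even at 4-bit, which is why wide-ranging general-purpose models have lived in the cloud.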

Reasoning models will go a long way in making on-device assistants useful for more agentic tasks.

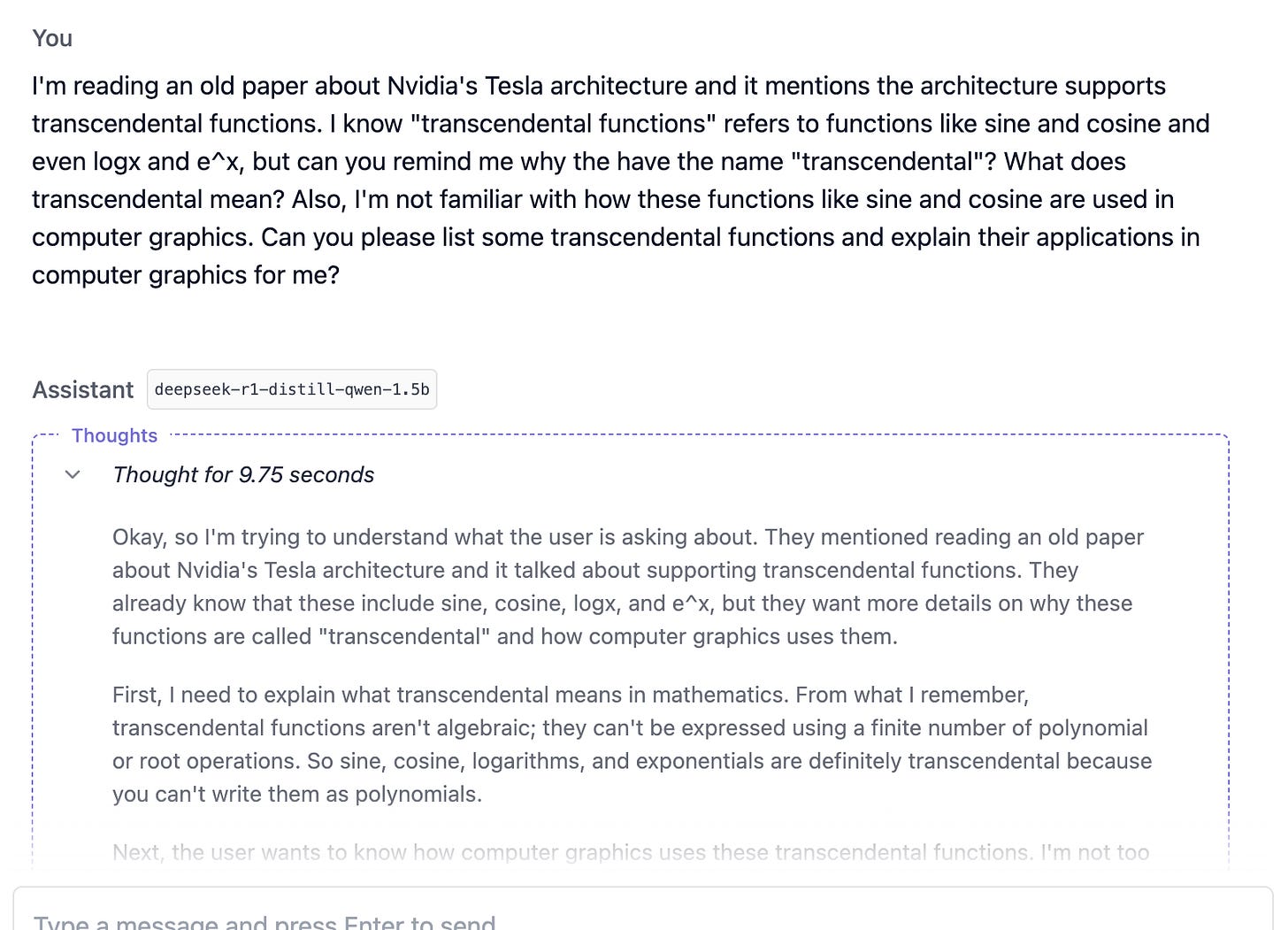

My educational use case doesn’t necessarily need a reasoning model, but to try it out anyway, I loaded DeepSeek’s smallest R1 Qwen distill locally on my MacBook Pro with the base M4 chip and 16GB of RAM and asked a question:

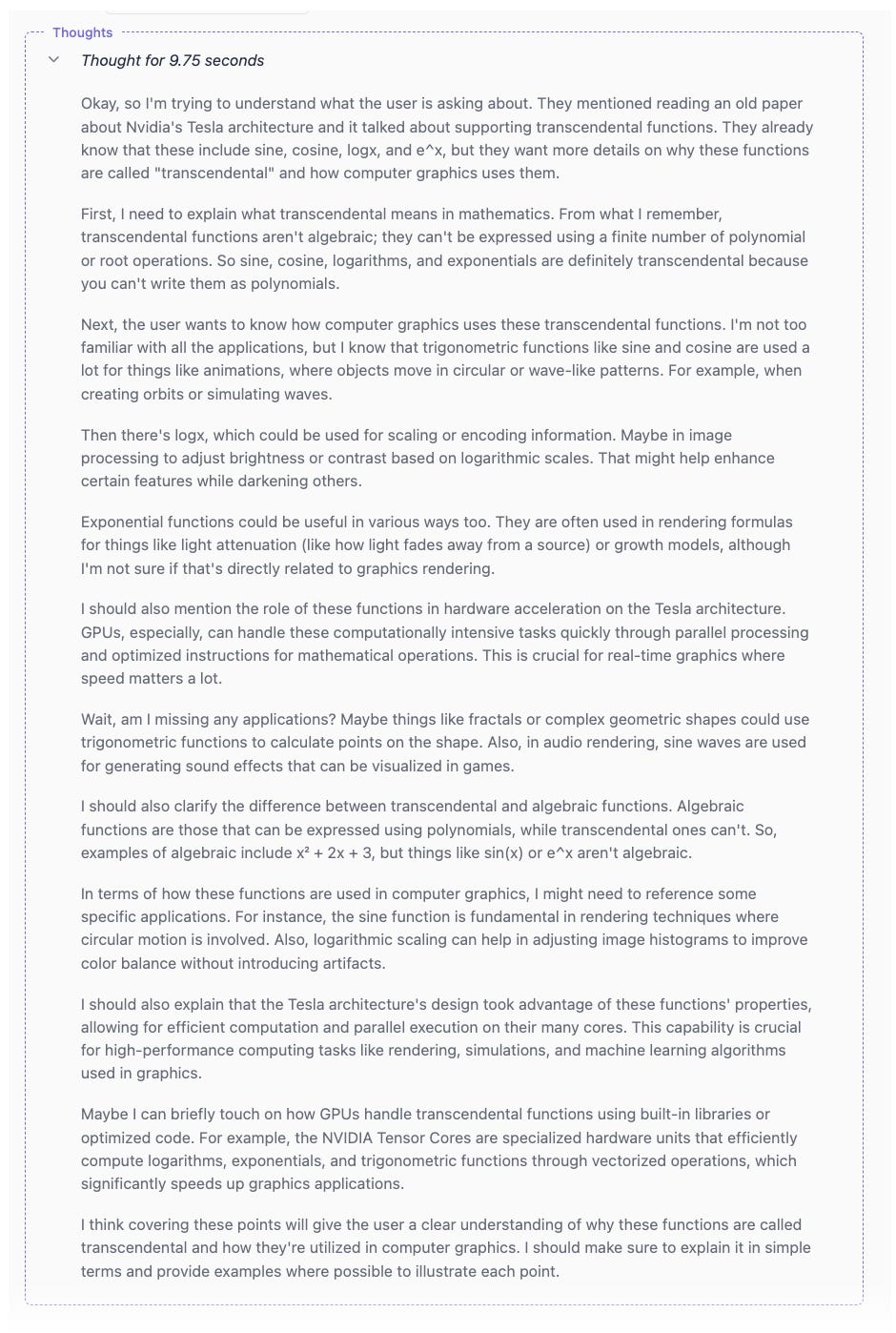

DeepSeek thought for 10 seconds and streamed its verbose chain-of-thought so I could see it “thinking”.

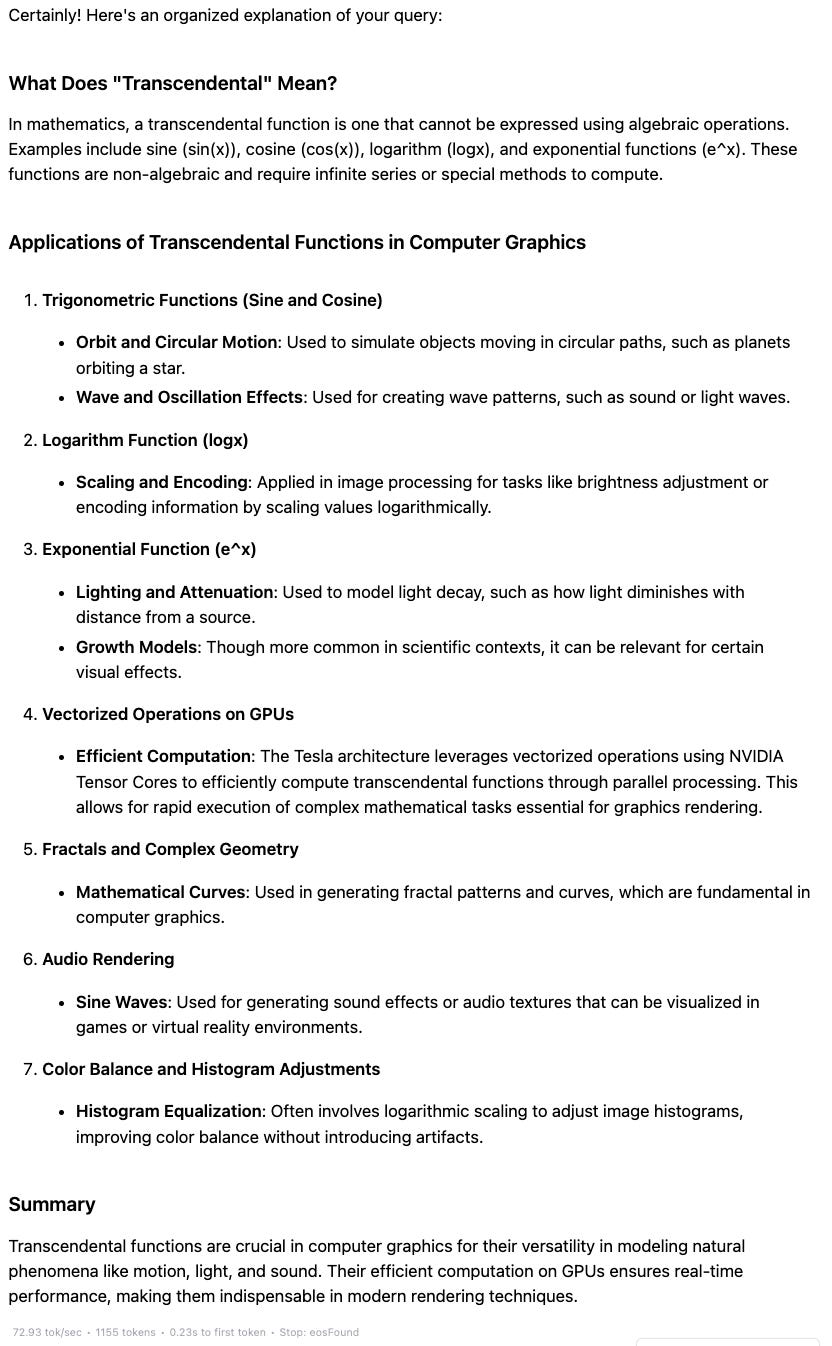

Not bad for a small on-device assistant. After these internal thoughts, the model streamed an answer at ~70 tokens/sec on my Mac, which was plenty fast for this use case.
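Why can such a small model stream so quickly? A common rule of thumb: when generating one token at a time, the chip reads roughly every weight from memory per token, so tokens/sec is capped near memory bandwidth ÷ weight size. A minimal sketch, assuming the ~120 GB/s memory bandwidth Apple quotes for the base M4 and the nominal 1.5B parameter count; real throughput lands below these ceilings.

```python
# Assumption: ~120 GB/s unified memory bandwidth, as Apple quotes for the base M4.
M4_BANDWIDTH_GB_PER_S = 120.0

def decode_ceiling_tps(params_billions: float, bits_per_weight: int,
                       bandwidth_gb_per_s: float) -> float:
    """Rough upper bound on single-stream decode speed, in tokens/sec.

    Each new token reads (roughly) every weight once, so speed is capped
    near bandwidth / weight bytes. Ignores KV-cache reads, compute limits,
    and software overhead, so measured numbers come in lower.
    """
    weight_gb = params_billions * bits_per_weight / 8
    return bandwidth_gb_per_s / weight_gb

if __name__ == "__main__":
    for bits in (16, 8, 4):
        tps = decode_ceiling_tps(1.5, bits, M4_BANDWIDTH_GB_PER_S)
        print(f"1.5B @ {bits}-bit: <= ~{tps:.0f} tokens/sec")
```

That works out to ceilings of about 40, 80, and 160 tokens/sec for 16-, 8-, and 4-bit weights, so the ~70 tokens/sec observed above sits within that range.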

The answer is not bad. It’s helpful enough to satiate my curiosity. And I see no reason why it won’t get better. These DeepSeek models come from a small team open-sourcing their work and even sharing details about experiments that didn’t work! It’s reasonable to expect local reasoning-model progress to accelerate, given DeepSeek’s success and willingness to share its tacit knowledge.

General-purpose (GPT-4o-style) models and reasoning models are coming to a device near you.

Yet there are still technical constraints; certain form factors (glasses) and lower-cost consumer devices won’t have room for much RAM. But as we’ll explore later in this post, there are methods to keep AI models small while still extendable enough to support specific use cases.

Apple’s AI Strategy Credit

On-device AI needs a champion, and it won’t be the foundation model labs, whose subscription business models are predicated on cloud inference; they don’t want you to run local AI. You’ll get the best answers from their state-of-the-art models, but you’ve got to send your query to their cloud. Implicit in this is that you trust them with any data you send.

OpenAI chooses an opt-out policy; by default, they’ll train on your data. I wonder how many of the 300M weekly active users realize this?

When you use our services for individuals such as ChatGPT, DALL•E, Sora, or Operator, we may use your content to train our models.

You can opt out of training through our privacy portal by clicking on “do not train on my content.”

On the other hand, Apple generates revenue through hardware sales and traditional software services and therefore has no problem with on-device inference that maintains your privacy and gives you the best experience possible. So if I want to take a picture with my kids and generate a cartoon version of us for fun, I don’t have to send our likeness to OpenAI. Yes, I told OpenAI they can’t train on my content, but do I truly know what they’re doing with it? I’m not paranoid — I trust the word of many leaders like Satya, Sundar, and Tim Cook. But OpenAI?

This privacy-first benefit of on-device AI is a strategy credit for Apple. As Ben Thompson defines it,

Strategy Credit: An uncomplicated decision that makes a company look good relative to other companies who face much more significant trade-offs.

As a consumer device company, Apple already wants to give you on-device AI for the best experience. Moreover, on-device AI is best for Apple’s capital and operational expenses; the consumer pays for the inference hardware and electricity. So the on-device AI choice is already an easy one for Apple. Privacy comes with the decision, which perfectly aligns with Apple’s existing stance.

Hence, Craig Federighi taking a strategy credit victory lap:

Apple’s AI Challenges

While Apple has all the ingredients to win in consumer AI, success isn’t inevitable.

Behind the paywall, we’ll analyze Apple’s current progress and upcoming challenges. Is Apple Intelligence the right approach? What should Apple do next?