CPU Server Growth, Enterprise AI Agent Adoption Challenges

A Case for CPU Server Growth, Enterprise AI Agent Usability, Business Model and Process Challenges

AI Agents = More CPUs

Jensen Huang recently implied that server CPU demand will rise. From Episode 100 of The Circuit:

Ben Bajarin: Jensen said something at the financial analyst Q&A that I didn't pick up on before…Jensen pointed out that the AI server factories are another software layer above your general-purpose servers. So you've got all your enterprise software, your Amazon web apps, your Netflix, etc., running on these general-purpose servers. His point is that doesn't go away.

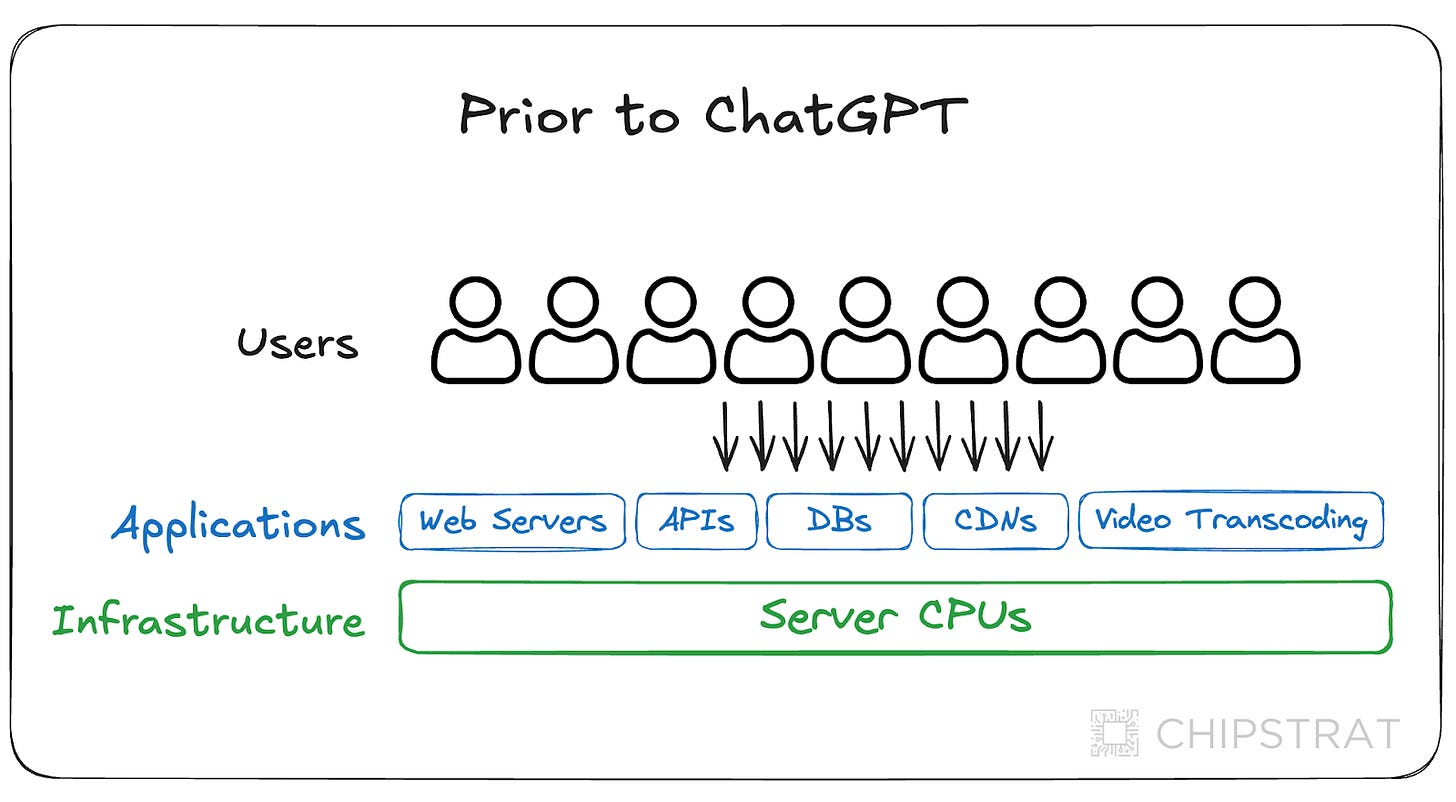

I’ve been saying all along that most traditional workloads won’t be ported to run on GPUs. Microsoft won’t rewrite Outlook to run on GPUs, Oracle won’t port databases to GPUs, and the open-source community won’t port Apache or Nginx web servers to GPUs. Less technical members of the investment community interpret Jensen’s excited “everything will be accelerated!” claims as the death of CPU servers.

But Jensen is now articulating the opposite, which I totally agree with: agents will increase the amount of CPU compute needed!

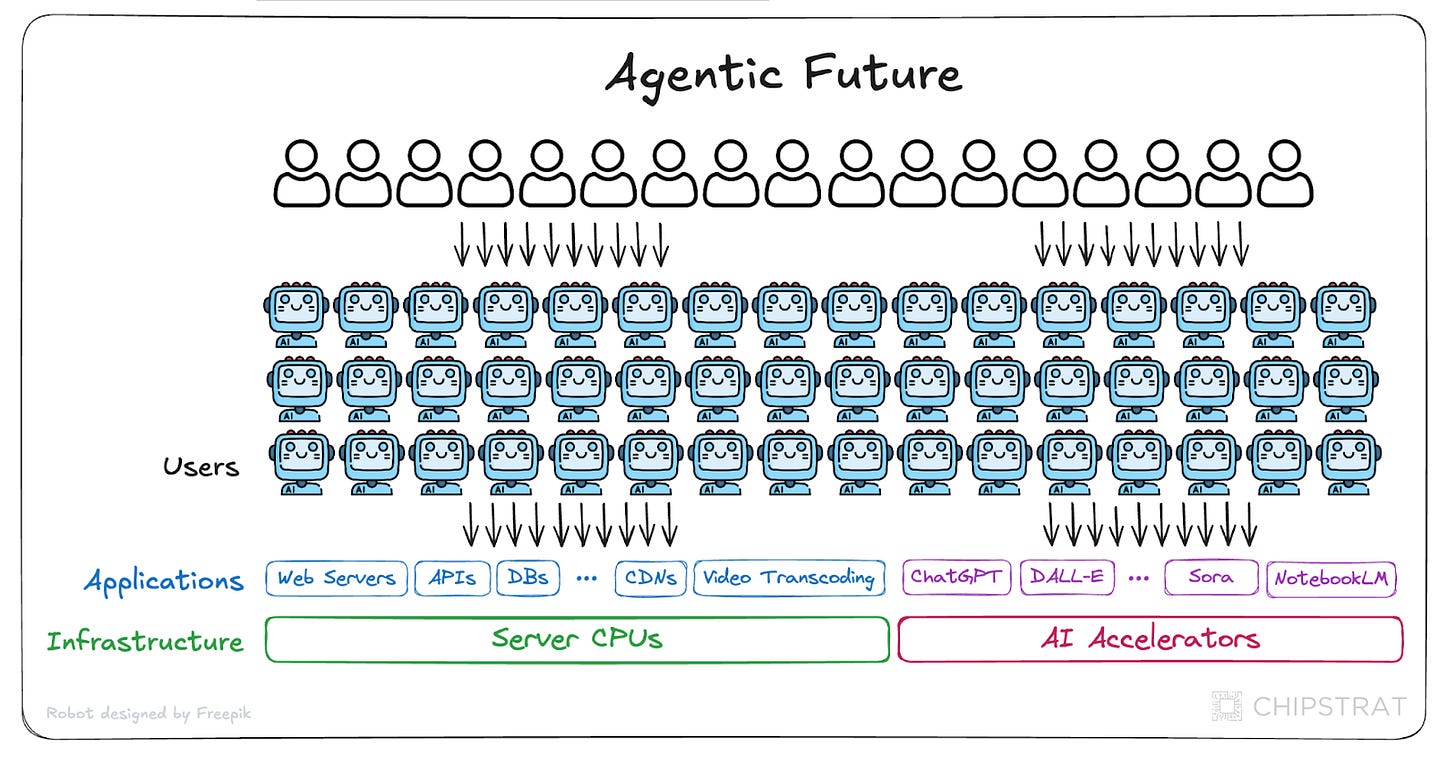

Said another way, there will be many, many more “workers” running traditional workloads on CPUs. GPU-powered digital agents will do digital work, and that work consists primarily of CPU-powered workloads.

To illustrate: before ChatGPT, humans ran traditional workloads, mostly on cloud CPUs.

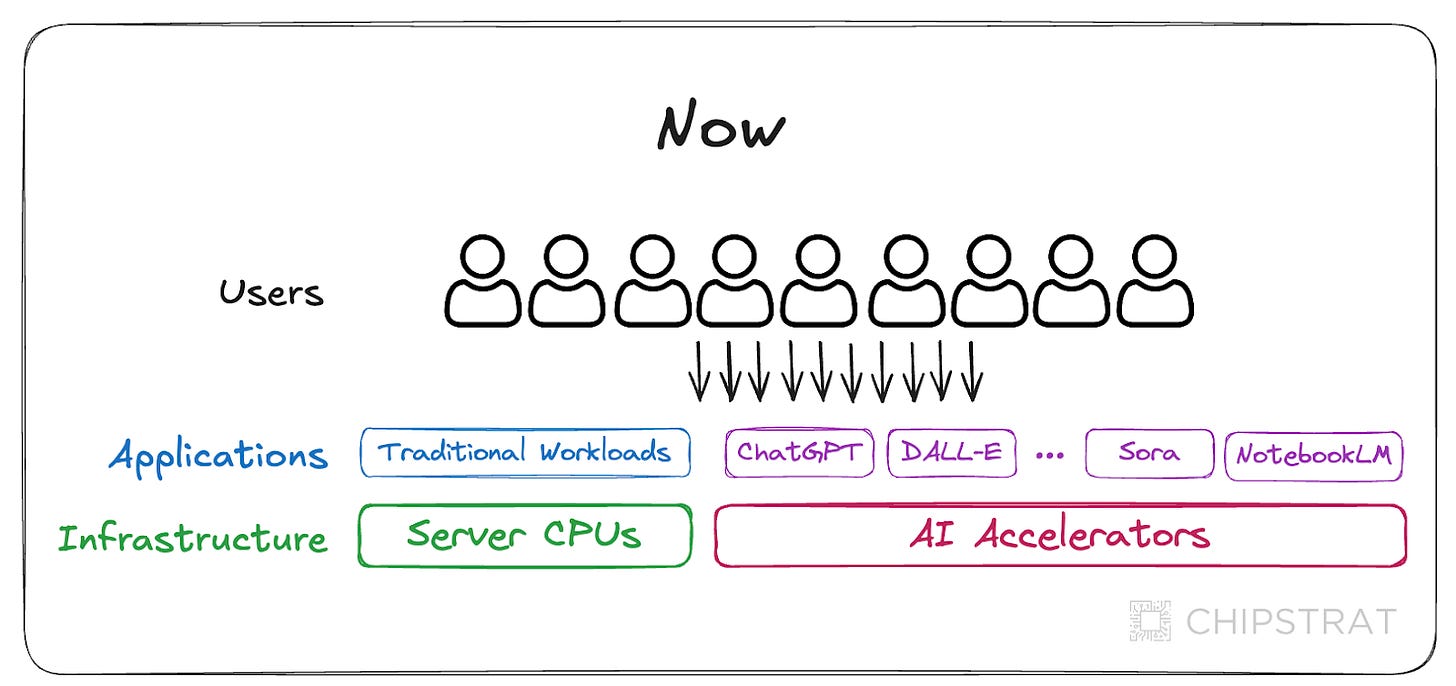

Over the past two years, we’ve witnessed the rise of generative AI-powered workloads running on AI accelerators like Nvidia GPUs and Google TPUs. These applications are being used in addition to the aforementioned traditional workloads.

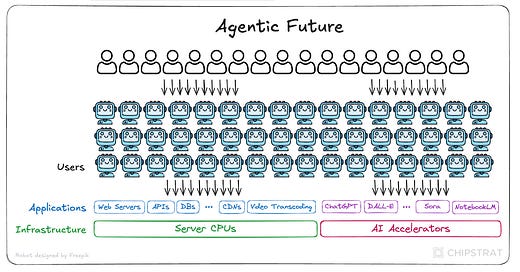

However, in the coming agentic era, a single person may have many agents working for them, so we’ll see an influx of compute consumption from agents.

Think of this as having access to many interns at your bidding.

If I had interns, I would do a lot more work! I could and should do a lot of spreadsheet-based analysis to support my decision-making, but I don’t have the time. However, with agents, just like interns, I could give high-level directives and send them off.

What’s the implication for the semiconductor industry? Access to more workers means more traditional work gets done, which increases the load on conventional and accelerated infrastructure.

This is a bull case for CPU-server TAM expansion!

An agentic world means more CPUs are needed because more workers are doing traditional CPU-based tasks.

Again, many of these workloads aren’t going to move to GPUs. For example, these digital robot interns will do a ton of Microsoft Excel and Outlook work, and probably a bunch of X and Facebook spamming too.
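To make the “more workers, more CPU” argument concrete, here is a toy model in Python. It is a minimal sketch, not a forecast: every input (headcount, agents per person, CPU-hours per worker, and how much traditional work an agent generates relative to a human) is a hypothetical placeholder. It counts only the traditional, CPU-side work and ignores the GPU inference that powers the agents themselves.

```python
"""Toy model of CPU demand when agents join humans as 'workers'.

All inputs are hypothetical placeholders chosen for illustration, not forecasts.
"""
from dataclasses import dataclass


@dataclass
class Scenario:
    humans: int                 # people doing digital work (hypothetical)
    agents_per_human: float     # agents each person directs (hypothetical)
    cpu_hours_per_human: float  # traditional CPU work per person per day (hypothetical)
    agent_work_ratio: float     # an agent's CPU work relative to a human's (hypothetical)


def daily_cpu_hours(s: Scenario) -> float:
    """Traditional (CPU-side) work generated by humans plus their agents."""
    human_load = s.humans * s.cpu_hours_per_human
    agent_load = s.humans * s.agents_per_human * s.cpu_hours_per_human * s.agent_work_ratio
    return human_load + agent_load


# Same people, same per-person workload; the only change is agents being added.
before = Scenario(humans=1_000, agents_per_human=0, cpu_hours_per_human=2.0, agent_work_ratio=0.5)
after = Scenario(humans=1_000, agents_per_human=5, cpu_hours_per_human=2.0, agent_work_ratio=0.5)

growth = daily_cpu_hours(after) / daily_cpu_hours(before)
print(f"CPU demand multiplier: {growth:.1f}x")
```

The multiplier simplifies to 1 + agents_per_human × agent_work_ratio, so under these assumptions headcount drops out entirely: even agents that each do only half a human’s traditional work push CPU demand up several-fold once each person directs a handful of them.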

Agent Usability

Of course, this is predicated on agents being useful, and some early adopters have struggled with the first agent tools. I recommend reading Thoughts On A Month With Devin, in which AnswerAI recounts trying to use a coding agent:

Working with Devin showed what autonomous AI development aspires to be. The UX is polished - chatting through Slack, watching it work asynchronously, seeing it set up environments and handle dependencies. When it worked, it was impressive.

But that’s the problem - it rarely worked. Out of 20 tasks we attempted, we saw 14 failures, 3 inconclusive results, and just 3 successes. More concerning was our inability to predict which tasks would succeed. Even tasks similar to our early wins would fail in complex, time-consuming ways. The autonomous nature that seemed promising became a liability - Devin would spend days pursuing impossible solutions rather than recognizing fundamental blockers.

This reflects a pattern we’ve observed repeatedly in AI tooling. Social media excitement and company valuations have minimal relationship to real-world utility. We’ve found the most reliable signal comes from detailed stories of users shipping products and services. For now, we’re sticking with tools that let us drive the development process while providing AI assistance along the way.

AnswerAI points out that usefulness, meaning the agent actually completing tasks, is missing. So far we’ve only seen possibilities: cherry-picked demos that paint a picture of what the future could be.
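AnswerAI’s reported results are easy to restate as rates. This small Python sketch adds no new data; it only tallies the counts quoted above (14 failures, 3 inconclusive, 3 successes out of 20). Treating every inconclusive task as a success, to get a best-case upper bound, is an assumption for illustration.

```python
"""Tally of AnswerAI's reported Devin outcomes (20 tasks, as quoted)."""
from collections import Counter

# Outcome counts taken directly from the quoted post.
outcomes = Counter({"failure": 14, "inconclusive": 3, "success": 3})
total = sum(outcomes.values())

for outcome, count in outcomes.most_common():
    print(f"{outcome:>12}: {count:2d}/{total} = {count / total:.0%}")

# Assumption: count every inconclusive task as a success for a best-case bound.
best_case = (outcomes["success"] + outcomes["inconclusive"]) / total
print(f"best-case success rate: {best_case:.0%}")
```

That is a 15% observed success rate, and even the generous bound tops out at 30%.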

AnswerAI’s journey is just one data point; I’m sure others have had more success. But their pain is also a red flag: programming should be easier for agents than many other jobs. If coding agents aren’t ready yet, 2025 will not be the year of mainstream agentic AI adoption.

Yet I see no fundamental reason we can’t get to useful AI agents; we’re just early. Maybe 2025 is the year of improved AI usefulness for early adopters.

Behind the paywall, let’s explore the challenges to widespread enterprise AI agent adoption.