Intel CPU follow-up, Nvidia Superchips, AMD MI300A

Intel Inside (AI data centers), Grace Hopper & Grace Blackwell, MI300A

Last week, we asked if Intel CPUs were undifferentiated in AI data centers. This post continues that analysis and investigates the influence of Nvidia's Superchips and AMD's MI300A on data center CPU usage.

CPUs in the AI server

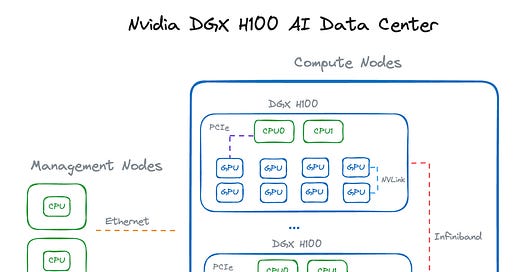

Understanding the role and quantity of x86 CPUs within AI data centers is key to accurately sizing the incremental CPU total addressable market (TAM). Let’s look at some examples.

Imbue

Imbue recently wrote about their 4,092-GPU H100 cluster, which has an 8:1 GPU-to-CPU ratio. This LLM training cluster uses more than 500 CPUs.
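The "more than 500 CPUs" figure follows from dividing the GPU count by the GPU-to-CPU ratio. Below is a minimal sketch in Python, assuming every CPU serves a fixed number of GPUs. The function name `cpus_needed` is hypothetical, and rounding up is an assumption: 4,092 is not a multiple of 8 (4,092 / 8 = 511.5), so the exact count depends on how the leftover GPUs are housed. The extra ratios in the loop are hypothetical comparison points, not figures from Imbue.

```python
def cpus_needed(gpu_count: int, gpus_per_cpu: int) -> int:
    """Estimate host CPUs for a cluster with a fixed GPU-to-CPU ratio.

    Assumption: a partially filled host still needs its own CPU,
    so the result is rounded up (integer ceiling division).
    """
    if gpu_count < 0 or gpus_per_cpu <= 0:
        raise ValueError("gpu_count must be >= 0 and gpus_per_cpu > 0")
    return (gpu_count + gpus_per_cpu - 1) // gpus_per_cpu


# Imbue's cluster as described: 4,092 H100s at an 8:1 GPU-to-CPU ratio.
print(cpus_needed(4_092, 8))  # 512

# Hypothetical ratios, to show how CPU demand scales as the ratio tightens.
for ratio in (8, 4, 2, 1):
    print(f"{ratio}:1 -> {cpus_needed(4_092, ratio)} CPUs")
```

Holding the GPU count fixed, halving the ratio roughly doubles the CPU count, which is why the GPU-to-CPU ratio matters so much when sizing CPU demand from GPU deployments.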

The CPUs in this particular training cluster are used for many tasks, including executing host-level health checks and monitoring scripts to maintain system stability, managing the InfiniBand fabric via provisioning and recovery scripts, orchestrating the machine learning workflow and data transfers, and coordinating the distributed training process across GPUs and nodes.

The article doesn’t say which CPU vendor Imbue’s servers use. It might be Intel, as the article mention…