Paid subscribers recently examined “ZML's Hardware-Agnostic Distributed Inference,” then explored Intel’s challenges in “Intel Skips 20A” and “IDM Death Spiral.”

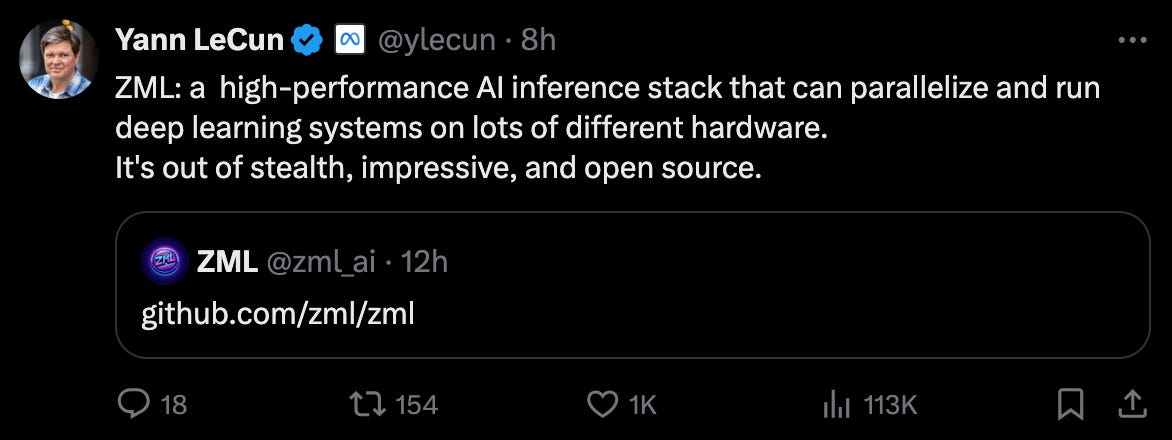

In related news, ZML launched yesterday.

The AI PC Mirage

Intel’s Journey

First, a recap for new subscribers.

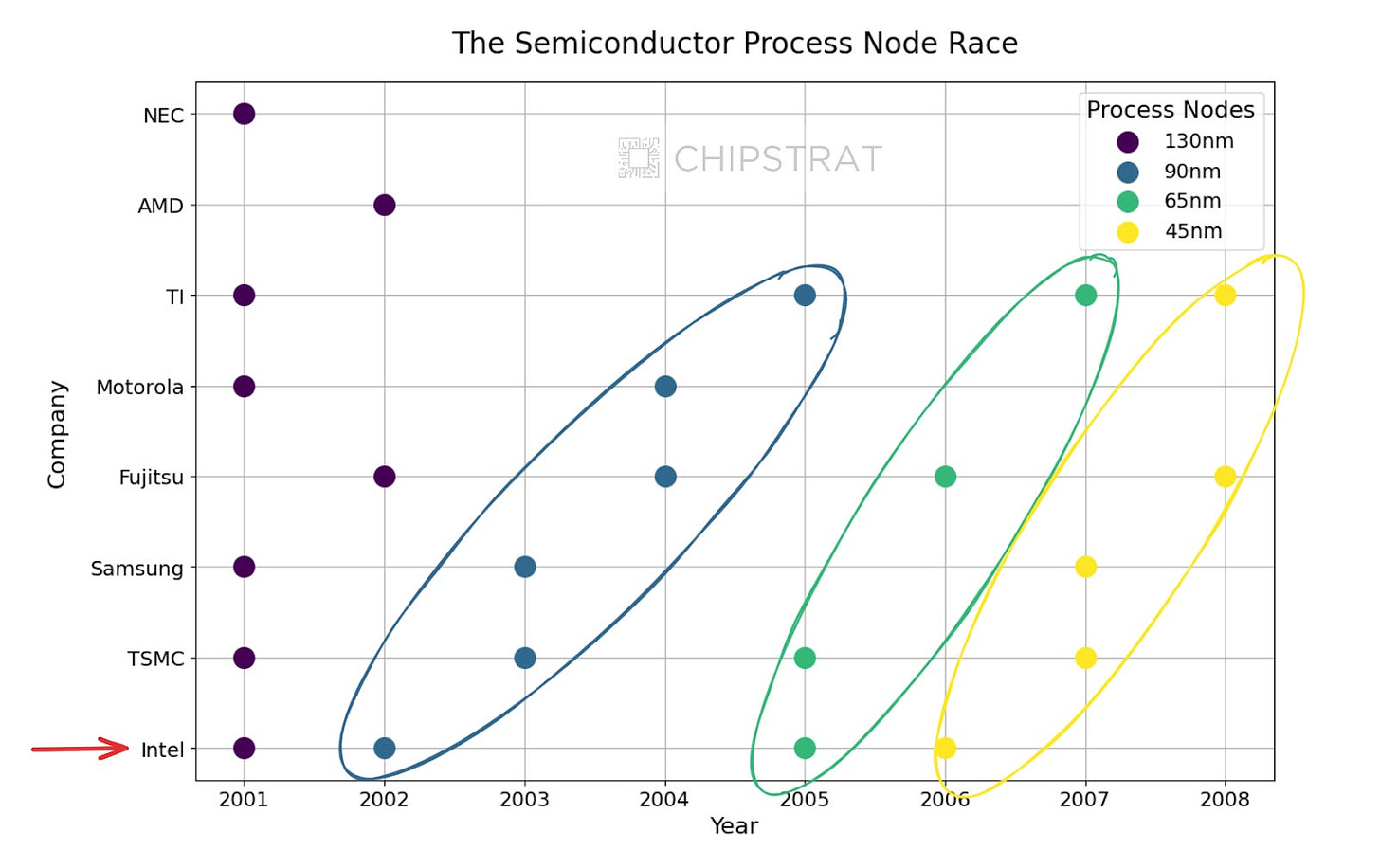

Our first deep dive identified Intel’s main advantage: its ability to invent and manufacture smaller transistors faster than competitors. This head start allowed Intel’s chip design team to launch CPUs that outperformed others.

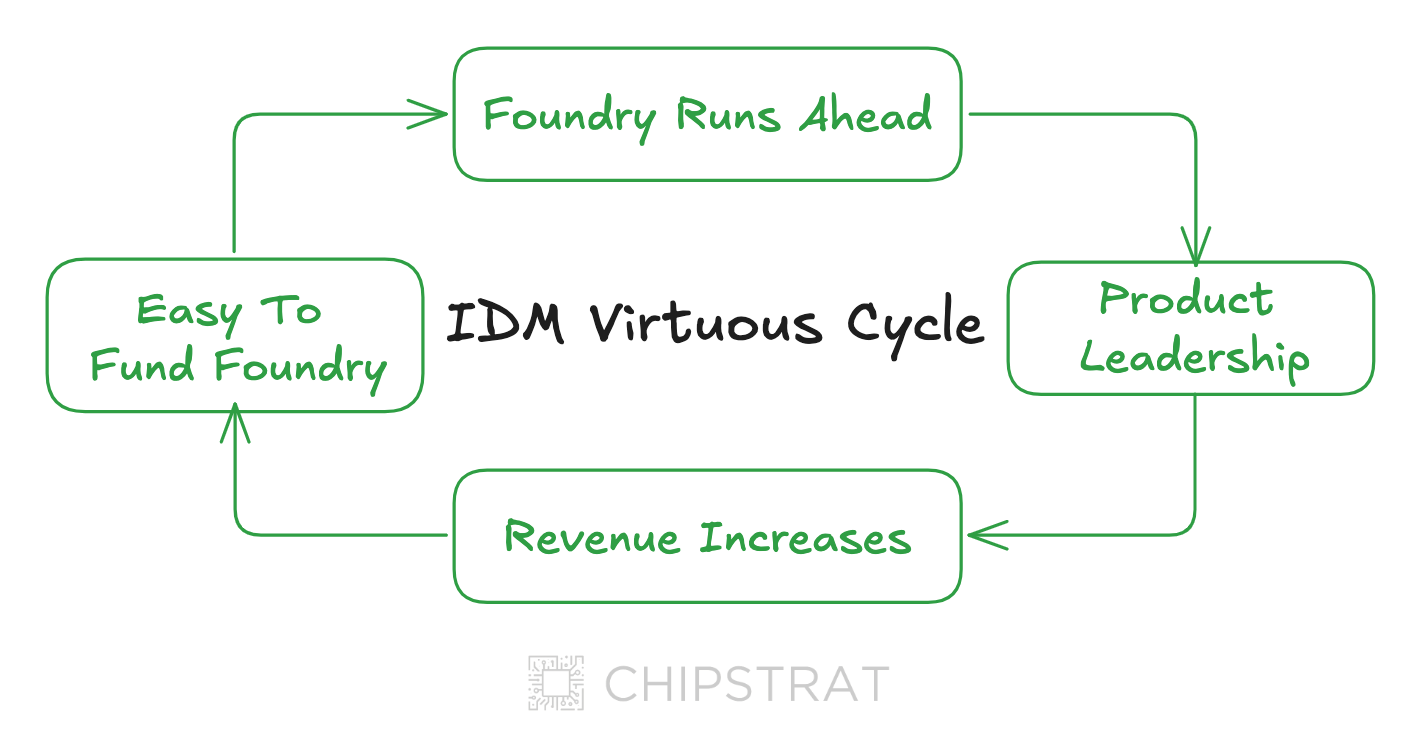

Intel’s consistent semiconductor manufacturing leadership fueled a virtuous cycle. Smaller transistors led to product strength, which commanded greater market share and profits. These profits were channeled back into R&D to win the next node. Intel’s IDM business model worked well throughout the 2000s.

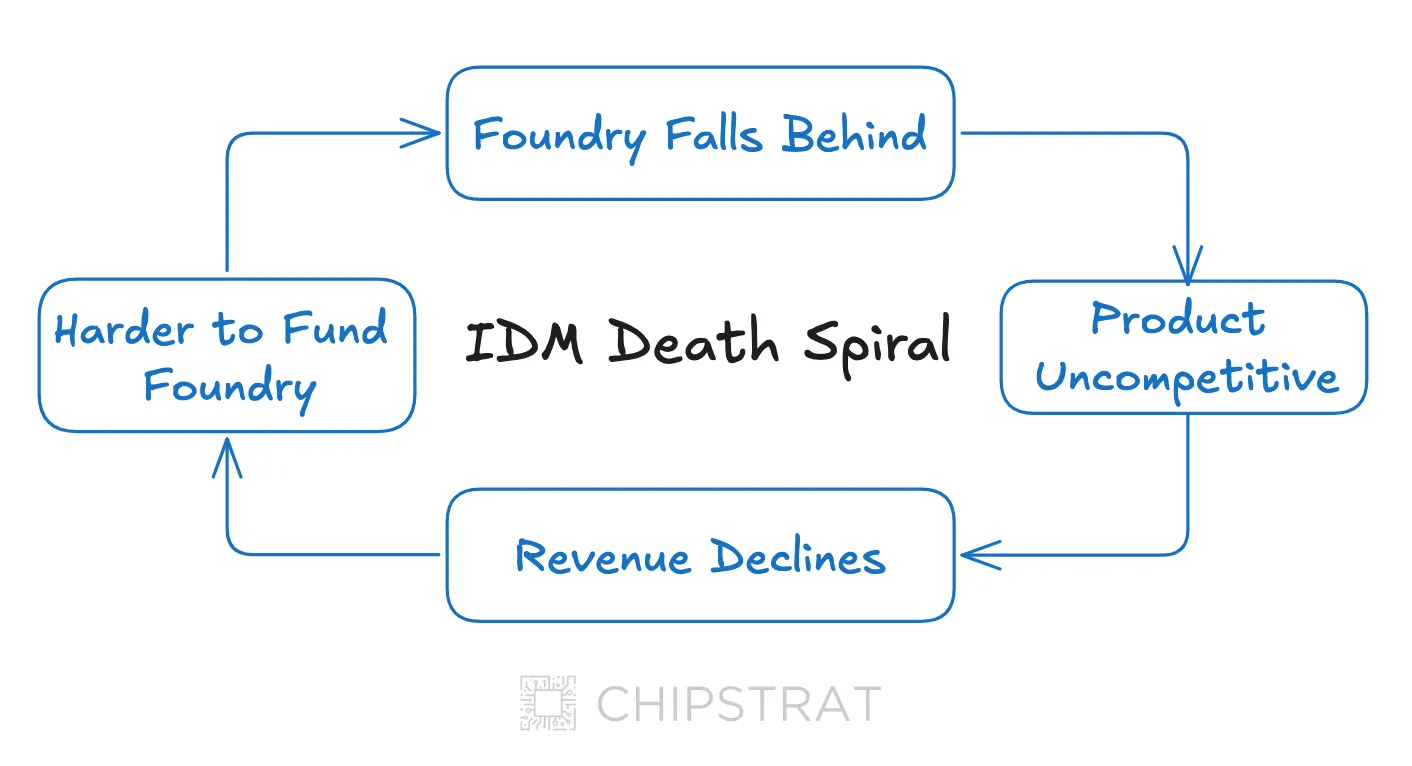

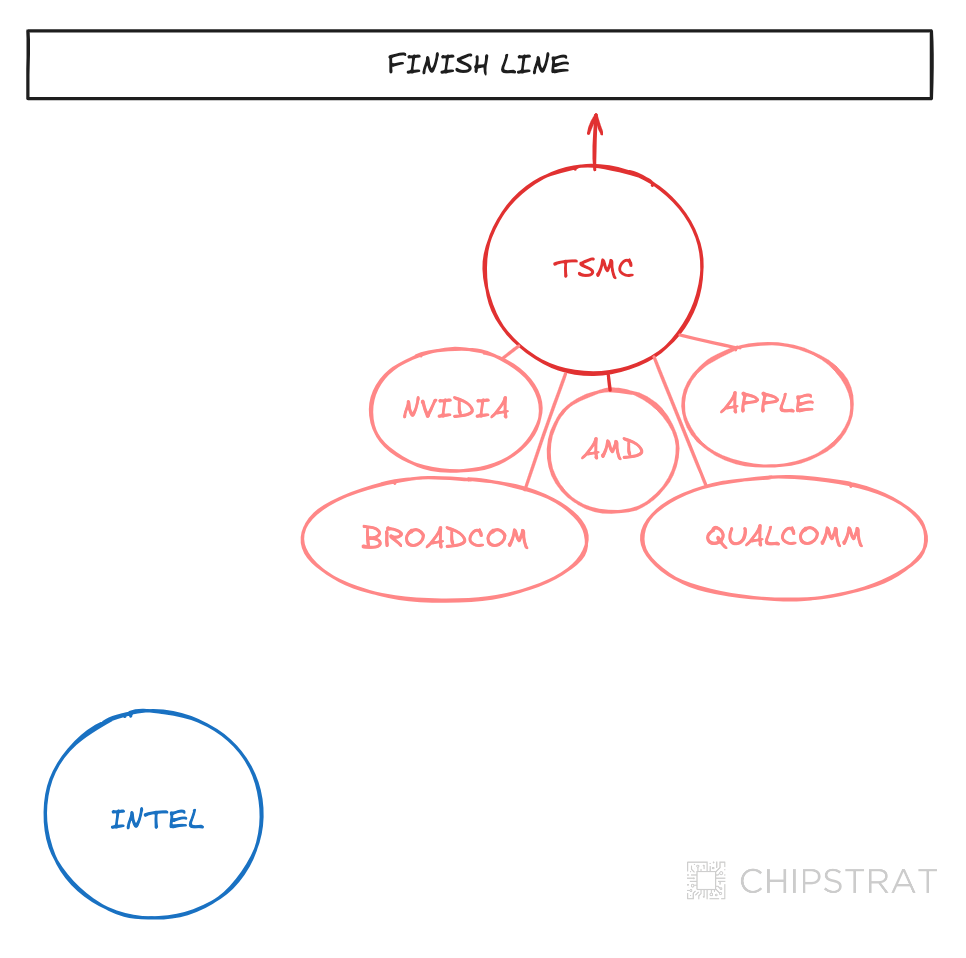

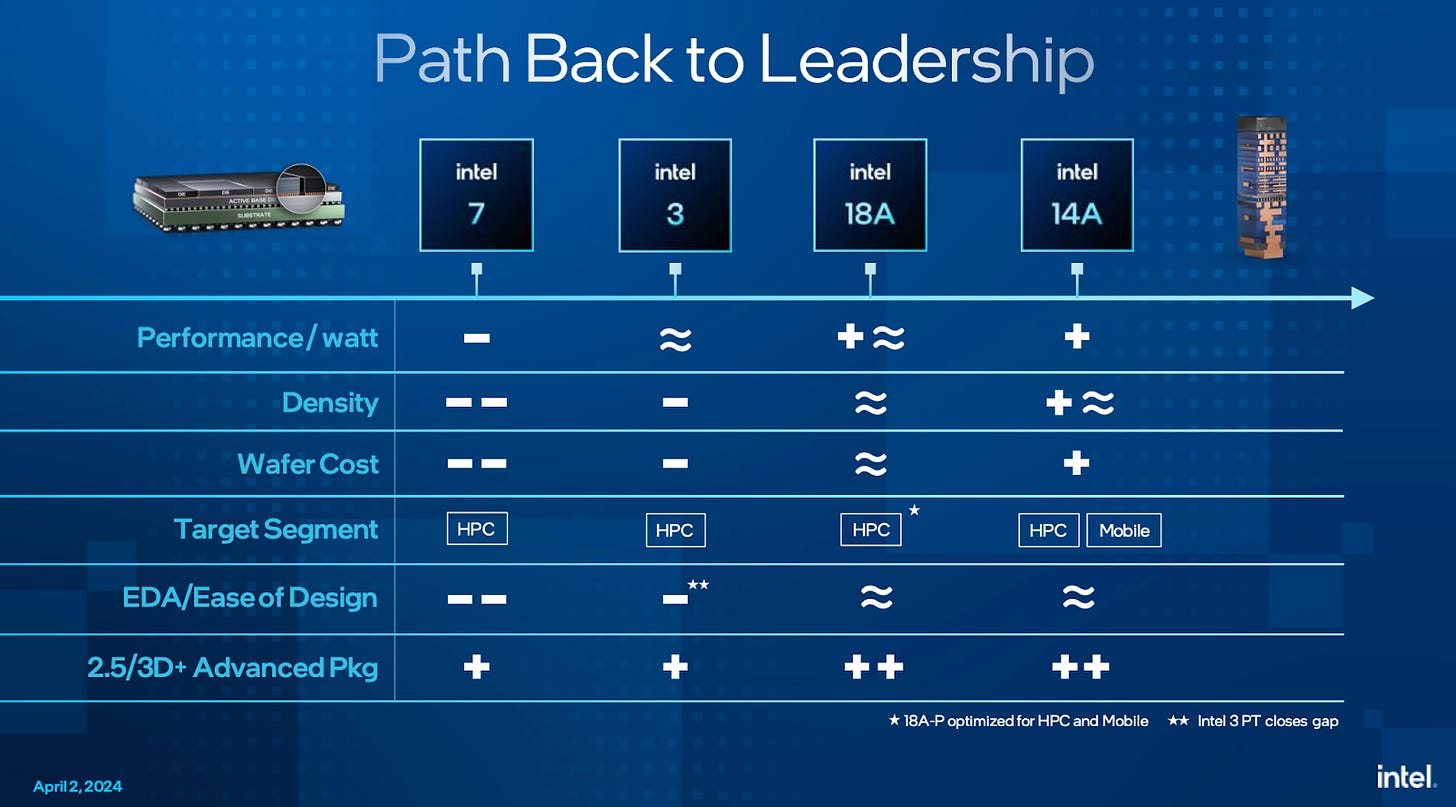

However, in the 2010s, Intel lost its manufacturing lead and fell into the IDM Death Spiral. Intel’s lagging foundry resulted in weaker CPU performance, reduced sales, and narrower margins. Consequently, Intel had fewer funds to reinvest in Foundry, while facing increasing pressure to catch up.

Industry consolidation amplified Intel’s problem. As leading-edge manufacturing consolidated at a few foundries, the entire fabless design industry was pulled ahead while Intel’s fabs and products were left behind.

Adding to Intel's woes, Arm rivals have been aggressively chipping away at the x86 lock-in that once served as a key competitive moat for Intel's Product group.

Fast forward to today.

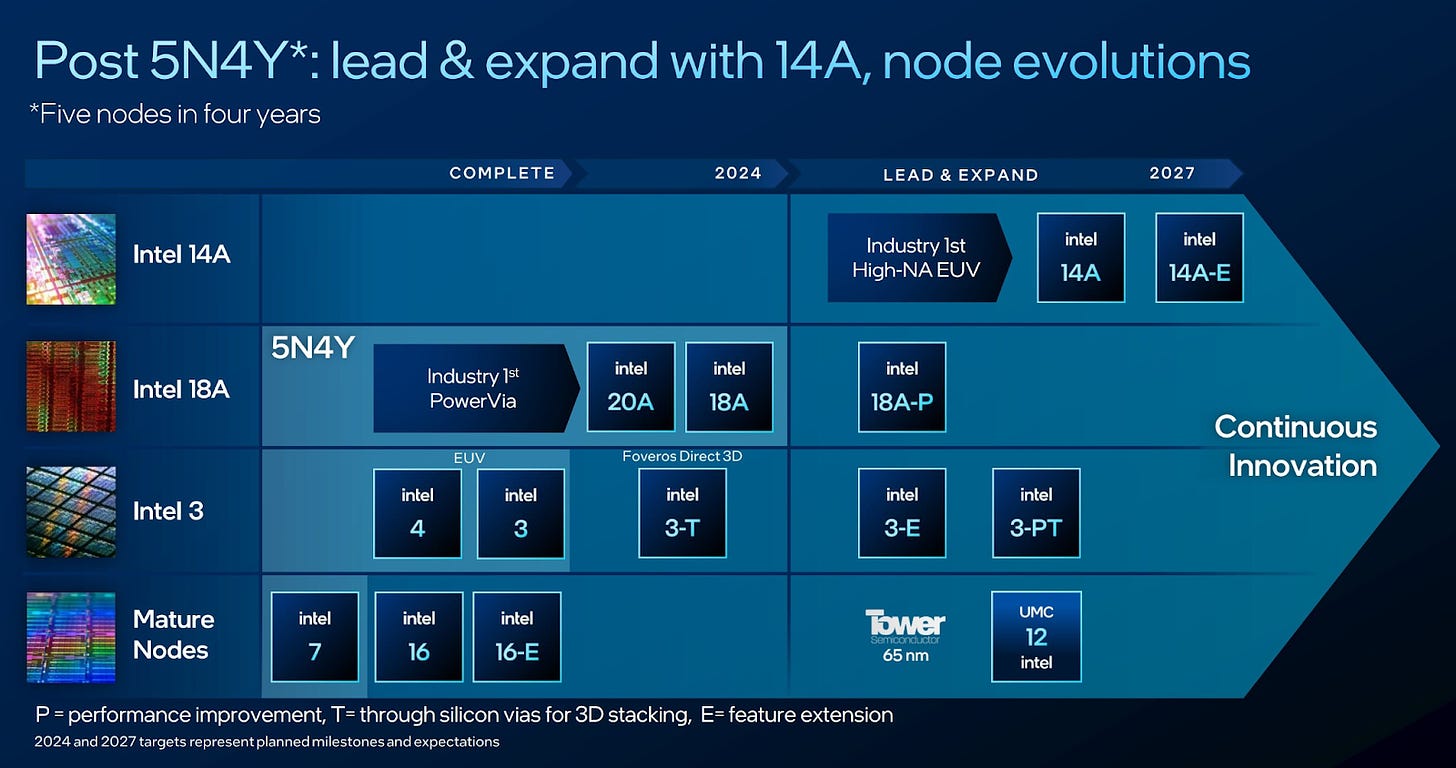

Intel is trying to break out of this downward spiral by speedrunning through several semiconductor manufacturing process iterations (5N4Y, or five nodes in four years) in a financially disciplined manner (IDM 2.0).

Delivering the final step of this journey (18A) will prove that Intel’s catchup strategy worked. Equally important, it should convince fabless designers to view Intel Foundry as a technically viable contender for wafer production. Whether Intel will actually be an economically viable contender is a topic for a later post.

However, Intel’s CPU business is seeing a concerning drop in yearly revenues, and 18A alone can’t solve that. Intel believes it will regain leadership at 14A, revitalizing CPU revenues and jumpstarting its nascent external Foundry business.

18A will begin its production ramp in 2025, but 14A might not ramp until late 2026 or early 2027.

The question is whether Intel will have enough money to see 5N4Y and 14A through.

Here’s where the AI PC comes in.

The AI PC Market Expansion

What if the CPU market grows, lifting Intel’s revenue even as its market share shrinks?

The Generative AI Boom

ChatGPT ignited a Generative AI boom in November 2022, driving massive demand for Nvidia GPUs.

Intel mostly missed out, as its accelerator (Gaudi) and ecosystem (OpenVINO) are not competitive with Nvidia’s Hopper and CUDA.

Ironically, this lack of AI competitiveness contributed to Nvidia’s decision to choose Intel's Xeon CPUs as the preferred head node for AI accelerator systems. Yet Intel’s incremental revenue from AI may not last, as there’s every reason to believe Intel’s CPU role in AI systems will diminish as Nvidia’s Arm-based CPU (Grace) gains share in next-gen Grace Blackwell systems.

How about client CPUs, Intel’s forte? Could demand skyrocket for laptops and desktops that run generative AI locally (“AI PCs”)?

This seems plausible at first glance, as generative AI shows signs of boosting productivity, and PCs are primarily used for productivity tools like Microsoft Office.

Intel quickly fell in love with this exact idea and committed to creating an AI PC market. The goal was to develop a multi-year CPU market expansion, which might generate the incremental revenues needed to ease 5N4Y funding concerns.

Casting Vision

In 2023, Intel started talking a lot about AI PCs. Here’s CEO Pat Gelsinger at Intel’s 2023 Innovation Day, sowing AI PC seeds.

Pat Gelsinger: I'll tell you what's even cooler is if we put it [AI] in the hands of every human on Earth, making it useful with nimble models that can run on your PC that enable these models to be literally everywhere offering personal, private, secure AI capabilities that infuses every aspect of our daily lives.

Pat then teased the forthcoming launch of the AI PC and hinted at an oncoming refresh cycle.

Pat Gelsinger: As we bring this age of the AI PC, we're you know thrilled by the momentum that we're seeing. We're going to bring millions of AI enabled PCs, ramping to tens of millions, to hundreds of millions to enable tens of billions of TOPs of capabilities.

Wow, Pat is promising hundreds of millions of AI PCs. How exciting! Great timing for Intel!

Yet many of Pat’s use cases from this demo don’t actually need NPU inference. They can run locally on the integrated GPU or send the inference request to the cloud.

Here’s a link with videos demonstrating many of these use cases Pat mentioned.

Watch the Zoom video. Do Zoom users need an Intel AI PC to run features like background blur locally? No. The video mentions that Zoom’s app offloads the AI processing to the NPU when one is available, which helps battery life. But an NPU isn’t required; the feature can run on the GPU.

Maybe there are Zoom background blur lovers who are in Zoom meetings all day from locations where they can’t plug into an outlet—if that’s you, give the AI PC a try and see if it boosts your battery life! But that’s a pretty narrow use case, and it’s unclear what kind of battery life benefits we’re even talking about.

2024 - The Year of the AI PC

Intel stayed the course and worked hard to realize its vision of an AI PC market. In December 2023, it hosted an "AI Everywhere" special event to generate buzz and announce the imminent arrival of AI PCs.

From AI Everywhere in December 2023,

Pat Gelsinger: We’ve been seeing this excitement of generative AI, the star of the show for 2023, but we think ‘24 marks the AI PC. That will be the star of the show in this coming year.

Intel’s OEM partners wanted this future to exist, too. Here’s HP’s CEO touting 2024 as the year of the AI PC:

Enrique Lores: Together, we will personalize the way people everywhere live, work, and play like never before. We're going to make 2024 the year of the AI PC.

Lenovo caught the AI PC bug, too:

Luca Rossi: Congratulations to the Intel team for leading the industry into this new exciting AI PC era.

However, merchant silicon companies and OEMs can’t make the AI PC happen without software support. Fortunately, Microsoft’s CEO Satya Nadella is on board!

Satya Nadella: At Microsoft, we are committed to helping people and organizations adapt and thrive to this new age of AI. That's one reason our longstanding partnership with Intel is so important… From our work on confidential computing to bringing the latest Intel Xeon CPUs with advanced matrix extensions to our cloud, to our collaboration on this new era of AI PCs starting with Copilot on Core Ultra.

There’s just one problem. The AI PC refresh is a mirage.

Way Too Early

There’s no compelling reason for the average PC user to run local generative AI on a laptop—not yet anyway. Most consumers in 2024 aren’t using generative AI yet, and if they do, it’s in the cloud.

Here’s a short anecdote. I wanted to hear what others thought about AI PCs, especially folks outside the Silicon Valley echo chamber. I figured I’d start with my wife.

Me: Random question. Do you have any interest in buying an AI PC?

Wife: [long pause as she thinks about what that term even means]

Wife: Why would I need an “AI PC”? I have Google Gemini.

My wife uses her phone's cloud-based Gemini assistant if she needs generative AI. Why reach for a laptop? Who cares if it runs locally?

That’s just one cherry-picked anecdote, so let’s look at the data and see if an AI PC refresh is genuinely desired or simply a mirage.

Generative AI Usage

Big Enough to Matter?

First, would an AI PC refresh be big enough to matter? If not, this whole AI PC hype is moot.

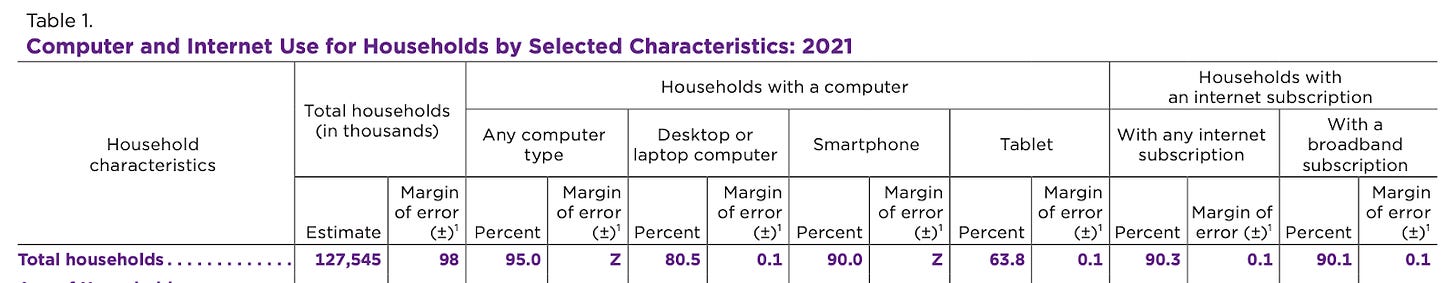

In 2021, the US Census Bureau reported that 81% of American households had desktops or laptops.

That’s a lot of households with PCs! Sure, that was three years ago, but nothing has materially changed about the competing form factors (phones, tablets, watches, glasses, etc.) to make that number plummet in just three years.

Given that the US has more than 100 million households, an AI PC refresh could drive millions or even tens of millions of incremental purchases from households alone, and that’s not even counting workplace PCs.

This is a significant enough volume to add billions of dollars in annual revenue for Intel.
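Here is that sizing as a quick back-of-the-envelope sketch. The household count (using 100 million as a floor) and the 81% PC share come from the figures above; the refresh fractions are hypothetical inputs chosen only to show the range, not forecasts, and the sketch ignores workplace PCs entirely.

```python
# Back-of-the-envelope sizing of a hypothetical US household AI PC refresh.
# Inputs from the text: >100M US households (100M used as a floor), 81% with a PC.
# The refresh fractions below are illustrative assumptions, not forecasts.

US_HOUSEHOLDS = 100_000_000  # lower bound ("more than 100 million")
PC_SHARE = 0.81              # 2021 Census figure cited above


def incremental_units(refresh_fraction: float) -> int:
    """PCs bought if `refresh_fraction` of PC-owning households refreshed."""
    return round(US_HOUSEHOLDS * PC_SHARE * refresh_fraction)


pc_households = round(US_HOUSEHOLDS * PC_SHARE)
print(f"PC households: {pc_households:,}")

for fraction in (0.05, 0.10, 0.25):  # hypothetical refresh fractions
    print(f"{fraction:.0%} refresh -> {incremental_units(fraction):,} units")
```

Even a modest hypothetical refresh fraction lands in the millions of units, and a quarter of PC households would push past 20 million.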

On the other hand, the same survey shows that more households had smartphones (90%) than PCs (81%). Given smartphones' mobility and utility, might we see local generative AI flourish on phones before laptops and desktops? My wife thinks so!

Furthermore, smartphone players like Apple and Google are vertically integrated and can deliver the software and hardware needed for local AI. In contrast, Intel is beholden to Microsoft for use cases incorporating AI into the operating system.

So, even if local AI use cases materialize, it’s unclear if they will first come to PCs.

The Majority Aren’t Using Gen AI Yet

While most US households have PCs, far fewer Americans use generative AI.

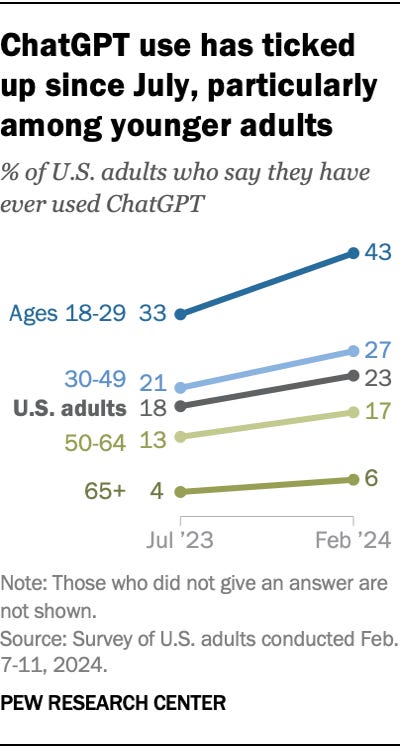

A Pew Research study from February 2024 found that fewer than 1 in 4 Americans have ever used ChatGPT. Ever.

Unless an amazing PC feature or app is launched soon, it’s safe to assume the majority of Americans aren’t going to rush out to buy an AI PC if they haven’t even tried ChatGPT.

Some Are, But Not Many

What about the early adopters who do use Generative AI?

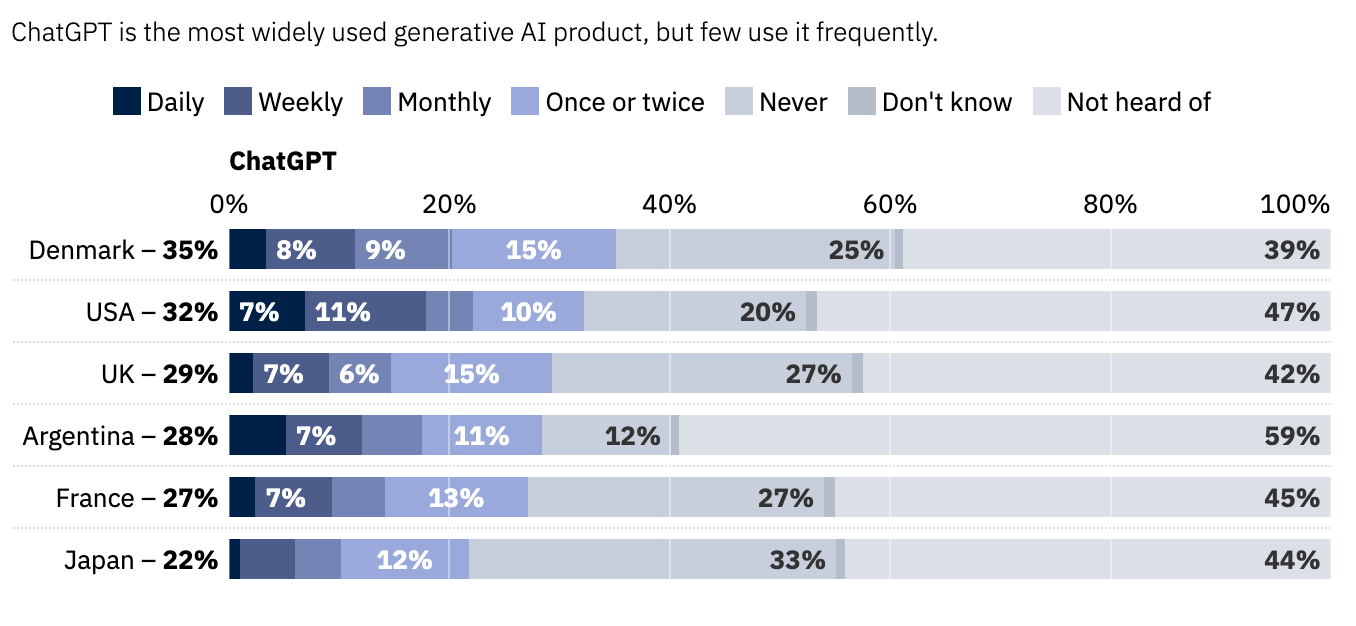

Reuters + YouGov conducted an online study of 2,000 Americans in May 2024 and found that 18% of respondents use ChatGPT weekly, and only 7% use it daily. Even if ChatGPT could run locally on an AI PC (which it can’t), would that be enough to drive widespread AI PC adoption?

This adoption rate is too low. Even if Pat were betting on the darling of Generative AI (ChatGPT) to drive a 2024 refresh cycle, we'd be talking about volumes in the mere tens or hundreds of thousands. To reach volumes that matter, Pat must also assume adoption rates will quickly ramp.

Can Gen AI Even Fit on PCs?

Lastly, the most intelligent models are too big to run locally on a single PC.

Yes, exciting research is happening to bring small LLMs to low-power, compute-constrained edge devices. But that’s a far cry from commercial use cases that will sell PCs to typical folks today.

Recently demonstrated chain-of-thought models like OpenAI's o1 might save the day. Although o1-mini's size is undisclosed, it suggests we might finally move away from the old strategy of dumbing down large world models to fit on PCs and instead pursue small, efficient reasoning engines.

In other words, the value of a dumbed-down world knowledge model that spits out the first answer it thinks of is dubious, but a small agentic assistant tailored to PC workflows seems promising. The latter can always call out to bigger and smarter cloud-based world models.

Of course, this promising idea of “reasoning-engine models on the PC” only became possible with the revelation of o1, which happened nine months into Pat’s “year of the AI PC.” 🤔

Data-Driven Conclusion

So here’s what we’ve established with a cursory glance at relevant data:

Most American households (81%) have laptops or desktops

Even more households (90%) have smartphones

Most Americans have never used ChatGPT, and many have never even heard of ChatGPT

Fewer than 10% of Americans find enough value in ChatGPT to use it daily

ChatGPT runs in the cloud, not on the PC

This data casts significant doubt on the demand for AI PCs. Yet Intel drew the opposite conclusion, believing there’s pent-up demand for AI PCs that would manifest as soon as an AI PC hit the market.

What explains Intel’s folly? Desperation? Or was there something else Intel saw?

Banking on Windows

Intel was surely banking on Windows Copilot+ to create demand for local PC inference.

After all, consumers aren't always logical, and most buyers don't know the difference between local and cloud AI. If Microsoft released something useful and said, "You need an AI PC for this," many people would probably buy one, even if the feature didn’t need local AI but used the cloud instead.

Unfortunately, Windows Copilot+ was a flop. Ironically, the feature with the most buzz, Recall, was recalled due to security concerns.

As an aside, Microsoft Paint Cocreator looks pretty fun. It's not “run out and buy a new laptop” cool, but more like “if I had access to it, I’d use it to get some laughs on occasion” cool.

Regardless, even Microsoft’s Windows Copilot+ fails to demonstrate compelling features that would drive a widespread refresh cycle.

Yet Intel keeps believing in an AI PC supercycle.

Maybe they had a fundamentally incorrect view of the world based on prior experiences?

The Misguided “Centrino Moment”

When Intel started talking about the AI PC, I appreciated their hustle. Here was my thinking: There’s no real use case here, but Intel is trying to create a market and see if anything materializes in the interim. Good on them. Sometimes, companies get lucky. Maybe developers will see the potential before consumers and create AI PC apps that drive demand.

However, as 2023 unfolded, it became clear to me that Intel wasn’t just hopeful this future would come true but absolutely convinced it would happen—in 2024, no less!

I suspect Intel's conviction regarding the imminent future of AI PCs comes from the false premise that the AI PC age will mirror Intel’s Centrino period from the early 2000s. An internal echo chamber likely reinforced this faulty conclusion.

From the “AI Everywhere” event,

Pat G: I like to say it's like a Centrino moment. Now for those of you who don't remember Centrino, it helped to create WiFi. What happened when we created WiFi? Pretty much nothing for three years. And then – Centrino. A platform that drove in volume into the industry, and all of a sudden, every coffee shop, every hotel room, and every business needed to become connectivity, internet-enabled. New form factors started to emerge as a result. And we think of the AI PC as that kind of moment, driving this next generation of the platform experience. Andy Grove called the PC the ultimate Darwinian device. The next major evolutionary step of that device is underway today. It's called the AI PC.

This Centrino moment analogy is wrong.

In the early 2000s, on-the-go internet activities were out of reach until the availability of WiFi-enabled laptops and hotspots in public spaces increased.

As Intel aptly stated in a 2003 press release:

With notebooks based on Intel Centrino mobile technology, a business traveler can check office email or read the hometown newspaper online while waiting for a flight at the airport, and still have battery life left to watch a DVD movie on the plane ride home. A real estate agent can check the latest listings wirelessly while dining with prospective home-buyers. A financial planner can check the market and activate client orders while at a seminar without compromising on the performance necessary to run the most demanding office applications. Students can register for next semester's classes or seek a part-time job from the college library, all on a sleek, light-weight system that won't drag them down.

Think back to 2003. People were browsing the web and sending emails but were chained to their desks. Centrino helped change that, allowing users to go online wherever they wanted.

If the AI PC indeed parallels Centrino, the generative AI user experience must somehow be restricted today, requiring the AI PC to step in and unlock it. Customers would then rush to buy the AI PC because it enhances the user experience, making generative AI more accessible and valuable.

Unfortunately, that’s not the case with AI PCs.

Local inference will not drive significant usage, as PC inference does not unlock a better customer experience. At least, not yet.

What are the constraints of the Generative AI user experience?

First, it’s too slow. AI PCs won’t solve slow LLM inference. Compared to the best AI models running on large GPU clusters in the cloud, AI PCs run inference on computationally weak CPU/GPU/NPUs using much smaller models.

The AI PC is a degraded experience.

Second, LLMs are too foolish.

OpenAI’s o1 reasoning engine, launched last week, is a promising path to overcoming LLMs’ perceived foolishness.

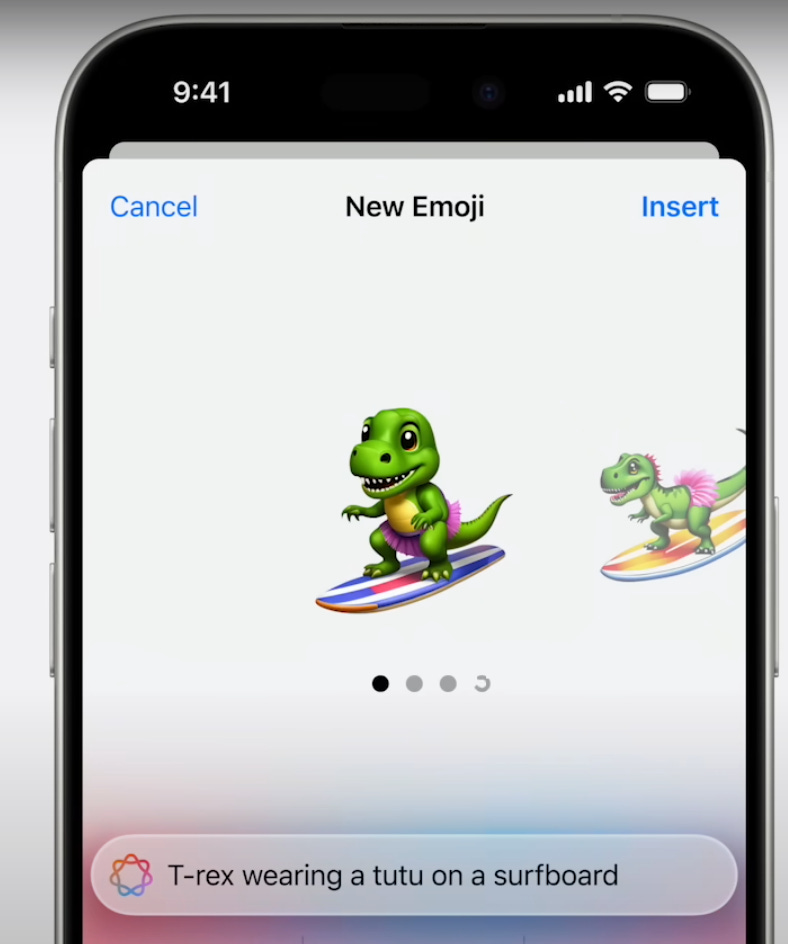

Apple Intelligence also demonstrates the usefulness of local, small models fine-tuned for various use cases that don’t require world knowledge. Take Genmoji, for example.

Features like Genmoji wrap small models in user experiences that matter, removing the perception of foolishness.

Similar features powered by small models don’t exist on Windows PCs yet.

Recent revelations like Apple Intelligence and o1 should open PC app developers' eyes to what’s possible, so the future of PC inference should get more interesting soon. Of course, building these features takes time. And, by the way, o1 and Apple Intelligence are proprietary. Progress might be limited until developers have small reasoning engines from the likes of Meta.

Let’s pause to appreciate Apple’s vertical integration, which gives it a distinct edge in driving the adoption of on-device AI. This contrasts with Intel’s dependence on Microsoft and ISVs to create and deploy compelling generative AI experiences.

Even More Folly

In addition to the false Centrino premise, Pat shared additional mistaken beliefs during the 2023 Intel Innovation Day:

Pat G: Why is [AI inference] gonna happen at the edge? First is the laws of economics, my device versus a rented cloud service I pay for. Second is physics – round trip to the cloud, or do it right here immediately? And finally, the laws of the land, my data, all the geographies that we operate in, we're gonna operate locally on models on the edge and on the device.

Pat says running locally will be cheaper, faster, and more secure than the cloud.

Granted. But for what use cases?

Faster

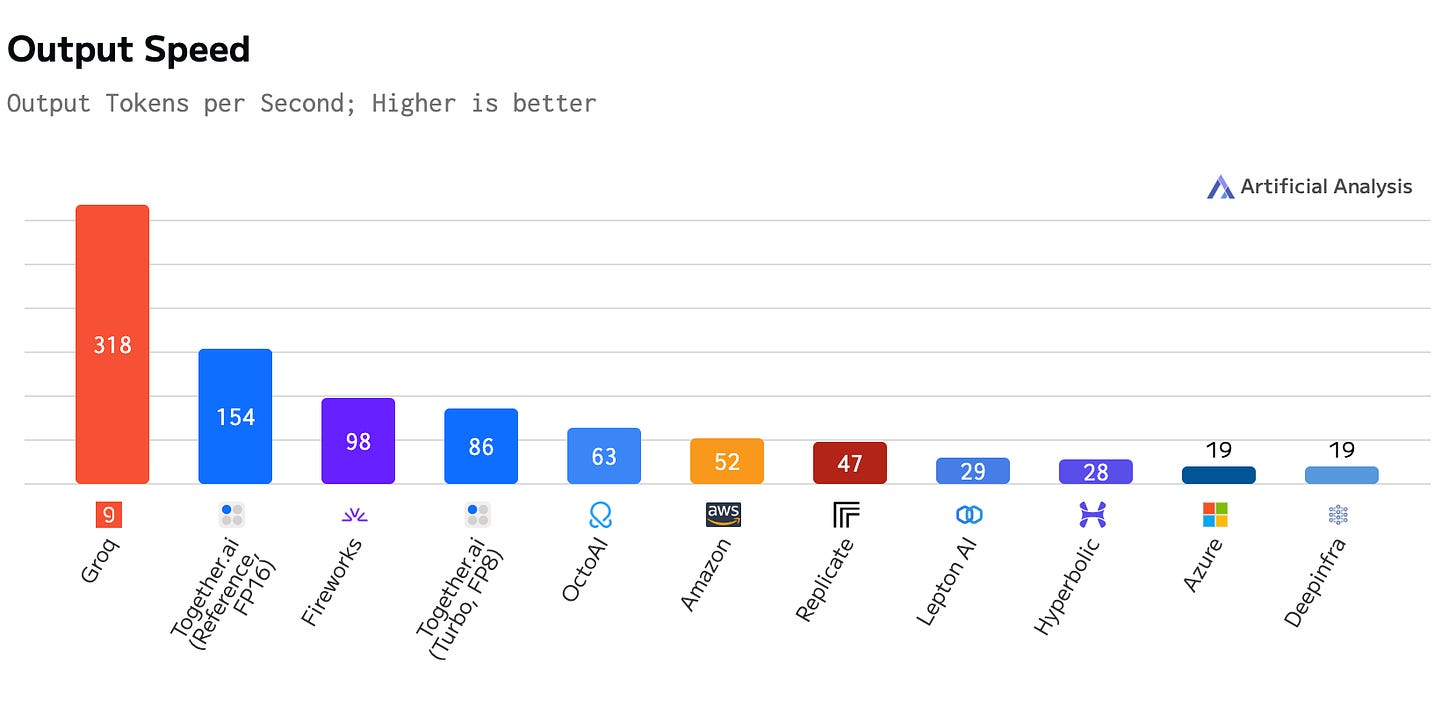

Suppose a PC user wants to run an intelligent world model locally. Running it on Intel’s NPU or GPU generally won't be faster than a cloud AI accelerator, even though local inference avoids the network round trip to the cloud.

The user must trade away intelligence to recapture speed, relying on quantization and smaller models just to fit on a PC.

Here’s an example of Llama 70B with 4-bit quantization running on the iGPU of an Intel Core Ultra 5 (Meteor Lake). It achieves 1.4 tokens per second. Sure, the example could probably be optimized to squeeze out a few more tokens per second, but the point stands when compared to cloud APIs serving Llama 70B at 16-bit and 8-bit precision.
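A rough memory calculation shows why 70B is such a tight squeeze. The sketch below is a simplification under stated assumptions: it counts only the weights (no KV cache, activations, or the scale factors that 4-bit formats add), uses decimal gigabytes, and, for the last line, assumes single-user decoding is memory-bandwidth-bound so that every weight is read once per generated token. Under that assumption, the 1.4 tokens per second above corresponds to roughly 49 GB/s of weight traffic. Treat these as ballpark estimates, not measurements.

```python
# Rough weight-memory sizing for a 70B-parameter model at different precisions.
# Simplifying assumptions: weights only (no KV cache, activations, or
# quantization scale/zero-point overhead); decimal gigabytes (1 GB = 1e9 bytes).

PARAMS = 70e9  # Llama 70B parameter count, rounded


def weight_gb(bits_per_weight: int, params: float = PARAMS) -> float:
    """Approximate size of the model weights in decimal GB."""
    return params * bits_per_weight / 8 / 1e9


def implied_bandwidth_gbps(tokens_per_second: float, bits_per_weight: int) -> float:
    """Effective weight-read bandwidth if each generated token streams all weights once.

    Assumes memory-bandwidth-bound, batch-size-1 decoding (a common
    simplification), so this is an estimate, not a measurement.
    """
    return weight_gb(bits_per_weight) * tokens_per_second


for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_gb(bits):.0f} GB")

# 1.4 tokens/s from the Meteor Lake iGPU example above, with 4-bit weights.
print(f"Implied weight traffic: ~{implied_bandwidth_gbps(1.4, 4):.0f} GB/s")
```

Even the 4-bit weights (~35 GB) exceed the memory of many laptops, while the 16-bit weights (~140 GB) that cloud APIs can serve are far beyond what a laptop holds.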

Cheaper

Will it be cheaper to run inference locally?

It’s reasonable to envision a future where large companies like Microsoft push as much generative AI inference to the edge as possible. Might as well let the user pay for the electricity and compute.

But that’s not a 2024 scenario. I have yet to encounter any examples of software pricing that nudges users towards offloading inference from the cloud to their PC. Maybe it's because companies are still in the "we need to increase the utilization of all those GPUs we bought" phase. 😅

Also, the definition of cheaper is fuzzy. Microsoft has already spent the CapEx on those huge GPU clusters, so they’re really only saving OpEx when pushing inference to the customer.

Ironically, Microsoft's challenges in scaling its AI infrastructure—namely, the shortage of AI accelerators and data center capacity—could be the catalyst for moving AI inference to the edge. Instead of Microsoft’s CFO driving a cost-optimization shift, the technical constraints they face in meeting consumer demand may force it upon them.

Evidence of Intel’s Mistakes

Unfortunately, Intel was banking on the AI PC to increase CPU revenue in 2024-2026.

Intel erroneously anticipated that 2024 would usher in AI PC upgrades, boosting sales volume. It also assumed consumers would be willing to pay a premium for these AI-enhanced devices, driving up average selling prices (ASPs). Higher volumes and higher ASPs would, in turn, drive higher client CPU revenue.

Let’s check the public record for evidence.

Q2 2023 Earnings Call

Intel’s Q2 2023 earnings call in late July 2023 was the first earnings call during which Pat discussed the AI PC. He briefly mentioned the AI PC and introduced the Centrino analogy.

When asked to explain during the Q&A, Pat gave a much longer answer in which he argued that local inference is absolutely necessary and advocated for an increased PC refresh rate and expanding TAM.

Pat Gelsinger: Our client business exceeded expectations and gained share yet again in Q2 as the group executed well, seeing a modest recovery in the consumer and education segments, as well as strength in premium segments where we have leadership performance. We have worked closely with our customers to manage client CPU inventory down to healthy levels. As we continue to execute against our strategic initiatives, we see a sustained recovery in the second half of the year as inventory has normalized. Importantly, we see the AI PC as a critical inflection point for the PC market over the coming years that will rival the importance of Centrino and Wi-Fi in the early 2000s.

And here’s the follow-up question from Ben Reitzes.

Ben Reitzes -- Melius Research -- Analyst

Yeah. Thanks a lot. I appreciate the question. Pat, you caught my attention with your comment about PCs next year or with AI having a Centrino moment.

Do you mind just talking about that? And what -- when Centrino took place, it was very clear we unplugged from the wires and investors really grasped that. What is the aha moment with AI that's going to accelerate the client business and benefit Intel?

Such a great question. Why AI PC, and why Intel?

Pat Gelsinger -- Chief Executive Officer

Yes. And I think the real question is what applications are going to become AI-enabled? And today, you're starting to see that people are going to the cloud and goofing around with ChatGPT, writing a research paper and that's like super cool, right? And kids are, of course, simplifying their homework assignments that way. But you're not going to do that for every client becoming AI-enabled. It must be done on the client for that to occur, right? You can't go to the cloud. You can't round trip to the cloud. All of the new effects, real-time language translation in your Zoom calls, real-time transcription, automation, inferencing, relevance portraying, generated content and gaming environments, real-time creator environments being done through Adobe and others that are doing those as part of the client, new productivity tools being able to do local legal brief generations on clients, one after the other, right, across every aspect of consumer, of developer and enterprise efficiency use cases. We see that there's going to be a raft of AI enablement and those will be client-centered. Those will also be at the edge.

You can't round trip to the cloud. You don't have the latency, the bandwidth or the cost structure to round trip, let's say, inferencing in a local convenience store to the cloud. It will all happen at the edge and at the client. So with that in mind, we do see this idea of bringing AI directly into the client immediately, right, which we're bringing to the market in the second half of the year, is the first major client product that includes native AI capabilities, the neural engine that we've talked about.

Pat says there will be a lot of AI use cases for PCs, and they can’t be enabled by cloud inference. It’s not true, but carry on. Why Intel?

And this will be a volume delivery that we will have. And we expect that Intel, as the volume leader for the client footprint, is the one that's going to truly democratize AI at the client and at the edge.

Intel believes it will win AI PCs because it has the largest PC market share. OK. There is no differentiation, but if the TAM expansion happens, it’s reasonable to assume that Intel will capture its fair share.

And we do believe that this will become a driver of the TAM because people will say, "Oh, I want those new use cases. They make me more efficient and more capable, just like Centrino made me more efficient because I didn't have to plug into the wire, right? Now, I don't have to go to the cloud to get these use cases.

What? PC-based LLM inference will make users more efficient and capable?

I'm going to have them locally on my PC in real time and cost effective.

Pat makes the case for an optimized future where inference runs locally to overcome costs and networking latency. But we’re far from that future.

Let’s talk cost optimization.

As we saw with SaaS and cloud computing, inference adoption will outrun cost optimization. Companies will first try to find product-market fit with inference-enabled features, and only after these features take off will companies be incentivized to optimize cost, which might include pushing inference to the edge.

But again, edge inference will come with the trade-off of a less intelligent model. For the foreseeable future, users and companies will prefer the added network latency of cloud inference over the constraints and efforts of developing a small model at the edge.

So Pat already started on the wrong foot with this AI PC mirage—Centrino is not the correct analogy, and the edge-optimized future is not imminent.

We see this as a true AI PC moment that begins with Meteor Lake in the fall of this year.

For the record, Pat says the AI PC moment began in the fall of 2023.

Q3 2023 Earnings Call

In October 2023, Pat again mentions Centrino. He also describes how Intel has primed the channel ecosystem.

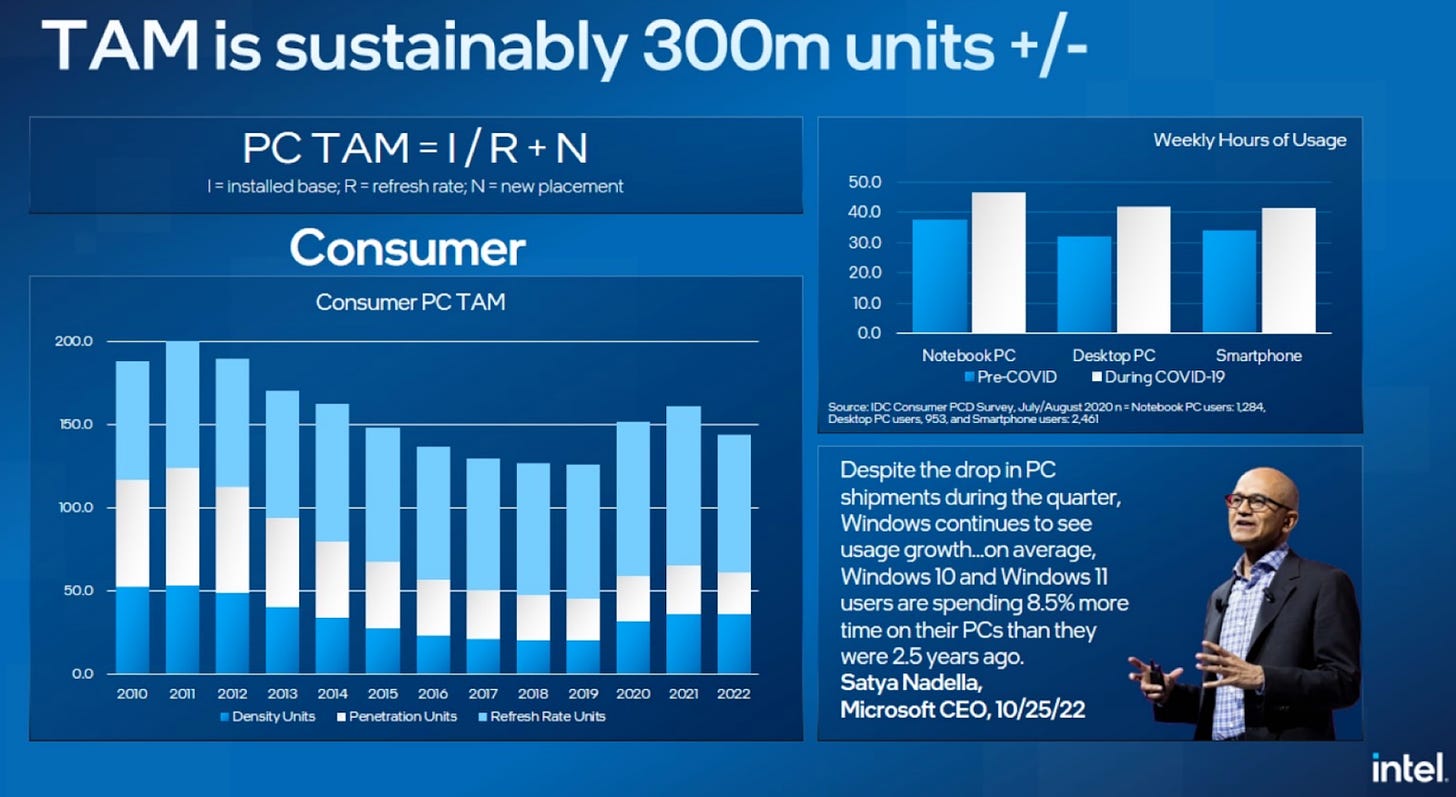

Pat G: We expect full-year 2023 PC consumption to be in line with our Q1 expectations of approximately 270 million units. In the near term, we expect Windows 10 end-of-service to be a tailwind, and we remain positive on the long-term outlook for PC TAM returning to plus or minus 300 million units. Intel continues to be a pioneer in the industry as we ushered in the era of the AI PC in Q3 when we released the Intel Core Ultra processor, codenamed Meteor Lake…

The arrival of the AI PC represents an inflection point in the PC industry not seen since we first introduced Centrino in 2003. Centrino was so successful because of our time-to-market advantage, our embrace of an open ecosystem, strong OEM partnerships, our performance silicon, and our developer scale. Not only are these same advantages in place today, they are even stronger as we enter the age of the AI PC.

We are catalyzing this moment with our AI PC acceleration program with over 100 ISVs already participating, providing access to Intel's deep bench of engineering talent for targeted software optimization, core development tools, and go-to-market opportunities.

Intel convinced its ISVs to participate. This is very important: maybe these software vendors will create meaningful experiences enabled by local AI. Small ISVs and indie developers might actually push forward on-device inference to avoid the cost of inference APIs.

We are encouraged and motivated by our partners and competitors who see the tremendous growth potential of the PC market.

Intel says its channel partners and even competitors believe in AI PC TAM expansion.

But what’s the one thing that’s missing from Pat’s earnings conversations? CUSTOMERS.

Ben Reitzes, the analyst with the “explain the Centrino analogy” question, gently presses Pat to talk about customer demand:

Ben Reitzes -- Melius Research -- Analyst

Yeah, thanks, John. I'm going to switch gears to PCs. Your comments there, you've talked about inventories normalizing. I just wanted to hear a little bit more detail on your confidence in that, you know, are you -- do you have confidence that the inventory builds are matching demand and how do you see that playing out next year? You know, it sounds like you think the TAM will grow back to 300M. So, just wanted some comments on that. Thanks.

Ben, if you’re reading this — thanks for politely asking if customers actually want these AI PCs.

Pat Gelsinger -- Chief Executive Officer

Yeah, you know, and three quarters in a row we've seen the client business being very healthy. Now, for us, you know our customers inventory levels are, you know, very healthy in that regard. So, we see our sell-in being matched by a sell-out for those customers. As we said, the 270 million consumption TAM is something we indicated earlier in the year.

And now we're seeing that play out exactly and here we are three weeks into the quarter and I'd say it's looking really good. So, our views and forecasts of our client business is very healthy for the fourth quarter.

Pat seems to confuse correlation with causation: sell-through matching forecasts doesn’t imply customers want AI PCs.

And we do think this idea of ushering in the PC generation will be a multi-year cycle, but we do think this will bring excitement into the category even starting with the Meteor Lake or the Core Ultra launch in Q4.

If it ever takes off, a multi-year adoption curve is reasonable. But starting in Q4? I thought it started already…

Then Pat threw in some tailwinds for good measure.

We also have some other incremental tailwinds with the Copilot launch from Microsoft coming up this quarter. We also have the Windows 10 end-of-service support coming up. Those are just incremental tailwinds that just build us more confidence in it.

Copilot is absolutely Intel's best chance to drive local inference use cases. Windows has much broader adoption than any specific app that offloads to the NPU, so improving a core Windows experience could drive much faster growth than a feature in any given app like Adobe Photoshop.

Windows 10 EOS will drive a refresh, but not necessarily an AI PC refresh. Again, the act of buying a new laptop isn’t a sign that the customer is excited about the value of NPU- and GPU-enabled generative AI.

Finally, we see Pat’s inner engineer come out when he answers the question, “Do customers want this?” with “Yes, our future chips will be awesome! Customers will want it!” If you build it, they will come!

And finally, our roadmap is great and you know Meteor Lake looking good rapidly ramping what we have coming with Arrow Lake and Lunar Lake, and then Panther Lake in the future. So, you know, every aspect of this business is demonstrated health maturity momentum and great opportunity for tomorrow.

I’ve made the same mistake and learned the hard way: building it doesn’t mean customers will come.

Q4 2023 Earnings Call

It’s January 2024. It’s been six months since the first mention of AI PCs on earnings calls. Once again, Pat gives zero evidence of an AI PC supercycle. But these things take time, right?

In Q4, we ushered in the age of the AI PC with the launch of Intel Core Ultra, representing our largest architectural shift in decades -- the core Ultra is the most AI-capable and power-efficient client processor with dedicated acceleration capabilities across the CPU, GPU, and Neural Processing Unit or NPU. Ultra is the centerpiece of the AI PC systems that are capable of natively running popular 10 billion parameter models and drive superior performance on key AI-enhanced applications like Zoom, Adobe, and Microsoft.

We expect to ship approximately 40 million AI PCs in 2024 alone with more than 230 designs from ultrathin PCs to handheld gaming devices to be delivered this year from OEM partners, Acer, Asus, Dell, HP, Lenovo, LG, MSI, Samsung Electronics, and others.

Selling 40 million PCs with NPUs doesn’t imply 40 million customers wanted local generative AI.

The Core Ultra platform delivers leadership AI performance today. With our next-generation platforms launching later this year, Lunar Lake and Arrow Lake tripling our AI performance. In 2025 with Panther Lake, we will grow AI performance up to an additional 2x. NEX is well-positioned to benefit from the proliferation of AI workloads on the edge where our market-leading hardware and software assets provides improved latency, reliability, and cost.

Ben Reitzes returned with another “Are you sure customers want this?” question.

Ben Reitzes -- Melius Research -- Analyst

Yeah. Thanks, John. Could you talk about a bit more about the client market? There was -- you mentioned that corporate, you said some strength. And Dell had said there was some weakness. And heading into the first quarter, I was -- can you talk about the revenues on client and what makes you so confident that it's really going to pick up? Thanks.

Can you guess Pat’s reply?

Pat Gelsinger -- Chief Executive Officer

Yeah. So, you know, as we look at the market year on year, we expect it to be a few points bigger than it was last year. So, last year, it was 270. This year, a couple of points higher than that. I think that's consistent with the various market forecasters that we have. Our market share position is very stable. We had good execution on market share through last year, and the product line is better this year with a number of tailwinds like we said. So, overall, we think it's going to be a very solid year for us in our client business.

Obviously, as we start the year, everybody is -- I'll say, managing through what their Q1 outlook looks like even as they expect to see stronger business as we go through the year. I'd also comment, Ben, that some of these tailwinds really only start to materialize as you go into second quarter and second half. AI PC is just ramping right now. The Windows 10 EOS goes into effect and customers are starting to look at their post-COVID refreshes.

So, a lot of those benefits materialize as you go through the year, but our position in gaming, commercial, very strong for us overall. And I'll tell you, we're just seeing a lot of excitement for the AI PC. I described this the Centrino moment, the most exciting category-defining moment since WiFi was introduced two-plus decades ago. So, we do think that is going to bring a multiyear cycle of growth.

Great ISVs, great new use cases, and a product line that is clearly leading the industry establishing this category.

Uh-oh. Intel’s channel partners like Dell signal market weakness, but Pat’s answer is still, “The forecasts are good and our products are good, so the AI PC is going to happen!”

And then the Centrino Kool-Aid again.

Maaaaybe this AI PC thing is a mirage?

Q1 2024 Earnings Call

It’s now April 2024. The AI refresh cycle must finally be ramping like Intel predicted, right?

Pat Gelsinger -- Chief Executive Officer

Thanks, John, and welcome, everyone. We've reported solid Q1 results, delivering revenue in line and EPS above our guidance as we continue to focus on operating leverage and expense management. Our results reflect our disciplined approach on reducing costs as well as the steady progress we are making against our long-term priorities. While first-half trends are modestly weaker than we originally anticipated, they are consistent with what others have said and also reflect some of our own near-term supply constraints.

Nope. Q1 is weaker than forecasted. No AI PC boom. Pat says it’s just supply constraints, not a demand problem.

We continue to see Q1 as the bottom, and we expect sequential revenue growth to strengthen throughout the year and into 2025, underpinned by: one, the beginnings of an enterprise refresh cycle and growing momentum for AI PCs; two, a data center recovery with a return to more normal CPU buying patterns and ramping of our accelerator offerings; and three, cyclical recoveries in NEX, Mobileye, and Altera.

Upward and Onward! The AI PC era is definitely happening, and it's starting now!

Within Client, we are defining and leading the AI PC category. IDC indicates the overall PC market is now expanding. And as stated earlier, as standards emerge and applications begin to take advantage of new AI-embedded capabilities we see demand signals improving, especially in second half of the year, helped by a likely corporate refresh.

We aren’t making this up—IDC says the expansion is happening! We foresee apps driving demand soon. If you build it, they will come! Corporate refresh incoming!

Our Core Ultra ramp, led by Meteor Lake, continues to accelerate beyond our original expectation with units expected to double sequentially in Q2, limited only by our supply of wafer-level assembly, improving second half Meteor Lake supply and the addition of Lunar Lake and Arrow Lake later this year will allow us to ship in excess of our original $40 million AI PC CPU target in 2024.

Client revenue weakness is on us. We’re supply-constrained, but we’re going to fix that. Tally ho!

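By the way, that "$40 million" is a transcription slip: it's 40 million units, the same AI PC target Pat gave on the Q4 call. Even so, why all this hype if 2024 AI PC volume looks like an ordinary refresh? Intel must be predicting a massive increase in adoption in 2025 to achieve the billions of incremental revenue it needs.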

Q2 client revenue is constrained by wafer-level assembly supply, which is impacting our ability to meet demand for our Core Ultra-based AI PCs. We do expect sequential growth from Mobileye, NEX, and foundry services. As we look beyond Q2 guidance, we expect growth across all segments in the second half of the year, led by improved demand for general-purpose servers from both cloud and enterprise customers and increased Core Ultra assembly capacity to support a growing PC TAM driven by enterprise refresh and the AI PC.

Finally, let us remind you of our self-inflicted supply constraint. Don’t forget: The AI PC era is coming, and TAM expansion will follow!

While our boy Ben used his questions to probe Xeon’s continued decline and the Gaudi mirage, Ross Seymore carried the customer demand torch.

Ross Seymore -- Deutsche Bank -- Analyst

… I guess for my first question, I wanted to dive into the demand side of the equation. What was weaker in the near term than you had expected? …

Pat Gelsinger -- Chief Executive Officer

I'll just say the market was weaker. You've seen that in a number of others that have commented as well. So, I'll say somewhat across the board a bit. We've seen that cloud customers, enterprise across geos. So, I'll just say a bit weaker demand, right, we'll just say at the low end of seasonality Q1 to Q2 that we saw. And as we go into the second half of the year, we're engaging deeply with our customers today, our OEM partners, and we just see strength across the board, right? Part of that is driven by our unique product position, some of it driven by the market characteristics and client, AI PC, and a second half Windows upgrade cycle, we believe, underway and Core Ultra is hot.

Pat just shrugs and says “meh, the whole market was weak, but the AI PC is still coming!”

I’m starting to wonder if Intel talks to end consumers. There’s talk of customers, which might mean OEMs and enterprises. But does Pat stop into the laptop section of Best Buy and Costco in non-coastal states like Indiana and Missouri to chat with customers about their purchasing decisions? I’m guessing not.

At this point in the AI PC journey, I’ve personally transitioned from “Good on Pat for trying to create a market” to “Dang. How long will he be distracted by this AI PC mirage?”

I get that Intel needs revenue to fund 5N4Y and they might be getting desperate.

Yet no matter how much time passes, the AI PC relief never materializes. This mirage is stuck on the horizon.

Srini Pajjuri asked a good question during the Q&A: why does AI PC matter to Intel?

Srini Pajjuri -- Raymond James -- Analyst

Thank you. My question is on the client side. I think, Pat, you mentioned something about supply constraints impacting your 2Q outlook. If you could provide some color as to what's causing those supply constraints? And when do you expect those to ease as we, I guess, go into the second half?

And then in terms of your AI PCs, I think you've been talking about $40 million or so potentially shipping this year. Could you maybe put that into some context as to how it actually helps Intel? Is it just higher ASPs? Is it higher margin? I would think that these products also come with higher costs. I just want to understand how we should think about the benefit to Intel as these AI PCs ramp.

Let’s go Srini!

Pat Gelsinger -- Chief Executive Officer

Yeah. Thank you. And overall, as we've seen, this is a hot product. The AI PC category, and we declared this as we finished last year, and we've just been incrementing up our AI PC or the Core Ultra product volumes throughout. We're meeting our customer commitments that we've had, but they've come back and asked for upside on multiple occasions across different markets. And we are racing to catch up to those upside request, and the constraint has been on the back end. Wafer-level assembly, one of the new capabilities that are part of Meteor Lake and our subsequent client products. So, with that, we're working to catch up and build more wafer-level assembly capacity to meet those.

How does it help us? Hey, it's a new category. And that new category of products will generally be at higher ASPs as your question suggests. But we also think it's new use cases and new use cases over time, create a larger TAM that creates an upgrade cycle that we're seeing. It creates new applications, and we're seeing essentially every ISV -- AI behind their app, whether it's the communications, capabilities of Zoom and Teams for translation and contextualization whether it's new security capabilities with CrowdStrike and others finding new ways to do security on the client or whether it's creators and gamers taking advantage of this.

So, we see that every PC is going to become an AI PC over time. And when you have that kind of cycle underway, Srini, everybody starts to say, "Oh, how do I upgrade my platform?" And we even demonstrated how we're using AI PC in the Intel factories now to improve yields and performance inside of our own factories. And as I've described it, it's like a Centrino moment, right where Centrino ushered in WiFi at scale. We see the AI PC ushering in these new use cases at scale, and that's going to be great for the industry.

But as the unquestioned market leader -- the leader in the category creation, we think we're going to differentially benefit from the emergence of the AI PC.

Well, there ya go: Pat laid his cards on the table.

There will definitely be an upgrade cycle with higher ASPs and a larger TAM. Since Revenue = Volume * ASP, the AI PC expansion will cause revenue to explode. Better still, every PC ultimately becomes an AI PC. And Intel will be there to “differentially benefit” from this fount of gushing revenue.

If it materializes.
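To make the arithmetic behind that bet concrete, here is a minimal Python sketch of Revenue = Volume × ASP. The function names and any numbers you feed it are hypothetical, not Intel's. The model assumes AI PC units first replace units that would have shipped in an ordinary refresh anyway, and only units beyond that count as TAM expansion.

```python
# Minimal sketch of Revenue = Volume * ASP for a client CPU line.
# All names and inputs are hypothetical; none of these figures come from Intel.


def revenue(units: float, asp: float) -> float:
    """Revenue is units shipped times average selling price."""
    return units * asp


def ai_pc_uplift(refresh_units: float, ai_units: float,
                 base_asp: float, ai_asp: float) -> float:
    """Incremental revenue from AI PCs versus a plain refresh.

    ASSUMPTION: AI PC units replace refresh units one-for-one up to the
    refresh volume; only units beyond that are genuine TAM expansion.
    """
    baseline = revenue(refresh_units, base_asp)
    replaced = min(ai_units, refresh_units)      # units that would have sold anyway
    extra = max(ai_units - refresh_units, 0.0)   # true TAM expansion, if any
    with_ai = (revenue(refresh_units - replaced, base_asp)
               + revenue(replaced + extra, ai_asp))
    return with_ai - baseline


print(ai_pc_uplift(100, 100, 10, 10))  # same volume, same ASP
print(ai_pc_uplift(100, 100, 10, 11))  # same volume, higher ASP
```

With identical ASPs and no volume beyond the refresh, the uplift is zero. Revenue only "explodes" if customers buy more PCs than they otherwise would, or pay meaningfully more for each one.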

Surely, someone inside Intel saw through this folly and escalated their concerns up the ladder, right? After a year of AI PC hype but no proof, someone in Intel’s 100,000+ person organization raised their hand and asked some hard questions?

Q2 2024 Earnings Call

August’s Q2 2024 earnings call. It’s been a year since the first mention of the AI PC. Has Intel come to its senses?

Nope. Oh, and Intel’s wheels fell off.

Pat Gelsinger: Good afternoon, everyone. Q2 profitability was disappointing despite continued progress on product and process roadmaps…

Q2 profitability was below our expectations, due in part by our decision to more quickly ramp Core Ultra AI CPUs, as well as other selective actions we took to better position ourselves for future quarters, which Dave will address fully in his comments. We previously signaled that our investments will define and drive the AI PC category would pressure margins in the near term. We believe the trade-offs are worth it. The AI PC will grow from less than 10% of the market today to greater than 50% in 2026.

Wow. Margins are being destroyed to quickly ramp AI PCs that customers aren’t asking for, on the misguided belief that volume and ASPs will increase?

And what “trade-offs” are worth it?
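To put Pat's share forecast into units, here is a rough sketch. It assumes the PC TAM stays flat at about 270 million units a year, the 2023 figure Pat cited on the Q4 call (he expected 2024 to be only a couple of points higher). The flat TAM is a simplifying assumption, not a forecast.

```python
# Rough sketch: translate AI PC market share into annual unit volume.
# ASSUMPTION: PC TAM held flat at ~270M units/year (Pat's 2023 figure).
TAM_UNITS_M = 270


def ai_pc_units_m(share: float, tam_units_m: float = TAM_UNITS_M) -> float:
    """Millions of AI PCs implied by a given share of the annual PC TAM."""
    return share * tam_units_m


today_m = ai_pc_units_m(0.10)    # "less than 10% of the market today" (upper bound)
in_2026_m = ai_pc_units_m(0.50)  # "greater than 50% in 2026" (lower bound)

print(f"today: <{today_m:.0f}M, 2026: >{in_2026_m:.0f}M, "
      f"ratio: >{in_2026_m / today_m:.0f}x")
# prints: today: <27M, 2026: >135M, ratio: >5x
```

Under that assumption, Intel is betting on at least five times today's AI PC volume, roughly 135 million units a year, by 2026. That is the volume the near-term margin "trade-offs" are supposed to buy.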

We know today's investments will accelerate and extend our leadership and drive significant benefits in the years to come. Our efforts will culminate with the introduction of Panther Lake in second half '25. Panther Lake is our first client CPU on Intel 18A, a much more performant and cost-competitive process, which will additionally allow us to bring more of our tiles in-house, meaningfully improving our overall profitability.

Oh, those AI PCs are really expensive because they’re built using TSMC. This must be why Srini asked about AI PC costs on the last call? Wait a minute, Pat never addressed that part of the question…

Anyway, Intel really wants the AI PC wave to happen to drive revenues to the moon and help fund 5N4Y, but margins will be pretty terrible until they reach 18A. I guess Pat wasn’t kidding when he said he bet the company on 18A. It seems that both Foundry AND Product hang in the 18A balance.

Another important driver of improved financial performance is the cost reduction plan we announced today. This plan represents structural improvements enabled by our new operating model, which we are pulling forward to adjust to current business trends. Having separate financial reporting for Intel products and Intel Foundry clarifies and focuses roles and responsibilities across the company. It also enables us to eliminate complexity and maximize the impact of our resources. Taking a clean sheet view of the business is allowing us to take swift and broad-based actions beginning this quarter.

As a result, we expect to drive a meaningful reduction in our spending and headcount beginning in the second half of this year. We are targeting a headcount reduction of greater than 15% by the end of 2025, with the majority of this action completed by the end of this year. We do not take this lightly, and we have carefully considered the impact this will have on the Intel family. These are hard but necessary decisions.

This is tough news, and I feel for all those who are and will be impacted.

But man, is this the trade-off they were talking about?

The operational and capital improvements we are driving will be especially important as we manage the business through the near term. While we expect to deliver sequential revenue growth through the rest of the year, the pace of the recovery will be slower than expected, which is reflected in our Q3 outlook. Specifically, Q3 will be impacted by a modest inventory digestion in CCG with DCAI, and our more cyclical businesses of NEX, Altera, and Mobileye, trending below our original forecast. Our outlook reflects industrywide conditions without any meaningful change in our market share expectations.

Inventory digestion in client 😬 Intel overhyped AI PCs to their OEMs, but customers weren’t interested in AI PCs.

More concerning is that AMD’s client segment was up, and they think the back half of the year will continue to be positive. AMD mentioned no industrywide market softness, nor did they overhype AI PC. Instead, AMD’s client playbook seems to be the good ol’ focus on performance efficiency and TCO.

Back to Pat,

Let's now turn to Intel products. In our largest and most profitable business, CCG, we continue to strengthen our position and execute well against our roadmap. The AI PC category is transforming every aspect of the compute experience, and Intel is at the forefront of this category-creating moment.

The AI PC category is transforming every aspect of the compute experience?

Intel Core Ultra volume more than doubled sequentially in Q2 and is already powering AI capabilities across more than 300 applications and 500 AI models. This is an ongoing testament to the strong ecosystem we have nurtured through 40 years of consistent investments. We have now shipped more than 15 million Windows AI PCs since our December launch, multiples more than all of our competitors combined.

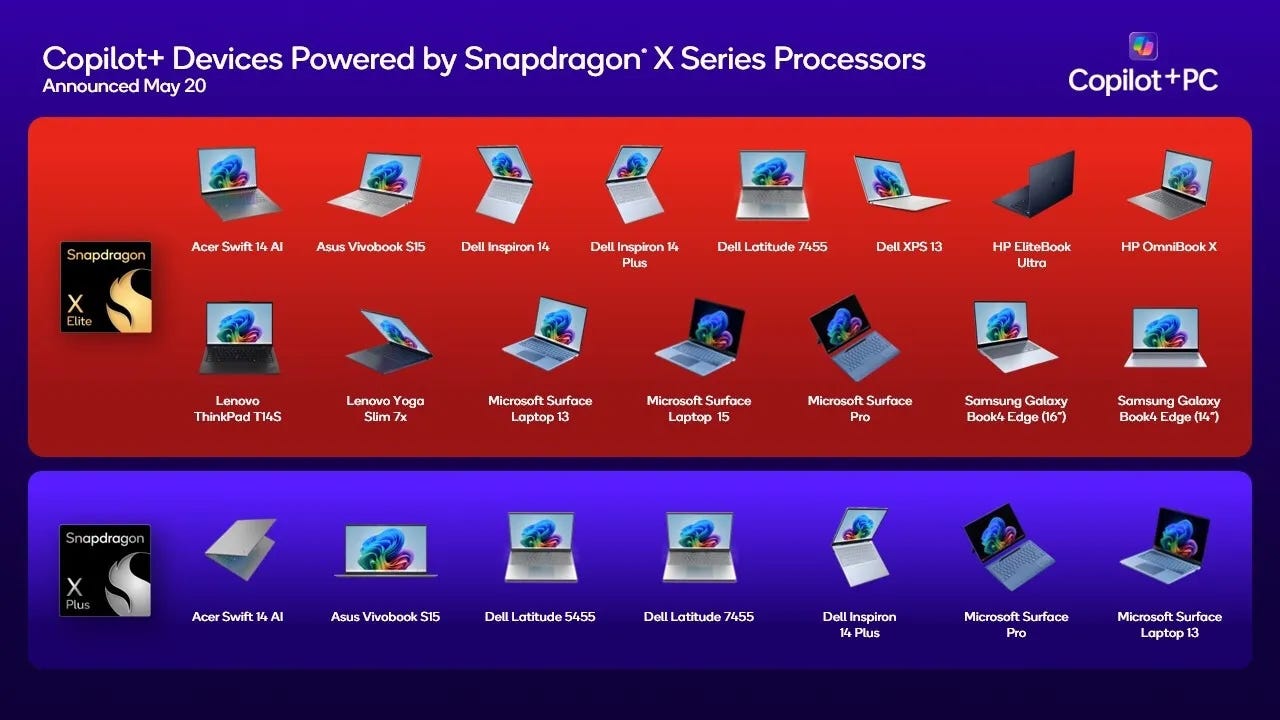

This last sentence is probably in response to Qualcomm beating Intel to the Copilot+ launch back in May. Sure, Intel shipped more Windows PCs with NPUs in them than the competition, as expected.

AMD has an NPU and therefore “AI PC” too, but they are downplaying the AI PC’s importance in their recent client success.

And we remain on track to ship more than 40 million AI PCs by year-end and over 100 million accumulative by the end of 2025.

Intel is undoubtedly shipping PCs with NPUs, but that doesn’t mean customers are running out to buy them for that purpose. (I know, I sound like a broken record.)

Back in January 2023, Intel showed us that refresh volume is something like 50 to 70 million units annually. Much respect there, that’s a lot of annual sales!

At the same time, if Intel sells 40 million AI PCs in 2024 and 60 million in 2025, is that any different than what they expected from the normal refresh rate? I think not.
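The comparison is simple enough to check in a few lines. This sketch uses only figures quoted in this post: the 40 million 2024 target, the "over 100 million" cumulative target by the end of 2025, and the 50 to 70 million annual refresh volume Intel showed in January 2023. Because Intel states its targets as "more than" and "over," treat the results as lower bounds.

```python
# Figures quoted in this post, in millions of units.
AI_PC_2024_M = 40              # "more than 40 million AI PCs by year-end"
AI_PC_CUMULATIVE_2025_M = 100  # "over 100 million accumulative by the end of 2025"
REFRESH_LOW_M, REFRESH_HIGH_M = 50, 70  # annual refresh volume (January 2023)


def exceeds_refresh(units_m: int) -> bool:
    """True only if a year's AI PC volume beats the top of the refresh range."""
    return units_m > REFRESH_HIGH_M


# Implied 2025 shipments = cumulative target minus the 2024 target.
ai_pc_2025_m = AI_PC_CUMULATIVE_2025_M - AI_PC_2024_M

for year, units in ((2024, AI_PC_2024_M), (2025, ai_pc_2025_m)):
    label = "beats refresh" if exceeds_refresh(units) else "within/below refresh"
    print(year, units, label)
```

Neither year clears the top of the refresh range: 40 million and then 60 million "AI PCs" look like an ordinary refresh with a new label.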

Lunar Lake, our next-generation AI PC, which achieved production release ahead of schedule in July, will be the next industry wide catalyst for device refresh. Lunar Lake delivers superior performance at half the power with 50% better graphics performance and 40% more power efficiency versus the prior generation. Lunar Lake delivers 3x more TOPS gen-on-gen with our enhanced NPU that will be the ultimate AI CPU on the shelf for the holiday cycle. Microsoft has qualified Lunar Lake to power more than 80 new Copilot+ PCs across more than 20 OEMs, which will begin to ship this quarter.

“Lunar Lake… will be the next catalyst for device refresh…” Pat keeps moving the goalposts. AI PC ramp isn’t quite here, but as soon as Lunar Lake launches…

Is the lack of AI PC adoption really because applications just needed 3x more TOPS?

CFO Dave Zinsner continued with the concerning remarks.

Dave Zinsner: Profitability was significantly more challenged versus our previous expectations with Q2 gross margin of 38.7% and EPS of $0.02. Weaker-than-expected gross margin was due to three main drivers. The largest impact was caused by an accelerated ramp of our AI PC product. In addition to exceeding expectations on Q2 Core Ultra shipments, we made the decision to accelerate transition of Intel 4 and 3 wafers from our development fab in Oregon to our high volume facility in Ireland, where wafer costs are higher in the near term.

I hope the accelerated Intel 4 and 3 ramp was for Xeon competitiveness or something and not simply to quickly ramp up AI PCs…

… The client business grew 9% year over year as the AI PC ramp contributed to higher volume and ASPs, partially offset by export license restrictions communicated during the quarter.

Correlation is not causation. I would love proof that AI PCs caused higher volume and ASPs.

Could it be that Intel charged higher ASPs due to client brand strength in the premium market? Maybe OEMs were willing to pay a premium for AI PCs and passed that markup on to customers, who simply accepted it regardless of any AI features.

… We expect gross margins to be moderately weaker sequentially with modest revenue growth and efficiencies offset by a continued ramp of new manufacturing nodes. While we will continue our work to improve near-term profitability, a heavier dependence on external wafers as we ramp AI PC products over the next several quarters will pressure gross margins. As a result of these factors, we expect revenue of $12.5 billion to $13.5 billion in the third quarter. At the midpoint of $13 billion, we expect gross margin of approximately 38% with a tax rate of 13% and EPS of negative $0.03, all on a non-GAAP basis.

First principles suggest nothing but pain and suffering for the next several quarters: Foundry is still lagging, Intel continues to pay extra for TSMC fabrication, and the AI PC mirage won’t materialize.
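For scale, here is the Q3 guidance arithmetic as a short sketch. The inputs come straight from the CFO's remarks: the $12.5 to $13.5 billion revenue range, roughly 38% non-GAAP gross margin at the midpoint, and Q2's 38.7%. The implied gross profit is a back-of-the-envelope derivation, not a figure Intel gave.

```python
# Q3 2024 guidance from the CFO's remarks (non-GAAP); revenue in $B.
REV_LOW_B, REV_HIGH_B = 12.5, 13.5
Q3_GROSS_MARGIN = 0.38   # "approximately 38%" at the midpoint
Q2_GROSS_MARGIN = 0.387  # Q2 actual

midpoint_b = (REV_LOW_B + REV_HIGH_B) / 2                       # guidance midpoint
gross_profit_b = midpoint_b * Q3_GROSS_MARGIN                   # implied, approximate
margin_change_pts = (Q3_GROSS_MARGIN - Q2_GROSS_MARGIN) * 100   # percentage points

print(f"midpoint ${midpoint_b:.1f}B, gross profit ~${gross_profit_b:.2f}B, "
      f"margin change {margin_change_pts:+.1f} pts")
# prints: midpoint $13.0B, gross profit ~$4.94B, margin change -0.7 pts
```

That is roughly $4.9 billion of gross profit on $13 billion of revenue, before operating expenses, in a quarter where Intel is guiding to a non-GAAP loss.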

As Pat discussed earlier, lower-than-anticipated revenue in the back half of the year is putting pressure on gross margins and earnings. We are taking aggressive actions to significantly reduce spending in response. These actions, while difficult, will help streamline the organization to improve productivity and make better decisions more quickly. Please note that we are likely to have charges associated with these actions, some of which may be included in our non-GAAP results.

The layoffs should have come way sooner. Intel is an IDM that needs to regain manufacturing leadership and maintain x86 share, and they should trim everything non-essential to that mission.

Dave continues,

The AI PC is a big winner for the company, and the early signals on the performance of Lunar Lake are very positive. We therefore intend to ramp that product significantly next year to meet market demand.

Hearing the CFO repeat the unfounded AI PC claims is a bit shocking.

For the umpteenth time, the AI PC is not a big winner for Intel.

The AI PC channel was willing to overpay and overorder next-gen CPUs, and now inventory digestion is occurring. There is no customer-driven AI PC demand.

While the part is great, it was originally a narrowly targeted product using largely external wafers and not optimized for cost. As a result, our gross margins will likely be up only modestly next year. The good news is the follow-on product, Panther Lake, is internally sourced on 18A and has a much improved cost structure.

Yet Intel is going to pay through the nose to ramp a product that customers aren’t demanding?!

They are giving up future margins by relying on TSMC to overbuild an AI PC future that has not and will not materialize soon?

And they’re doing that at the exact same time Intel Foundry is burning through capital to speedrun 5N4Y?

Look. Intel will sell CPUs for one reason: client brand strength. Not their NPUs.

Fortunately, Arrow Lake and Lunar Lake should benefit from TSMC’s process technology. Between brand strength and good performance, sales should be OK.

But let’s not confuse premium client sales with proof of AI PC demand.

Thankfully, on this call, analysts continued to poke holes in the AI PC mirage. Matt Ramsay asked why client would be flat to down.

Matt Ramsay -- Analyst

I guess my first question is on the client space. I think, Dave, you might have mentioned client flat to down in September.

I think your primary x86 competitor is going to be up double digits, or I think they mentioned above seasonal, however you quantify seasonal now. Maybe you could give us a little color there. There's lots of maybe noise in the system about ARM coming into the client market. I think that impact would be more modest relative to what you described.

But if you could kind of give us puts and takes there and how the inventory with OEMs might be affecting what you're guiding for us this September. Thanks.

Patrick P. Gelsinger -- Chief Executive Officer

We feel very good with our client position, the momentum we have in AI PC. Here we have a very healthy ecosystem as well. And I'll say as the large market share position that we have, we're very focused on selling and sell-through in the channel.

So, I believe our overall view of inventory levels, you know, where our market share is, we're actually quite comfortable in the indications that we've given. You know, some inventory sell through in the third quarter above seasonal in Q4. Overall, the TAM expansion, you know, is low single digit, even though we're seeing a lot of enthusiasm around the AI PC and further TAM expansion as we go into '25 as expected now broadly…

So, you know, a lot of reinforcement of the ecosystem, the leadership that we have on AI PC. And as Dave said, you know, Lunar Lake and Panther Lake only make our market position stronger. So, I think we're very comfortable, and every indication so far this quarter is very solid for those outcomes.

Remember how 2024 was the year of the AI PC? Pat admitted that TAM expansion has only been low single digits and pushed back the AI PC rocketship launch to 2025.

Intel shouldn’t be comfortable. They’re chasing a mirage pinned to the horizon, and they’re never going to reach it.

What Should Intel Do?

Intel mistakenly drank its own Kool-Aid. It probably doesn’t have the culture needed for someone to point out these mistakes to leadership.

I get how it would be easy to believe in this mirage, and it would be tough to about-face and admit it won’t happen anytime soon. Still, Intel's early AI PC investment wasn't in vain. It is well-positioned with a capable NPU. Of course, the competition has NPUs, too, and consumers will probably associate Gen AI features with Microsoft or app developers, not Intel.

Going forward, the most important thing Intel Product can do is create enough financial runway to see 18A and 14A to production by:

Ensuring CPUs ship on time and have competitive performance and efficiency

Leveraging client brand strength to maintain and grow ASPs

Carefully reinvesting in marketing and advertising to maintain client brand strength

It’s back to the basics.

Moreover, between now and 14A, Intel cannot bank on significant incremental revenue from:

AI PC TAM expansion

AI PC ASP growth

AI head node CPU growth

Gaudi

NEX (Network and Edge)

Foundry

Foundry is the best long-term play here, but it’s not going to bridge the gap between now and 2026/2027.

In the near term, there's only one guaranteed way to extend Intel's runway, and it's a painful one: further workforce reductions.

Sadly, I suspect the allure of the AI PC mirage delayed this necessary action.

Cuts are inevitable now as revenue will continue to dwindle in the coming quarters as the mirage stays pinned on the horizon.

Get Creative

If Intel wants to throw a Hail Mary that might generate client revenue ASAP, it has an ace up its sleeve: Intel is the only American company with a leading-edge USA-based foundry.

I’m not talking about asking for more money from the US government, which is slow and complicated.

Nor am I suggesting Intel can quickly create organic revenue through Foundry.

Instead, what if Intel creates an America-centric Foundry brand that appeals directly to end consumers?

Intel Made, Inside America.

Throw it in a Super Bowl ad and encourage Americans to go buy a new laptop and support America’s Intel. Play it again during March Madness. And again during the Masters.

Look, I’m clearly not a graphic designer or brand expert. But desperate times call for desperate measures, and maybe launching a Foundry brand is just what the doctor ordered. Nothing is holding Intel back from creating a Foundry brand right now.

A Foundry brand will eventually come in handy anyway, and it can ride the coattails of Intel’s existing “Intel Inside” brand strength.

Sure, the odds of this catalyzing a material PC refresh might be slim, but is that any different than the AI PC mirage?

At least Intel has full control over this one.