Nvidia and Microsoft - The Impact of LLM-Powered Copilots on Microsoft's Hardware Strategy

Running Microsoft 365 Copilot and GitHub Copilot on Nvidia GPUs ain't cheap. CapEx and OpEx will continue to rise, compelling Microsoft to explore a switch from GPUs to AI ASICs.

We’re in a mini-series examining which of Nvidia's biggest customers are most likely to migrate to competing GPUs or AI ASICs. The first article explored Amazon’s dependence on Nvidia.

We now shift our attention to Microsoft and their significant CapEx and OpEx spend due to LLM inference.

New subscribers can get up to speed with these previous posts: GPUs, ASICs, Nvidia’s Risk, and Amazon + Nvidia.

LLMs Make It Easier To Harness Computing

Computers have long excelled at enhancing human productivity through task automation and complex computation. Yet productivity gains are limited by the number of people who can actually harness these machines in their daily work.

Said plainly: the majority of people use computers, but few can program them to automate tasks specific to their daily life.

Large Language Models (LLMs) change this. They revolutionize human-computer interaction by giving machines the ability to understand and generate human language, reducing the friction of harnessing computers.

The barrier to entry with LLMs is human language, not computer language.

Microsoft CEO Satya Nadella gave a real example in Microsoft’s recent Q2 FY24 earnings call:

Nadella: Chat’s most powerful feature is — you have the most important database in your company, which happens to be the database of your documents and communications, is now queryable by natural language in a powerful way, right? I can go and say, “What are all the things Amy said I should be watching out for next quarter?” and it’ll come out with great detail.

LLMs promise to increase human productivity by making computing accessible, natural, and intuitive.

Appropriately, Microsoft is one of the largest buyers of Nvidia AI GPUs, which power modern LLMs:

Reportedly - Nvidia’s biggest H100 customers. Source: Omdia.

Microsoft Wants To Increase Human Productivity

Microsoft aims to amplify human productivity directly by incorporating LLMs into existing consumer products. Microsoft’s tools like Word and Excel are already productivity boosters, and a natural language interface promises to make them even more so. Let’s look more closely at the benefits of LLMs for these products to unpack the impact on Microsoft’s hardware strategy.

GitHub Copilot

GitHub Copilot is Microsoft’s first consumer offering that truly showcases the power of LLMs. Copilot is a code completion tool designed to assist developers by suggesting code, creating code from comments, completing partial snippets, and presenting alternative solutions.

GitHub tells the origin story on its own blog:

In June 2020, OpenAI released GPT-3, an LLM that sparked intrigue in developer communities and beyond. Over at GitHub, this got the wheels turning for a project our engineers had only talked about before: code generation.

“Every six months or so, someone would ask in our meetings, ‘Should we think about general purpose code generation,’ but the answer was always ‘No, it’s too difficult, the current models just can’t do it,’” says Albert Ziegler, a principal machine learning engineer and member of the GitHub Next research and development team.

But GPT-3 changed all that—suddenly the model was good enough to begin considering how a code generation tool might work.

This code generation idea turned into GitHub Copilot, which quickly gained traction and is still growing. From Microsoft’s recent Q2 FY24 earnings call,

GitHub revenue accelerated to over 40% year-over-year, driven by all-up platform growth and adoption of GitHub Copilot, the world’s most widely deployed AI developer tool.

We now have over 1.3 million paid GitHub Copilot subscribers, up 30% quarter-over-quarter.

And more than 50,000 organizations use GitHub Copilot Business to supercharge the productivity of their developers, from digital natives like Etsy and HelloFresh, to leading enterprises like Autodesk, Dell Technologies, and Goldman Sachs.

Accenture alone will roll out GitHub Copilot to 50,000 of its developers this year.

What's driving this widespread uptake?

Productivity enhancements.

In a controlled experiment with 95 professional developers, GitHub researchers found that those using GitHub Copilot finished a particular task in 1 hour and 11 minutes, compared to 2 hours and 41 minutes for the non-Copilot group.
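To put those two durations on a common footing, here is the arithmetic as a short Python sketch. It uses only the two times quoted above; the variable names are ours, not GitHub's.

```python
# Task completion times from GitHub's controlled experiment (95 developers).
copilot_minutes = 1 * 60 + 11    # Copilot group: 1 hour 11 minutes
baseline_minutes = 2 * 60 + 41   # non-Copilot group: 2 hours 41 minutes

time_saved = baseline_minutes - copilot_minutes
pct_less_time = time_saved / baseline_minutes   # fraction of baseline time saved
speedup = baseline_minutes / copilot_minutes    # how many times faster

print(f"Time saved: {time_saved} min ({pct_less_time:.0%} less time)")
print(f"Speedup: {speedup:.2f}x")
```

That works out to roughly 56% less time, or a bit better than a 2x speedup, for this one task.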

Furthermore, a global survey of more than 2,000 GitHub Copilot users revealed significant benefits beyond mere coding efficiency: 73% noted an improved ability to stay focused, 87% found repetitive tasks less burdensome, and 60% felt more fulfilled in their job.

Taken together, these results suggest Copilot boosts both productivity and well-being among developers.

Microsoft 365 Copilot

Not long after GitHub Copilot came the announcement of Microsoft 365 Copilot.

Why the same name? It’s admittedly confusing at first until you realize “Copilot” simply means “the ability to understand natural language and help you”.

Or in marketing-speak, “AI Assistant”.

Thus, Microsoft 365 Copilot is an AI assistant that can summarize emails in Outlook, generate content in Word, analyze data in Excel, and create PowerPoint slides. Users direct Copilot with natural language commands, as simple as “This meeting is nearly over and I didn’t pay attention. Did I miss anything important?”

Again, “Copilot” is Microsoft giving Office products the ability to understand and generate natural language to ease the way users engage with these tools.

Can you guess the benefits of Microsoft 365 Copilot?

You’re right — productivity!

70% of Copilot users said they were more productive, and 68% said it improved the quality of their work.

Overall, users were 29% faster in a series of tasks (searching, writing, and summarizing).

Users were able to get caught up on a missed meeting nearly 4x faster.

64% of users said Copilot helps them spend less time processing email.

85% of users said Copilot helps them get to a good first draft faster.

75% of users said Copilot “saves me time by finding whatever I need in my files.”

77% of users said once they used Copilot, they didn’t want to give it up.

Who wouldn’t love to spend less time with email and meetings?

Microsoft’s Desire To Reduce CapEx and OpEx, and What This Means for Nvidia

GitHub Copilot and Microsoft 365 Copilot are real examples of consumer products using generative AI to increase global workforce productivity. The scale is significant: Microsoft is the largest subscription consumer software company in the world, with over 400 million paid Office 365 seats. GitHub’s and Microsoft 365’s Copilots already account for millions of daily active users, and there’s no sign of a slowdown.

What company underpins Copilot?

Nvidia.

Specifically, every interaction with a Microsoft assistant involves LLM inference on Nvidia AI GPUs.

If just 10% of paid Office 365 users (40M) interact with Copilot 25 times per day across 20 working days per month, Microsoft must serve 20 billion inferences per month. At an assumed $0.005 per inference, that’s $100M in monthly costs, or $300M per quarter.

Twenty-five inferences per day might feel high or low depending on the employee; software engineers using GitHub Copilot might trigger inference tens or hundreds of times per hour while coding.
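Because the guesstimate is simple multiplication, it’s easy to rerun under different assumptions. Here is a minimal Python sketch; the function name and parameters are hypothetical, and every input (adoption rate, inferences per day, working days, cost per inference) is the assumption stated in the text, not a figure Microsoft has published.

```python
def monthly_inference_cost(paid_seats, adoption_rate, inferences_per_day,
                           workdays_per_month, cost_per_inference):
    """Back-of-envelope inference volume and bill; all inputs are assumptions."""
    active_users = paid_seats * adoption_rate
    monthly_inferences = active_users * inferences_per_day * workdays_per_month
    return monthly_inferences, monthly_inferences * cost_per_inference


# The article's scenario: 10% of ~400M paid seats, 25 inferences/day, 20 workdays.
inferences, monthly_cost = monthly_inference_cost(
    paid_seats=400_000_000,
    adoption_rate=0.10,
    inferences_per_day=25,
    workdays_per_month=20,
    cost_per_inference=0.005,
)
print(f"{inferences:,.0f} inferences/month")
print(f"${monthly_cost:,.0f}/month, ${3 * monthly_cost:,.0f}/quarter")

# A heavier-usage scenario, closer to a developer leaning on GitHub Copilot.
_, heavy_cost = monthly_inference_cost(400_000_000, 0.10, 100, 20, 0.005)
print(f"At 100 inferences/day: ${heavy_cost:,.0f}/month")
```

Cost scales linearly with every input, so halving the cost per inference halves the bill. That is exactly the lever cheaper, more efficient hardware would pull.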

Microsoft is running these massive inference workloads on Nvidia AI GPUs, but, as we’ve previously discussed, ASICs can offer better performance with lower energy use than GPUs on the workloads they are designed for. Even if our inference cost guesstimate is high, it still shows that reducing per-user inference operating costs could seriously add up at Microsoft scale.

Clearly, given Microsoft’s scale and forecasted growth, they have significant incentive to switch from Nvidia GPUs to AI ASICs to reduce upfront capital expenditures (CapEx) and ongoing operational expenses (OpEx).

CapEx and OpEx for Generative AI

We've yet to unpack the terms CapEx and OpEx on Chipstrat, so let’s dig in for a moment to define and illustrate these terms.

📖 CapEx refers to the funds a company uses to acquire, upgrade, and maintain physical assets such as property, industrial buildings, or equipment. The cost is spread over the equipment's useful life through depreciation (often loosely called amortization).

When it comes to generative AI workloads using Nvidia AI GPUs, CapEx encompasses the initial costs of acquiring the GPUs and any supporting hardware like server racks and networking equipment. Companies that choose to build or upgrade data centers to accommodate these GPUs would also classify the associated construction or upgrade expenses as CapEx.

CapEx enables an interesting accounting effect: a $4 billion AI chip purchase amortized over four years shows up as a $250 million expense each quarter for 16 quarters.
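The mechanics of that spreading are straight-line depreciation: divide the purchase price evenly across the periods of the asset's useful life. A minimal Python sketch, assuming the article's illustrative four-year life, quarterly periods, and no salvage value (real schedules depend on each company's accounting policies):

```python
def straight_line_schedule(purchase_price, useful_life_years, periods_per_year=4):
    """Expense a capital purchase in equal slices over its useful life.

    Assumes zero salvage value and quarterly reporting periods by default.
    """
    n_periods = useful_life_years * periods_per_year
    per_period = purchase_price / n_periods
    return [per_period] * n_periods


schedule = straight_line_schedule(4_000_000_000, useful_life_years=4)
print(f"{len(schedule)} quarters at ${schedule[0]:,.0f} each")
print(f"Total expensed: ${sum(schedule):,.0f}")
```

Stretching the useful life lowers the quarterly hit: the same $4B spread over six years would be about $167M per quarter.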

📖 OpEx encompasses the ongoing costs for running a business on a day-to-day basis. Unlike CapEx, OpEx expenses are incurred regularly and are deducted in the fiscal period during which they are incurred.

In terms of generative AI workloads, OpEx would include the costs related to powering and cooling the GPUs, software licenses, and maintenance. If the GPUs are rented from a cloud provider instead of owned outright, GPU rental fees are also included in OpEx. Additionally, OpEx covers the expenses associated with the labor needed to manage and operate the AI workloads.

Remember the $300M in quarterly inference costs we guesstimated? That’s OpEx, and it hits the books in the quarter it’s incurred. So a $4B AI CapEx purchase amortized over four years ($250M/quarter) shows up on the income statement at roughly the same scale as the hypothetical inference OpEx ($300M/quarter) for Microsoft’s Copilot users. Interesting.

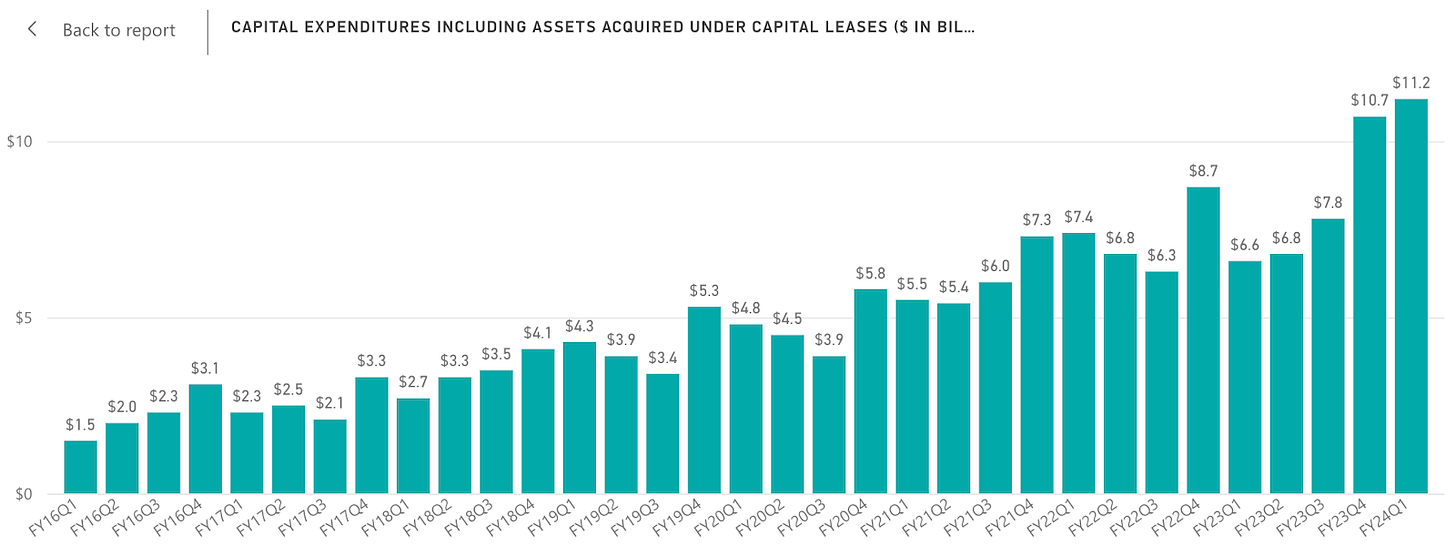

We can see the impact on Microsoft’s investor relations page, where CapEx spend is growing up and to the right.

The numbers shown represent the total capital expenditures for the entire company; the increasing expenditure on AI GPUs for Copilot inference workloads constitutes just one component of this overall investment.

What about OpEx? We did some guesstimating earlier, and with some work we could back out OpEx from published financials, but we’ll leave this as an exercise for the reader.

As a related aside, I live near Microsoft’s Iowa data centers, which power its LLM inference and consume a significant and increasing amount of energy.

Clearly, at Microsoft’s scale, infusing products with AI requires significant CapEx and OpEx spend. Given Microsoft's substantial investment of billions of dollars in Nvidia GPUs, it's obvious that the company is motivated to cut down on its Nvidia expenses.

How might Microsoft reduce CapEx? For starters, Nvidia charges a massive premium for its hardware.

From ExtremeTech,

it costs Nvidia roughly $3,320 for the chip and the components on the PCB or the bill of materials (BOM) for each unit. It then turns them around and sells to customers for between $25,000 and $30,000, depending on the quantity ordered.
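Using only the figures in that quote, a quick Python calculation shows the size of the premium. Note that this compares price to bill of materials alone; it ignores R&D, software, and other costs, so it is not Nvidia’s reported gross margin.

```python
bom_cost = 3_320           # ExtremeTech's estimated per-unit bill of materials, USD
prices = [25_000, 30_000]  # ExtremeTech's quoted selling-price range, USD

results = {}
for price in prices:
    markup = price / bom_cost                     # price as a multiple of BOM
    share_above_bom = (price - bom_cost) / price  # fraction of price above BOM
    results[price] = (markup, share_above_bom)
    print(f"${price:,}: {markup:.1f}x BOM, {share_above_bom:.0%} of price above BOM")
```

In other words, roughly 87 to 89 cents of every dollar of selling price sits above the bill of materials.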

In the near term, Microsoft could reduce its Copilot CapEx by switching to a cheaper alternative. AMD GPUs may fit the bill, as they are rumored to be priced lower than Nvidia’s. Of course, AMD must also overcome developer UX challenges to be considered viable.

Switching GPU vendors wouldn’t necessarily decrease operating costs for Microsoft, though. For that, Microsoft needs an AI ASIC. These purpose-built chips can significantly reduce power consumption by stripping away GPU bloat (general-purpose hardware the target workload doesn’t need), which would lower Microsoft’s operating costs.

Could AI ASICs be a cheaper CapEx hit than Nvidia GPUs? Yes. ASICs often have a much lower cost than Nvidia’s GPUs, which could put a serious dent in Microsoft’s CapEx spending. From MarketWatch,

But Malik and his team “expect both to coexist,” noting the pluses and minuses of the two. Custom ASICs cost perhaps $5,000, versus $20,000 to $30,000 for GPUs, although GPUs have far more high-bandwidth memory and the ability to be reprogrammed to different workloads.

Or Microsoft could spend the same amount and get more compute!
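To make “more compute for the same spend” concrete, here is a rough Python sketch using the MarketWatch prices. The $1B budget is hypothetical, and $25,000 is simply the midpoint of the quoted GPU range. Chip count is not compute, so the sketch also computes a break-even point: how much of a GPU’s throughput each ASIC must deliver for the ASIC fleet to come out ahead on cost per unit of throughput (ignoring memory, networking, power, and software costs).

```python
budget = 1_000_000_000  # hypothetical $1B hardware budget
asic_price = 5_000      # MarketWatch's rough custom-ASIC figure, USD
gpu_price = 25_000      # midpoint of the quoted $20,000-$30,000 GPU range, USD

asic_units = budget // asic_price
gpu_units = budget // gpu_price
print(f"{asic_units:,} ASICs vs {gpu_units:,} GPUs")

# Chip counts are not compute. The ASIC fleet wins on cost per unit of
# throughput only if each ASIC delivers more than this fraction of a GPU's.
breakeven_relative_throughput = asic_price / gpu_price
print(f"Break-even: each ASIC needs > {breakeven_relative_throughput:.0%} of a GPU's throughput")
```

If an ASIC delivered, say, half a GPU’s throughput on Copilot-style inference, the same budget would buy 2.5x the aggregate throughput; at 20% it would merely break even.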

Summary (Part 1)

The takeaway so far is that Microsoft’s LLM-powered consumer products are truly productivity enhancing and useful. They have product-market fit and will continue to grow, driving significant inference workloads for Microsoft. This, in turn, contributes to rising capital and operational costs.

The substantial CapEx and OpEx associated with running these AI workloads on Nvidia GPUs presents a strong incentive for Microsoft to switch to AI ASICs for higher throughput and lower power consumption (OpEx) and possibly lower upfront costs (CapEx).

Is Nvidia’s revenue from Microsoft at risk?

Consumer products sprinkled with fairy dust (LLMs) have significant CapEx and OpEx costs, and Microsoft operates at a scale where transitioning from Nvidia to an AI ASIC makes a ton of sense.

Stay tuned for part 2, where we’ll dive into Microsoft’s AI chip named Maia, Microsoft’s Azure platform business and its dependency on Nvidia, and draw further conclusions on Nvidia’s revenue risk.

If you liked this post, please forward it to a friend! Word-of-mouth recommendations are the highest compliment!