CPUs: Hardware, Yet Infinitely Adaptable Through Software

A Beginner's Guide to CPUs and the Top Companies Shaping the Future

This series aims to explain the technical and business fundamentals of semiconductors for a broad audience. My goal is to teach what, why, and how in a way that can be immediately applicable. A successful outcome looks like Chipstrat subscribers who can reason about the impact of semiconductor news on their work or daily life.

Most educators, books, or online sources introduce semiconductors in one of two ways: semiconductor physics or the history of semiconductors. Neither of those sounds immediately applicable to me! Rather, the best place to start is with key system building blocks such as CPUs, GPUs, and memory. This level of abstraction is a helpful starting point for understanding the semiconductor business landscape, diving deeper into the technical details, and analyzing current events.

Let’s put the pieces together.

Mental Model

Modern electronic systems like phones and computers have capabilities analogous to people. They can think. They can remember. They can communicate. They can sense the world around them. They regulate the power they need to function.

We can create a useful mental model by grouping a system’s components accordingly - think, remember, communicate, and so on.

In this series we’ll explain chips that think, including CPUs, GPUs, ASICs, and FPGAs. (Don’t let these acronyms scare you - we will unpack them in a simple manner.)

This mental model is also useful for understanding semiconductor companies: Intel and AMD make logic chips that help your computer or phone think. Samsung and Micron make memory chips that help systems remember. STMicroelectronics makes accelerometers that help phones sense their position in space to orient their screen accordingly.

🤔 A quick aside: in this series I will use terms like semiconductor chip, integrated circuit, chips, and semis interchangeably.

Let’s shine a light on the general purpose thinking powerhouse: the CPU.

Think

The most valuable and differentiating capability of humans is our ability to reason. Likewise, the most valuable integrated circuits in a system are those that help a system think. These chips range from general purpose chips that perform a broad set of tasks to single purpose chips that only execute a certain task.

If you’re wondering why single purpose chips even exist (“why not just use general purpose chips that could do that specific task and many others?”) - you’re asking the right questions! Here’s a hint - there are trade-offs to general-purpose processing.

Keep reading and I’ll explain.

General Purpose Processors

We’ll begin with the workhorse of modern computing - the CPU (Central Processing Unit).

📖 CPU: A semiconductor chip designed to read and follow instructions.

The first insight to understand about CPUs:

💡 CPUs are hardware, yet are endlessly reconfigurable through software

To unpack this, let’s define these terms.

📖 Hardware: circuits that never change

Broadly speaking, semiconductor circuits are fixed. They are unchanging, hence the hard in hardware.

📖 Software: instructions the CPU executes to perform a task

Computer programs or software can be thought of as a list of instructions for a CPU to execute. These programs can easily be changed, hence the soft in software.

Back to our insight -

💡 CPUs are hardware, yet are endlessly reconfigurable through software

CPUs are designed for a single job: to run software.

Want to use Microsoft Excel, browse the web, or read this in the Substack app? CPUs are at your service!

This versatility is why computer programming is so fun - if you can dream it, you can describe it in a computer language and the CPU will do it for you!

Software makes CPUs endlessly reconfigurable.
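To make the hardware/software split concrete, here is a minimal Python sketch of a toy "CPU". The `execute` function plays the role of fixed hardware: its logic never changes. The programs fed to it play the role of software. The three-instruction set (`ADD`, `MUL`, `PRINT`) is made up purely for illustration; real CPUs implement far larger instruction sets directly in silicon.

```python
def execute(program, accumulator=0):
    """Toy 'CPU': run a list of (opcode, operand) instructions.

    The body of this function is our fixed 'hardware'. The opcodes below are
    hypothetical and exist only for this illustration.
    """
    output = []
    for opcode, operand in program:
        if opcode == "ADD":
            accumulator += operand
        elif opcode == "MUL":
            accumulator *= operand
        elif opcode == "PRINT":
            output.append(accumulator)
        else:
            raise ValueError(f"unknown instruction: {opcode}")
    return output


# Two different programs ('software') run on the same unchanged 'hardware'.
add_then_double = [("ADD", 3), ("MUL", 2), ("PRINT", None)]
count_up = [("ADD", 1), ("PRINT", None), ("ADD", 1), ("PRINT", None)]

print(execute(add_then_double))  # [6]
print(execute(count_up))         # [1, 2]
```

Changing what the "CPU" does only required writing a new list of instructions, not rebuilding `execute`.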

CPUs are a beautiful paradox. They’re designed to do one thing, yet can do everything. They’re fixed hardware, but can be endlessly reconfigured. 🤯

The term of art for this versatility is “general purpose”. On a hardware spectrum ranging from “general purpose” to “single purpose”, CPUs anchor the far end of “general purpose” hardware. This general purpose nature of CPUs is the main reason they are so ubiquitous.

Now let’s get back to our earlier question: if CPUs can do everything, why make single purpose chips that can only do a specific task?

The answer: the general purpose nature of CPUs comes at a cost. There are trade-offs.

CPU trade-offs

📖 Trade-offs: a balancing of factors, all of which are not attainable at the same time. Giving up one thing in return for another. (Merriam-Webster)

Because trade-offs are fundamental to semiconductor engineering and business strategy, let’s run through a quick illustration:

Imagine there are 12 full grocery bags in my car that I need to carry into the house. Do I carry all of them at once in a single trip, or should I carry a few bags at a time over several trips?

This is an exercise in trade-offs. If I want to minimize my time spent on this task, I’ll figure out how to carry all 12 bags at once and accept the increased risk of dropping and damaging the groceries. On the other hand, I can minimize the risk of damage but at the cost of the increased time it takes to make several trips.

CPUs are designed to prioritize versatility above performance and power consumption. Specifically, for any given application, a CPU is usually slower and less power efficient than custom hardware tailored to that application.

That said, CPUs are generally designed to be as performant and power efficient as possible, especially for a broad variety of common tasks.

Let’s illustrate how CPUs are conceptually more flexible but less performant for a given task:

Imagine you’ve given a list of instructions to a child explaining how to brush their teeth before bed. Every night they pick up the piece of paper, read the instructions, and brush until they’ve cleaned each tooth. One day, you decide to add an important step to the instructions: flossing. (My dentist paid me to say that). Luckily, the child can read the updated instructions and start including flossing in their nightly routine.

Alternatively, imagine you build a robot arm that holds 20 or so mini toothbrushes - one for each tooth. This optimized solution brushes every tooth simultaneously and the entire process takes only 30 seconds. It’s so much faster overall and does a better job on each tooth (no one has the discipline to spend 30 seconds on a tooth, right?) This solution is amazing until the dentist calls; this robot wasn’t built to floss!

As you figured out already, the child represents a CPU and the robot is like a single purpose chip. The child was less performant for the given task, but they had no trouble following updated instructions to add flossing to their routine. Conversely, the robot was designed to take advantage of inherent parallelism in the problem, namely that each tooth could be brushed simultaneously. As a result, the robot was faster than the child and gave each tooth a better cleaning. But the robot couldn’t quickly adapt to the addition of flossing to the routine.

📖 Parallelism: the ability to break down a problem into steps that can be executed simultaneously
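The toothbrush analogy maps neatly onto code. In this minimal Python sketch, `brush` is a hypothetical stand-in for work on one tooth (a short sleep simulates the effort). The sequential loop is the child; the thread pool with 20 workers is the robot brushing every tooth at once. Both produce the same result, but the parallel version finishes in roughly the time of a single tooth.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def brush(tooth):
    """Hypothetical stand-in for brushing one tooth; the sleep simulates work."""
    time.sleep(0.01)
    return f"tooth {tooth} clean"


teeth = range(1, 21)  # 20 teeth, as in the analogy

# Sequential: one tooth after another, like the child.
start = time.perf_counter()
sequential = [brush(t) for t in teeth]
sequential_time = time.perf_counter() - start

# Parallel: all teeth at once, like the robot with 20 brushes.
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=20) as pool:
    parallel = list(pool.map(brush, teeth))  # map() keeps results in order
parallel_time = time.perf_counter() - start

print(sequential == parallel)  # True: same result either way
print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

Threads work here because `time.sleep` releases Python's global interpreter lock; CPU-heavy Python code would usually need `ProcessPoolExecutor` instead to actually run on multiple cores.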

The key insight to remember with CPUs:

💡 CPUs are the right choice when a system prioritizes supporting a variety of workloads over power/performance optimization for a particular task

Power - Performance - Area

The next most important insight is that CPUs are governed by a power-performance-area (PPA) trade-off. In short, these attributes are all interdependent; increased performance generally comes at the cost of increased power, chip area, or both.

The best way to grasp the impact of PPA decisions is to put on our product manager hat and think through the customer impact of these trade-offs.

Imagine we are a CPU product manager and our target customers are professionals with computationally heavy workloads like music or video production. These customers want the highest performance CPU possible to maximize their work productivity. They are willing to pay a premium and are happy to work from a desktop computer.

Next, we write product requirements that specify how much additional performance we need out of our chip for these users and give it to the engineering team. Our CPU architect comes back and says she can maximize CPU performance for these workloads by adding advanced features to the chip’s design and speeding up the clock. However, these suggestions will increase the physical size and overall power consumption of the CPU by a small amount.

What does this mean for our customers? Expanding the size of the chip will increase the cost of the CPU to the customer, and an increased power consumption will increase electricity costs and fan noise for desktop users and decrease battery life on laptops.
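Why does speeding up the clock cost power? A common first-order model for a chip's switching (dynamic) power is P = α·C·V²·f: activity factor times switched capacitance times supply voltage squared times clock frequency. Adding features tends to add transistors, and with them capacitance and area, while higher clocks often need somewhat more voltage. The sketch below plugs made-up numbers into that formula; it ignores leakage (static) power and does not describe any real CPU.

```python
def dynamic_power(activity, capacitance_f, voltage_v, frequency_hz):
    """First-order CMOS dynamic power estimate in watts: alpha * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical baseline chip (illustrative numbers only).
baseline = dynamic_power(activity=0.2, capacitance_f=50e-9,
                         voltage_v=1.0, frequency_hz=4.0e9)

# Assumption: a 25% faster clock needs about 10% more voltage to stay stable.
boosted = dynamic_power(activity=0.2, capacitance_f=50e-9,
                        voltage_v=1.1, frequency_hz=5.0e9)

print(f"baseline: {baseline:.1f} W")
print(f"boosted:  {boosted:.1f} W ({boosted / baseline:.2f}x power for 1.25x clock)")
```

Because voltage enters squared, power in this model rises faster than clock speed, which is why "a little more performance" can cost noticeably more electricity and heat.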

Fortunately, our target customers are desktop PC users who prefer the full power and peak performance of a desktop over the portability of a laptop, and don't mind being plugged into the wall. Additionally, these professional customers are ready to pay more because the productivity benefits are greater than the extra cost of the premium CPU. The performance boost from the powerful CPU is more valuable to customers than the higher price and power use, so we decide to go ahead with our architect's plan. 👍 🚀

This example of a PPA (performance, power, area) trade-off shows how market demands for a product influence technical choices, and how these technical decisions, in turn, affect product requirements.

Clearly, technical trade-offs must be weighed in terms of their impact on the product and consumer. The best teams have strong relationships between engineering and business stakeholders (like product, marketing, and sales), with communication flowing freely in both directions.

The key takeaway:

💡 Balancing trade-offs is a crucial skill underlying both semiconductor design and business strategy.

Desktop vs Mobile

We see PPA trade-offs on full display when comparing CPUs designed for desktop systems with those designed for mobile systems like phones and tablets.

As previously discussed, CPUs for desktop computers prioritize performance at the cost of increased power consumption, heat dissipation, and chip size. The desktop form factor has ample space for additional equipment like heat sinks and fans needed to cool larger, hotter CPUs.

On the other hand, mobile CPUs optimize for low power consumption to meet the goal of maximizing battery life. Mobile CPUs are generally smaller, run cooler, and are slower than desktop CPUs. As such, mobile CPU engineers make different power-performance-area tradeoff decisions than desktop CPU designers.

Performance Per Watt

You’re absolutely correct to think “battery life is important, but my phone better be fast too!” If mobile CPU designers only prioritize battery life, they might create a phone with a long battery life but terrible performance. Modern mobile user experience demands both high performance and low power; we all want fast phones that last a long time without needing to charge.

How do engineers keep tabs on the impact of their tradeoff decisions on performance and power consumption? And how can we compare two different CPUs to understand which better optimizes across both power and performance?

The answer? A metric known as performance per watt.

📖 Performance per watt: a metric that measures energy efficiency of a system

This metric, performance divided by power, is a simple and intuitive way to keep an eye on both performance and power. If CPUs A and B have the same performance, but B requires less power, then B is more energy efficient and will have a higher performance per watt.
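The CPU A versus CPU B comparison can be written out directly. In this minimal sketch, the benchmark scores and wattages are hypothetical, and "performance" is assumed to be a single benchmark score measured while the chip draws the stated average power.

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    name: str
    performance: float  # hypothetical benchmark score (higher is better)
    power_watts: float  # average power draw while running that benchmark

    @property
    def perf_per_watt(self) -> float:
        return self.performance / self.power_watts


# Same performance, but B draws half the power.
cpu_a = Cpu("A", performance=1000, power_watts=20)
cpu_b = Cpu("B", performance=1000, power_watts=10)

for cpu in (cpu_a, cpu_b):
    print(f"CPU {cpu.name}: {cpu.perf_per_watt:.0f} points per watt")  # A: 50, B: 100

best = max((cpu_a, cpu_b), key=lambda c: c.perf_per_watt)
print(f"More energy efficient: CPU {best.name}")  # B
```

The same comparison works when performance differs too: a chip that is 20% faster but draws 50% more power ends up with lower performance per watt.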

Performance per watt is a useful metric when making tradeoffs during system design and when comparing systems. Although we introduced it in the context of power-constrained systems like phones and tablets, energy efficiency matters in all systems including high performance systems like those used in data centers.

AMD’s Sam Naffziger explains it very well in an episode of the Moore’s Lobby podcast while discussing the Green 500 list of the world’s most energy-efficient supercomputers:

“Arguably, the performance per watt is more important than the raw performance because we’re all limited by power consumption going forward. If we don’t improve on that performance per watt metric, performance doesn’t go up either. It doesn’t matter how good your theoretical performance is - if you can’t power the thing, it’s not helping. So Green 500 tracks that performance per watt.”

Performance per watt has significant business implications, especially for large data centers owned by hyperscalers like Amazon, Microsoft, and Google. It’s estimated that data centers accounted for 1.8% of total US electricity consumption in 2014. (Surprisingly, I can’t find newer data on this important subject!) Recent generative AI innovations like ChatGPT are transforming the way we work, and those innovations are extremely computationally expensive. Accounting for generative AI, some forecasts predict data centers could consume up to 21% of the world’s electricity supply by 2030. Clearly, data center energy consumption significantly affects hyperscalers’ operating costs, as well as local and global energy supply and demand. It’s imperative that we all keep an eye on performance per watt and energy-efficient computing going forward.

📖 Hyperscalers: large companies that provide cloud, networking, and internet services at a massive scale including Amazon (AWS), Microsoft (Azure), and Google (GCP).
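To see why hyperscalers care, here is some back-of-the-envelope arithmetic. Every number in this sketch (fleet size, watts per server, electricity price) is hypothetical, chosen only to show the shape of the calculation: energy is power multiplied by time, and cost is energy multiplied by price. The assumption is that a newer CPU with twice the performance per watt can do the same work at half the power.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(num_servers, watts_per_server, price_per_kwh):
    """Yearly electricity cost (dollars) for servers running around the clock."""
    kilowatts = num_servers * watts_per_server / 1000
    kwh_per_year = kilowatts * HOURS_PER_YEAR
    return kwh_per_year * price_per_kwh


# Hypothetical fleet and electricity price, for illustration only.
servers, price = 10_000, 0.08

# Same workload; doubling performance per watt halves the power needed.
old_cost = annual_energy_cost(servers, watts_per_server=500, price_per_kwh=price)
new_cost = annual_energy_cost(servers, watts_per_server=250, price_per_kwh=price)

print(f"old: ${old_cost:,.0f}/yr  new: ${new_cost:,.0f}/yr  "
      f"savings: ${old_cost - new_cost:,.0f}/yr")
```

This only counts the servers themselves; real facilities also spend energy on cooling, so lower server power usually brings some cooling savings on top.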

CPU Cores

When you explore modern CPUs from leading manufacturers, you'll invariably encounter the term "cores" highlighted as a key feature. A core can be thought of as a self-sufficient CPU designed to execute computer programs independently. Multi-core processors therefore are akin to embedding several independent CPUs onto one chip, working in tandem. The evolution from single-core to multi-core has an insightful history that we will explore in a future post.

The main takeaway is that multi-core CPUs have a greater capacity to handle multiple computing tasks simultaneously, which increases system throughput. Not all tasks benefit from parallel processing; some tasks are inherently sequential and cannot be effectively broken down into parallel components. Therefore, CPU performance doesn’t necessarily scale linearly with the number of cores.
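A classic way to quantify this limit is Amdahl's law, which gives the best-case speedup when only a fraction p of a task can run in parallel across n cores: speedup = 1 / ((1 - p) + p / n). The 90% parallel fraction below is an assumption picked for illustration, not a measurement of any real program.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: best-case speedup when only part of a task is parallel."""
    serial_fraction = 1 - parallel_fraction
    return 1 / (serial_fraction + parallel_fraction / cores)


# Assume (hypothetically) that 90% of a program can be spread across cores.
for cores in (1, 2, 4, 8, 16, 64):
    print(f"{cores:>2} cores -> {amdahl_speedup(0.9, cores):.2f}x speedup")
```

Even with 64 cores the speedup stays below 9x, and no number of cores can push it past 10x, because the 10% serial portion always runs on a single core.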

💡 Multicore CPUs can be thought of as a parallel processing system

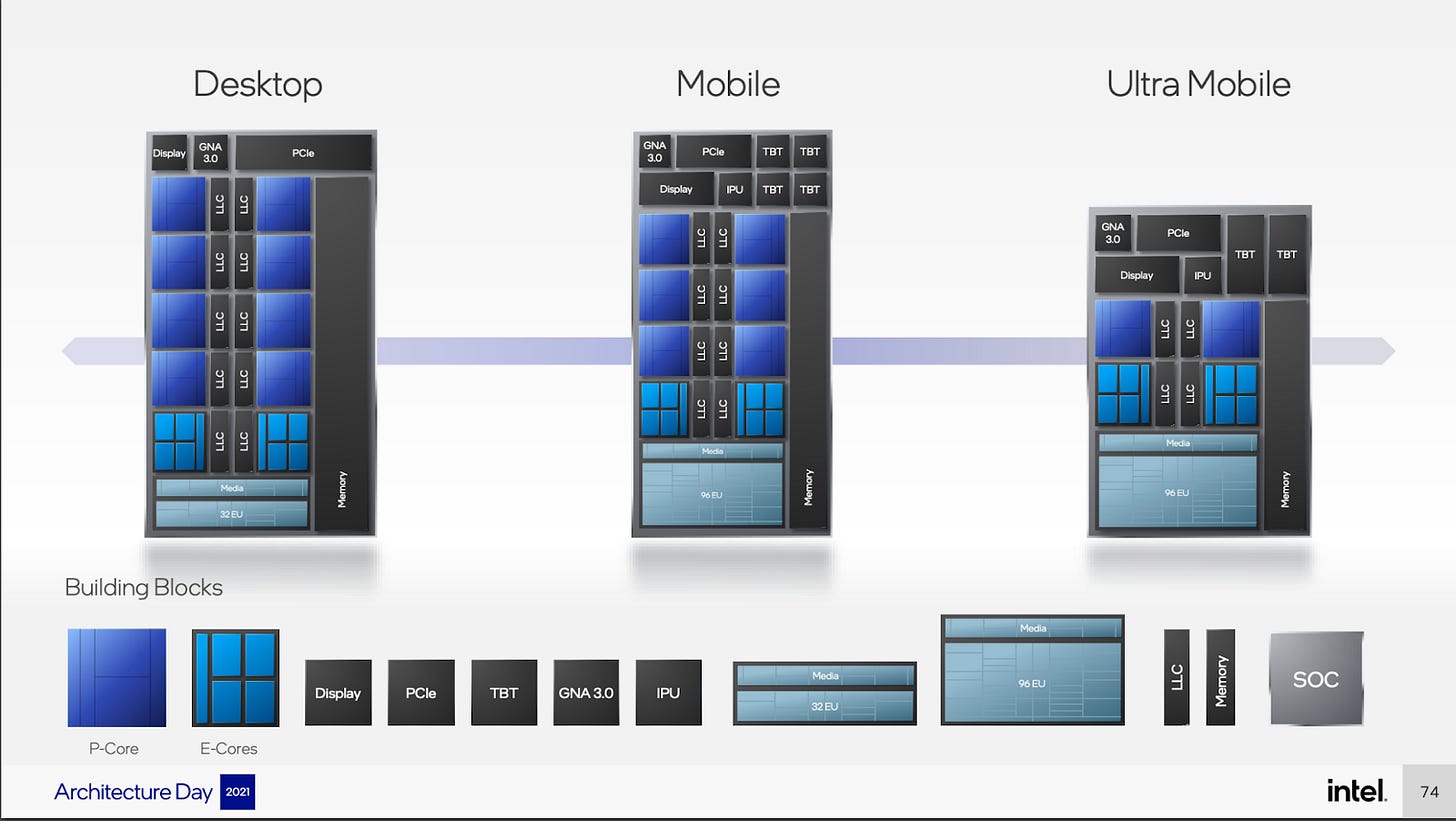

The second interesting takeaway is that multi-core CPUs can be composed of high-performance AND energy-efficient cores.

Imagine a CPU like a team with two types of players: performance cores and efficiency cores. Performance cores are like strong players who handle tough tasks quickly and powerfully, but they use more energy, need more space, and produce more heat. Efficiency cores, on the other hand, are like players who use less energy while still performing well.

Blending performance (P) and efficiency (E) cores is where the magic happens. Even in CPUs designed for high performance, you'll find efficiency cores adeptly managing less intensive tasks. This strategy is all about smart power usage: not every job requires the mightiest processor. The operating system and CPU collaborate to orchestrate the workload across P and E cores, taking into consideration which core type is best suited for each task.

💡 Modern multicore CPUs combine high-performance and energy-efficient cores to handle multiple tasks simultaneously and efficiently
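Here is a deliberately simplified sketch of the P/E idea. The `Task` type, the `demanding` flag, and the `assign` rule are all hypothetical: demanding tasks prefer P cores, light tasks prefer E cores, and a task spills over to the other core type when its preferred type is fully booked. Real schedulers are far more sophisticated; this only illustrates the matching of work to core type.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    demanding: bool  # hypothetical flag: True for heavy or latency-sensitive work


def assign(tasks, p_cores, e_cores):
    """Toy heuristic: demanding tasks go to P cores, light ones to E cores.

    If the preferred core type has no free cores, fall back to the other type;
    if neither has a free core, the task waits.
    """
    free = {"P": p_cores, "E": e_cores}
    placement = {}
    for task in tasks:
        preferred, fallback = ("P", "E") if task.demanding else ("E", "P")
        for core_type in (preferred, fallback):
            if free[core_type] > 0:
                free[core_type] -= 1
                placement[task.name] = core_type
                break
        else:
            placement[task.name] = "waiting"  # every core is busy
    return placement


tasks = [
    Task("video export", demanding=True),
    Task("game", demanding=True),
    Task("email sync", demanding=False),
    Task("virus scan", demanding=False),
    Task("music player", demanding=False),
]
print(assign(tasks, p_cores=2, e_cores=4))
```

Real systems go much further: on Intel's hybrid chips, for example, the operating system can use hardware feedback from Intel Thread Director, and tasks can move between core types as their behavior changes.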

Application time

At the beginning of this article, I stated:

My goal is to teach what, why, and how in a way that can be immediately applicable. A successful outcome looks like Chipstrat subscribers who can reason about the impact of semiconductor news on their work or daily life.

Let’s see how I did!

By now you should know:

What a CPU is

Why CPUs are important

Key trade-offs impacting CPUs (power, performance, area) and how those decisions impact product and business strategy

Let’s see if you can reason through some questions about modern CPUs with your new understanding!

Take a look at the following image from Intel’s 2021 Architecture Day presentation, and note the legend in the bottom left showing P-cores and E-cores.

Desktop CPU

How many P cores and E cores does the desktop CPU diagram show?

Why are there so many P cores in a desktop CPU?

Why does the desktop CPU also have E cores?

Ultra Mobile

How many P and E cores does the ultra mobile CPU have?

Why are there fewer P cores than in the desktop CPU?

Why is the chip area smaller on ultra mobile than desktop?

What might be examples of “ultra mobile” systems?

How did you do?

The rest of this article, accessible to paid subscribers, will discuss CPU market segments and their competitors. It aims to provide a basic understanding of the industry alongside our earlier overview of the core technology involved. Market segments discussed include mobile, consumer, enterprise & cloud, and professional & high-performance computing. Companies discussed include ARM, Apple, Qualcomm, Samsung, Intel, AMD, NVIDIA, Ampere, Tenstorrent, and more.

Free subscribers can skip ahead to GPUs: