Lisa Su - Steady As She Goes

Lisa Su's Recent Stratechery Interview And Computex Keynote

This post discusses insights from Lisa Su's interview with Ben Thompson and the Computex keynote.

We’ll also cover the one head-scratcher that AMD must improve going forward.

Along the way, we’ll see an argument advocating for Nvidia to acquire Ampere.

Modularity

Lisa believes the future of AI hardware is customer choice enabled by modular designs.

From Stratechery’s recent interview, Lisa claims the market will demand more choice at lower cost and less power than Nvidia can provide:

LS: When you look at what the market will look like five years from now, what I see is a world where you have multiple solutions. I’m not a believer in one-size-fits-all, and from that standpoint, the beauty of open and modular is that you are able to, I don’t want to use the word customize here because they may not all be custom, but you are able to .… tailor the solutions for different workloads, and my belief is that there’s no one company who’s going to come up with every possible solution for every possible workload.

By the way, I am a big believer that these big GPUs that we’re going to build are going to continue to be the center of the universe for a while, and yes, you’re going to need the entire network system and reference system together. The point of what we’re doing is, all of those pieces are going to be in reference architectures going forward, so I think architecturally that’s going to be very important.

My only point is, there is no one size that’s going to fit all and so the modularity and the openness will allow the ecosystem to innovate in the places that they want to innovate. The solution that you want for hyperscaler 1 may not be the same as a solution you want for hyperscaler 2, or 3.

Lisa argues that, over time, companies will stop buying solely based on performance and begin optimizing their data center footprint for both power and cost.

LS: At some point, you’re going to get to the place where, “Hey, it’s a bit more stable, it’s a little bit more clear”, and at the types of volumes that we’re talking about, there is significant benefit you can get not just from a cost standpoint, but from a power standpoint. People talk about chip efficiency, system efficiency now being as important if not more important than performance, and for all of those reasons, I think you’re going to see multiple solutions.

Accordingly, AMD is building a modular platform with a broad portfolio.

From her introduction at Computex,

LS: AMD is uniquely positioned to power the end-to-end infrastructure that will define AI computing. First it's delivering a broad portfolio of high-performance energy-efficient compute engines for AI training and inference including CPUs, GPUs and NPUs.

Software Focus

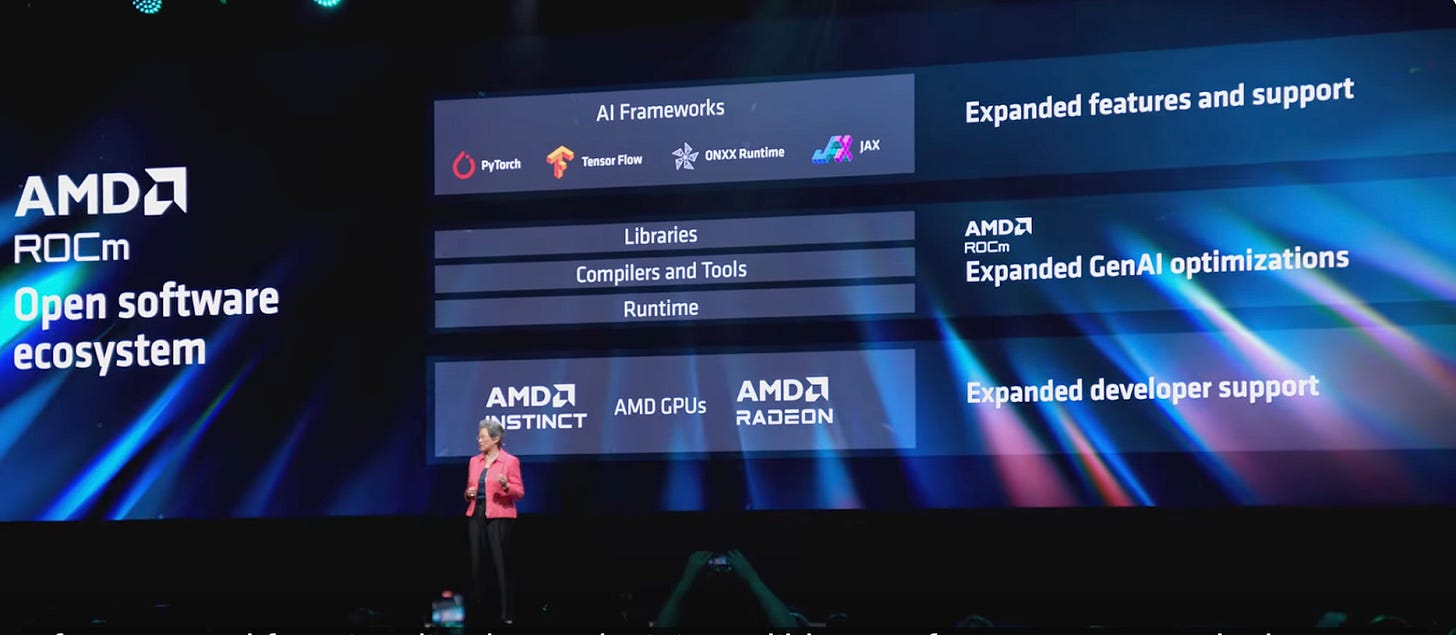

AMD’s success in Su’s open and modular future requires flawless AI software support.

Historically, AMD’s ROCm ecosystem has a poor reputation:

The software is called ROCm, it’s open source, and supposedly it works with PyTorch. Though I’ve tried 3 times in the last couple years to build it, and every time it didn’t build out of the box, I struggled to fix it, got it built, and it either segfaulted or returned the wrong answer. In comparison, I have probably built CUDA PyTorch 10 times and never had a single issue.

Lisa clearly understands the significance of software and the need for AMD to improve. She hit on this topic right out of the gate in the Computex keynote introduction and later gave abundant detail:

We've made so much progress in the last year on our ROCm software stack, working very closely with the open source community at every layer of the stack, while adding new features and functionality that make it incredibly easy for customers to deploy AMD Instinct in their software environment.

Over the last six months, we've added support for more AMD AI hardware and operating systems. We've integrated open source libraries like vLLM and frameworks like JAX. We've enabled support for state-of-the-art attention algorithms. We've improved computation and communication libraries, all of which have contributed to significant increases in the gen AI performance for MI300.

Now with all of these latest ROCm updates, MI300X delivers significantly better inferencing performance compared to the competition on some of the industry's most demanding and popular models. That is, we are 1.3 times more performance on Meta's latest Llama 3 70B model compared to H100, and we're 1.2 times more performance on Mistral's 7B model.

We've also expanded our work with the open source AI community. More than 700,000 Hugging Face models now run out of the box using ROCm on MI300X. This is a direct result of all of our investments in development and test environments that ensure a broad range of models work on Instinct.

The industry is also making significant progress at raising the level of abstraction at which developers code to GPUs. We want to do this because people want choice in the industry, and we're really happy to say that we've made significant progress with our partners to enable this.

For example, our close collaboration with OpenAI is ensuring full support of MI300X with Triton, providing a vendor agnostic option to rapidly develop highly performant LLM kernels.

And we've also continued to make excellent progress adding support for AMD AI hardware into the leading frameworks like PyTorch, TensorFlow, and JAX. We're also working very closely with the leading AI developers to optimize their models for MI300.

Ben Thompson also brought the software topic to Lisa’s attention,

BT: … it strikes me that one of the ongoing critiques of AMD is the software needs to be better. Where is the software piece of this? You can’t just be a hardware cowboy. When you joined in, was there a sense of, “Look, we had this opportunity, we could have built on this over time”. What is the reticence to software at AMD and how have you worked to change that?

LS: Well, let me be clear, there’s no reticence at all.

BT: Is that a change though?

LS: No, not at all. I think we’ve always believed in the importance of the hardware-software linkage and really, the key thing about software is, we’re supposed to make it easy for customers to use all of the incredible capability that we’re putting in these chips, there is complete clarity on that.

…

LS: One of the things that you mentioned earlier on software, very, very clear on how do we make that transition super easy for developers, and one of the great things about our acquisition of Xilinx is we acquired a phenomenal team of 5,000 people that included a tremendous software talent that is right now working on making AMD AI as easy to use as possible.

Timing - Just the Beginning

Lisa told us she is playing the long game.

With Ben Thompson, she encouraged a 10-year mindset:

LS: I would say it a different way, we are at the beginning of what AI is all about. One of the things that I find curious is when people think about technology in short spurts. Technology is not a short-spurt kind of sport. This is like a 10-year arc we’re on. We’re through maybe the first 18 months. From that standpoint, I think we’re very clear on where we need to go and what the roadmap needs to look like.

Lisa is communicating that she isn’t fazed by Nvidia’s vast head start but is patiently playing the long game.

Trust

Finally, Lisa discussed AMD’s playbook of building trust by delivering on their roadmap.

She explained AMD’s playbook to Ben:

BT: You have to win the roadmap.

LS: You have to win the roadmap and that was very much what we did in that particular point in time.

BT: And so when you come in 2014, you’re like, “Look, I can see a roadmap where we can actually win”.

LS: That’s right.

BT: And there are customers coming along that actually will buy on the roadmap.

LS: That’s right and by the way, they’ll ask you to prove it. In Zen 1, they were like, “Okay, that’s pretty good”, Zen 2 was better, Zen 3 was much, much better.

That roadmap execution has put us in the spot where now we are very much deep partners with all the hyperscalers, which we really appreciate and as you think about, again, the AI journey, it is a similar journey.

This playbook worked for AMD in the x86 server competition, where they gained share slowly and steadily. Lisa sees the same opportunity in cloud AI computing.

Takeaway

If you and I were at a bar, and you asked me to give a pitch for AMD based on Lisa’s message, I'd probably tell it like this.

Dudes, chill. It’s early innings.

Nvidia showed up first and gets to charge whatever they want — good on them. AMD is in the game now too.

And let’s be honest—most enterprises won’t see a financial return on GenAI investments for some time anyway. Heck, they’ll probably struggle even to quantify the ROI.

Anyway, have you ever met a patient CFO? “The easiest way to increase margins,” the CFO will say, “is to reduce expenses!” Ambitious individuals seeking a raise will come back with a proposal to switch away from Nvidia and reduce expenses. Some of those dollars go to AMD Instinct. Some of it will go to AMD Epyc.

Can AMD compete? Sure. Most enterprises require only “good enough” performance anyway.

And don’t worry about the ROCm complaints — AMD listened and is responding. Rumor has it Lisa gave everyone a ROCm shirt that said: “The best time to plant a tree was 20 years ago; the second best time is now.”

Yes, Nvidia upped the cadence ante to a one-year cycle, but AMD called. Lisa’s launches will probably always lag Jensen’s, but does it even matter if you can’t get your hands on Jensen’s chips anyway? A bird in the hand is worth two in the bush.

I see you reaching for that old CUDA moat argument. Yes, there will always be software switching costs, but they are diminishing as developers move up the abstraction layer from CUDA.

Why bet on Lisa? Recall the lesson from the x86 server market: Lisa knows how to make something from nothing. Jensen is a magician too, I know, I know.

But who will sleep better when the data center AI bubble deflates? Jensen or his first cousin once removed? Lisa knows not to put all her eggs in one basket.

Let’s see what happens in the coming years.

One Head Scratcher

Lisa started the keynote with a strong “AI is our #1 priority” message and then transitioned from out of the introduction like this:

So let's go ahead and get started with gaming PCs.

Gaming PCs?

Lisa spent the next 5 minutes talking about Ryzen 9000 series CPU specs with no mention of AI. 🤔

The sudden pivot from an AI-focused opening to an AI-absent PC discussion was jarring and confusing.

Worse, AMD has only been a few weeks removed from Qualcomm‘s major Microsoft Copilot+ PC coup; recall the 20+ Copilot+ PCs announced recently that were exclusively powered by Qualcomm.

After Lisa’s solid intro, she should have immediately returned fire by jumping straight into the “World’s best processor for Copilot+ PCs” discussion.

Including the gaming business in the keynote illustrates AMD’s tension between its future and past. Of AMD’s four business segments, gaming has no clear AI strategy or offerings articulated yet. (There are use cases for LLMs to improve gaming, but AMD hasn’t discussed any yet.)

As I mention in Rethinking AMD,

The vibe I get from AMD’s current approach is “one foot in the future, one foot in the past”, which comes across in their public communications too.

I’m still getting that vibe.

Fact-Checking Lisa’s Portfolio Claims

Finally, how does Lisa’s broad portfolio claim hold up?

Does AMD actually have a broad AI compute portfolio, or is that just lip service?

If we look at their compute engines across the cloud and the edge, AMD currently has the broadest AI compute portfolio.

In a future where GPU stockpiling settles down and enterprises shift to TCO and power optimization across the data center, AMD is well-positioned with their EPYC CPUs and Instinct GPUs.

As we’ll discuss later, they are well-positioned at the edge with their newly announced Copilot+ PCs. AMD’s only edge gap is the lack of smartphones and hands-free form factors, where they currently choose not to compete. From Qualcomm’s Opportunity,

I’ve been noodling on a weakness of AMD’s AI portfolio, namely the lack of AI hardware for consumer devices.

Sure, laptops will always have a slice of the consumer market. But a significant share of inference will occur on smartphones, increasingly with hands-free devices like glasses (e.g., Meta’s Ray-Bans), headphones, smart speakers, and more.

AMD’s current portfolio is not positioned to ride the incoming consumer inference wave.

Competition

Nvidia is, of course, all about those GPUs.

That said, they do have the Grace CPU Superchip, a high-performance CPU server offering.

If datacenter workload mix continues to spread across traditional and accelerated compute, and Lisa’s cost and power optimization future comes true, Nvidia could use more diversity in its CPU server portfolio offerings. They could quickly fill out their CPU server portfolio by acquiring Ampere. Sure, Nvidia and ARM could build anything, but this would be a speed to market play. Of course, there are many counterarguments why Nvidia would never opt for a CPU-only server offering, including the space being competitive and arguably commoditized.

Intel matched AMD’s breadth, although they are spinning out Altera, so FPGA offerings are moving away from the Intel Product group.

Can AMD’s EPYC and Instinct beat out Intel in a modular data center future? If early innings matter, AMD’s MI300 customers include Meta and Microsoft, whereas Intel’s Gaudi customers include OEMs like Dell and enterprises like Bosch and IBM.

My hot take is that the market is interested in AMD, whereas Intel is interested in the market. Said another way: customer pull (AMD) vs customer push (Intel).

Qualcomm will clearly compete with AMD in Copilot+ laptops from now on.

Qualcomm isn’t positioned to participate in a modular data center future. They have an AI accelerator called Cloud AI 100, but like Intel’s Gaudi, it’s unclear how many enterprises are using it.

Now What?

If Lisa is right, this is a marathon and not a sprint. I guess we sit back and enjoy the show!