The Future of Edge Inference Hardware

NPUs, GPUs, ASICs at the edge. LLMs, IoT. Apple, Qualcomm, AMD, Intel, Google, Hailo, and others.

Two quick shout-outs:

The previous FPGA post was surprisingly popular. Thanks to everyone who read and shared it!

I’ve seen a recent uptick in annual subscribers. Thanks for your long-term partnership!

On to the article.

The Generative AI boom has semiconductor companies scrambling to tout their products as the ideal solution for AI inference, leading to a flood of competing claims:

Intel says laptops and desktops have a big role to play in the future of AI.

But Nvidia points to GPUs as the answer for inference, suggesting CPU inference is a thing of the past.

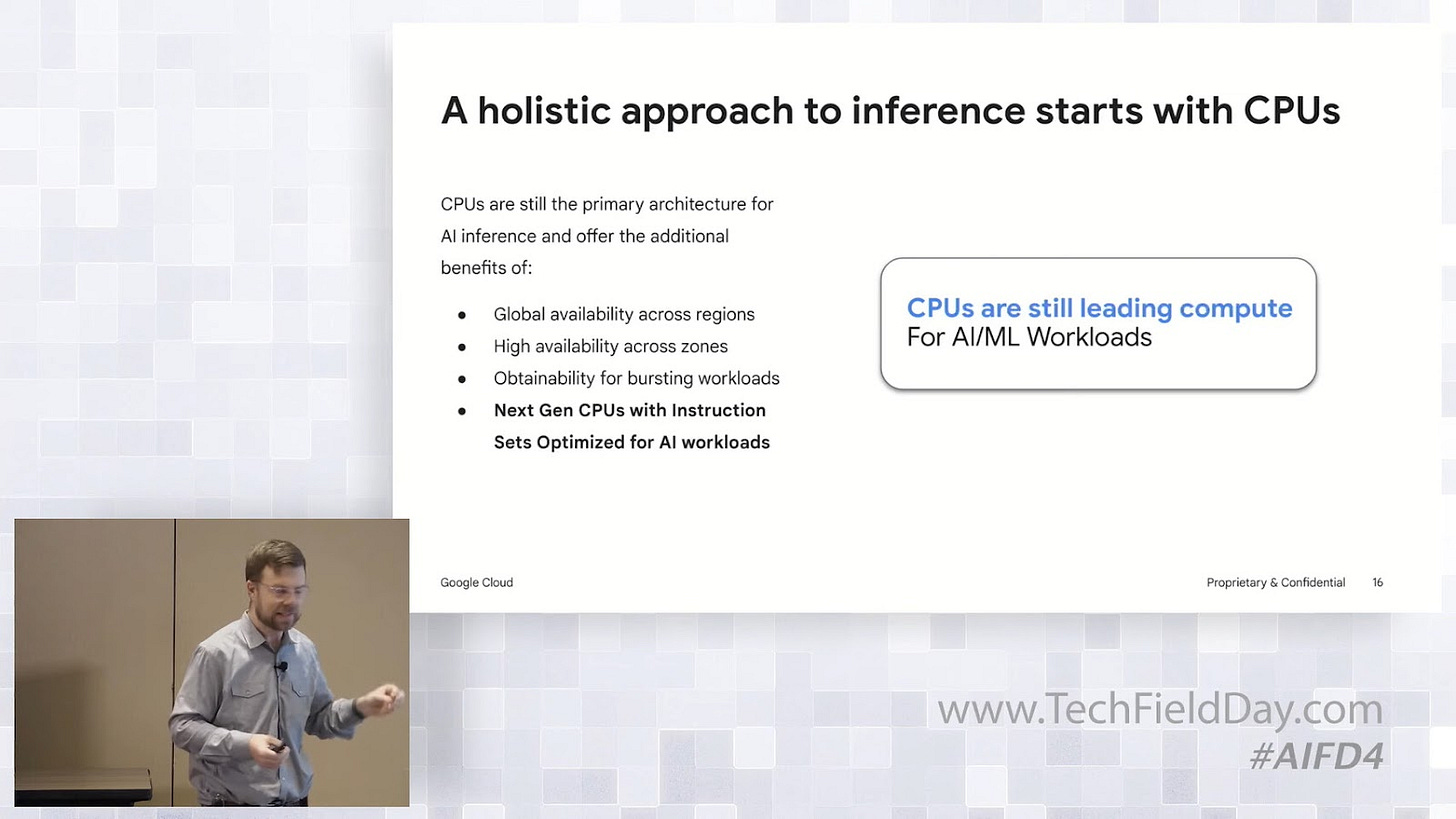

In contrast, this Product Manager at Google Cloud suggests that data center CPUs currently handle the majority of inference workloads.

Ampere agrees that data center CPUs make sense for inference, especially from an affordability and utilization point of view.

Yet Qualcomm says cloud-centric inference is a thing of the past and edge inference is where it’s at (centered around mobile, of course).

To which Nvidia points out “we do edge too” (GPU-centric edge that is).

Whew. What to make of all of this?

Let’s compare and contrast AI inference hardware so we can sort this out.

This article will focus on edge inference, and we’ll compare and contrast cloud inference in a subsequent post.

AI Inference Landscape

First, if you’re new to AI, it’s important to understand there are different types of AI workloads. This is a very common cause of confusion, as some people mistakenly equate “inference” with Large Language Model (LLM) inference.

For example, these use cases all involve AI inference:

Facial recognition to unlock your phone

Predictive text in your email editor (Gmail’s “Smart Compose”)

Camera app making your selfies look better

Spotify generating your Discover Weekly playlist overnight

Home assistant waking up after you say “OK Google” or “Hey Alexa”

Credit card company denying your transaction when you’re on vacation in a different location

GitHub Copilot

Generating a photo with DALL-E

These are all AI, but they aren’t all LLMs. The details of the particular algorithms are for another post, but the takeaway is that these tasks are enabled by a variety of different machine learning algorithms and models (GANs, LSTMs, CNNs, RNNs, Diffusion Models, Collaborative Filtering, etc).

While LLMs are getting a lot of attention, it's important to remember they exist within a broader hierarchy of Artificial Intelligence algorithms and models.

The following image attempts to illustrate that LLMs are just one type of neural network (NN), and NNs are just one type of machine learning (ML) workload.

Inference is the process of feeding data to any type of trained machine learning model in order to generate predictions, classifications, or insights. The model could be a diffusion model, an LLM, a convolutional NN, etc.

Appreciating the algorithmic variety within AI applications is key, as each family of algorithms has unique memory and compute needs that impact the choice of underlying hardware.

The resource demands of different AI models vary greatly. For instance, top-performing LLMs need vast memory and compute, often requiring GPU clusters. In contrast, traditional CNNs are less intensive and can run on single GPUs, FPGAs, or even CPUs. Naturally, the ideal tool depends heavily on the specific problem you aim to solve.
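To make that gap concrete, here is a rough back-of-the-envelope sketch of the memory needed just to hold a model's weights: parameter count times bytes per parameter. The model sizes are illustrative assumptions (a CNN with roughly 25 million parameters and two hypothetical LLM sizes), not measurements, and the estimate ignores activations, KV cache, and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and framework overhead.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Illustrative, assumed model sizes (not measurements).
models = {
    "CNN (~25M params)": 25_000_000,
    "LLM (~7B params)": 7_000_000_000,
    "LLM (~70B params)": 70_000_000_000,
}

for name, params in models.items():
    print(f"{name}: {weight_memory_gb(params, 'fp16'):.2f} GB at fp16")
```

Even under these simplified assumptions, the weights of the larger LLM alone exceed the memory of any single consumer device, while the CNN fits comfortably almost anywhere.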

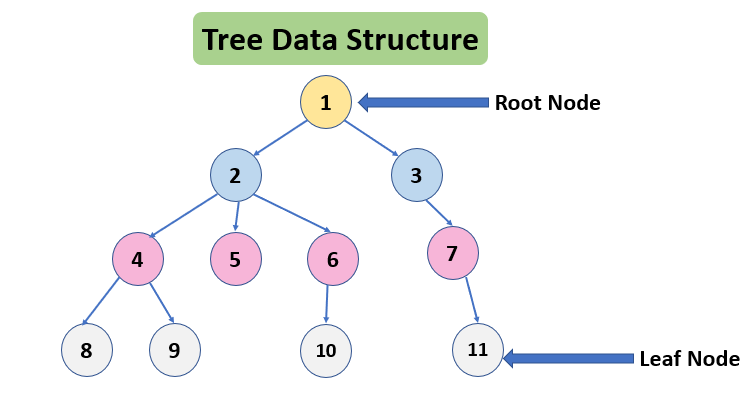

As an aside: after drawing the above clunky illustration, I realized it might have been better to represent my idea in a tree format, with Artificial Intelligence as the root node, three children (ML, Expert Systems, Search Algorithms) beneath it, and so on. Then I could have colored the path all the way down to the LLM leaf node to better illustrate the LLM family tree lineage.

I didn’t want to spend the 20+ minutes to draw this illustration, so I gave a screenshot of my clunky attempt to both Gemini Advanced and DALL-E and asked them to redraw it; both models are multi-modal and could therefore extract meaning from my original graphic!

Because Gemini is an LLM, it can only produce text output, yet it came up with this illustration, which is not bad!

DALL-E understandably struggled as it lacks the capability to analyze and correct text in its visual output.

Why am I taking us on this detour?

First, for the skeptics, I want to demonstrate that Generative AI can accelerate the creative process and thereby increase productivity. Again, I had an idea that would have taken me 20+ minutes, but with GenAI tools I created a new visualization in a fraction of the time. While the results aren't ideal yet, there’s clearly potential for solving these kinds of problems with visually appealing AI-generated output.

It’s hard to be a GenAI skeptic if you continuously try new use cases with the tools; doing so helps to see faint paths to a more productive future.

Second, I included this exercise because it is pertinent to our AI hardware conversation. Where might these types of useful GenAI workloads run in the future? Today we need huge GPU data centers to give me a simple text visualization. Will that always be the case? Let’s circle back on this later.

Which AI HW is needed for today’s inference workloads?

Selecting the right hardware for an AI use case goes beyond just the ML algorithm. One must also consider user expectations for responsiveness, whether the task can be performed on an edge device, and the overall feasibility of the hardware solution. Does the use case permit local inference, or is the cloud a necessity?

For example, certain use cases have very specific requirements, like the ability to run inference locally, offline, and with low latency (think safety-critical systems). In this case, inference must happen at the edge, and both the cost expectations and performance needs can shape the choice between low-power and high-performance hardware.

Conversely, tasks with high computational and memory demands like the most powerful LLMs might be impossible to run on edge devices like smartphones, making cloud inference the only option.
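The reasoning above can be sketched as a toy decision rule. Everything here is hypothetical: the `UseCase` fields, the `device_memory_gb` figure, and the rule itself are assumptions for illustration, not a real sizing methodology.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    must_work_offline: bool  # e.g. safety-critical systems
    model_memory_gb: float   # assumed memory needed to run the model


def choose_placement(use_case: UseCase, device_memory_gb: float) -> str:
    """Toy rule: where can inference for this use case run?"""
    fits_on_device = use_case.model_memory_gb <= device_memory_gb
    if use_case.must_work_offline:
        # A local/offline requirement forces the edge; the model must be made to fit.
        if fits_on_device:
            return "edge"
        return "edge (needs a smaller model or a bigger device)"
    # No hard local requirement: the cloud is the fallback when the model is too large.
    return "edge or cloud" if fits_on_device else "cloud"


# Hypothetical examples against an assumed 8 GB device.
wake_word = UseCase("wake word", must_work_offline=True, model_memory_gb=0.01)
big_llm = UseCase("large LLM chat", must_work_offline=False, model_memory_gb=140.0)

print(choose_placement(wake_word, device_memory_gb=8.0))
print(choose_placement(big_llm, device_memory_gb=8.0))
```

A real decision would also weigh latency targets, power budget, and cost, which this sketch deliberately leaves out.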

Which AI HW is needed in the future?

Let's explore where AI inference is likely to occur in the future. This understanding can help gauge the potential size of various hardware markets, such as edge devices, data center CPUs, and more.

We’ll take a systematic look at AI inference hardware, evaluating trade-offs and finding the best use cases for each. This will help us cut through marketing claims and determine which semiconductor solutions genuinely excel at specific AI workloads.

We'll focus on edge inference, including NPUs, GPUs, and AI ASICs, and explore how these technologies could bring more AI to the edge and unlock new user experiences.

Companies explored include Apple, Qualcomm, AMD, Intel, Google, Hailo, and others.
