The Power of Nvidia's Systems Thinking Approach

Systems Thinking, AI Engineers as the Primary Customer, Vertical Integration, Beating Competition

Happy New Year! We rounded out 2024 with thoughts on Nvidia’s edge AI news and a concerned take on AMD.

Today we’re going to dive into Nvidia’s strongest competitive advantage: a systems-thinking mindset and focus on the AI engineer.

Nvidia’s Systems Thinking

If you’ve been following Nvidia over the past year, you’ve probably heard Jensen or others mention that Nvidia is no longer a GPU company but an accelerated compute company.

Here’s a YouTube clip with Groq’s Sunny Madra on the BG2 podcast saying as much. And Sunny points out another Jensen colloquialism: the datacenter is the new unit of compute.

Before 2022, Nvidia’s revenue profile suggested it was primarily a GPU company catering to gamers and crypto miners.

Yet Nvidia has always had a systems mindset. Historically, the “system” was a graphics card with accompanying drivers and a supporting software stack, which was an expansive system compared to competitors.

However, Nvidia also has a long history of helping develop GPU-accelerated supercomputing, for example way back in 2009.

Supercomputing gave Nvidia insight into bigger systems beyond personal computers.

With the advent of the transformer architecture in 2017 and scaling laws in 2020, generative AI came into focus. Jensen seized the moment to expand Nvidia’s systems thinking to cloud AI systems designed for AI engineers.

The beauty of founder-led companies is that they can change their company mindset quickly.

From The New Yorker,

“He sent out an e-mail on Friday evening saying everything is going to deep learning, and that we were no longer a graphics company,” Greg Estes, a vice-president at Nvidia, told me. “By Monday morning, we were an A.I. company. Literally, it was that fast.”

Brad Gerstner recounts how the ecosystem quickly saw that Jensen was right:

BG: We got so excited in November ‘22 because we had folks like Mustafa from Inflection and Noam from Character.AI coming into our office talking about investing in their companies. And they said, well, if you can't pencil out investing in our companies, then buy Nvidia, because everybody in the world is trying to get Nvidia chips to build these applications that are going to change the world.

Nvidia’s system focus zoomed out from GPU systems in PCs to cloud-based AI systems. Nvidia’s primary customer persona flipped too, from crypto miners and gamers to ML engineers training large generative AI models.

Jensen Huang’s choice to vertically integrate compute, networking, and software mirrors Apple’s vertical integration model. Apple’s approach optimizes the customer experience on consumer devices, while Nvidia’s ensures a superior experience for AI engineers on massive AI clusters.

Nvidia’s AI Systems, Simply Put

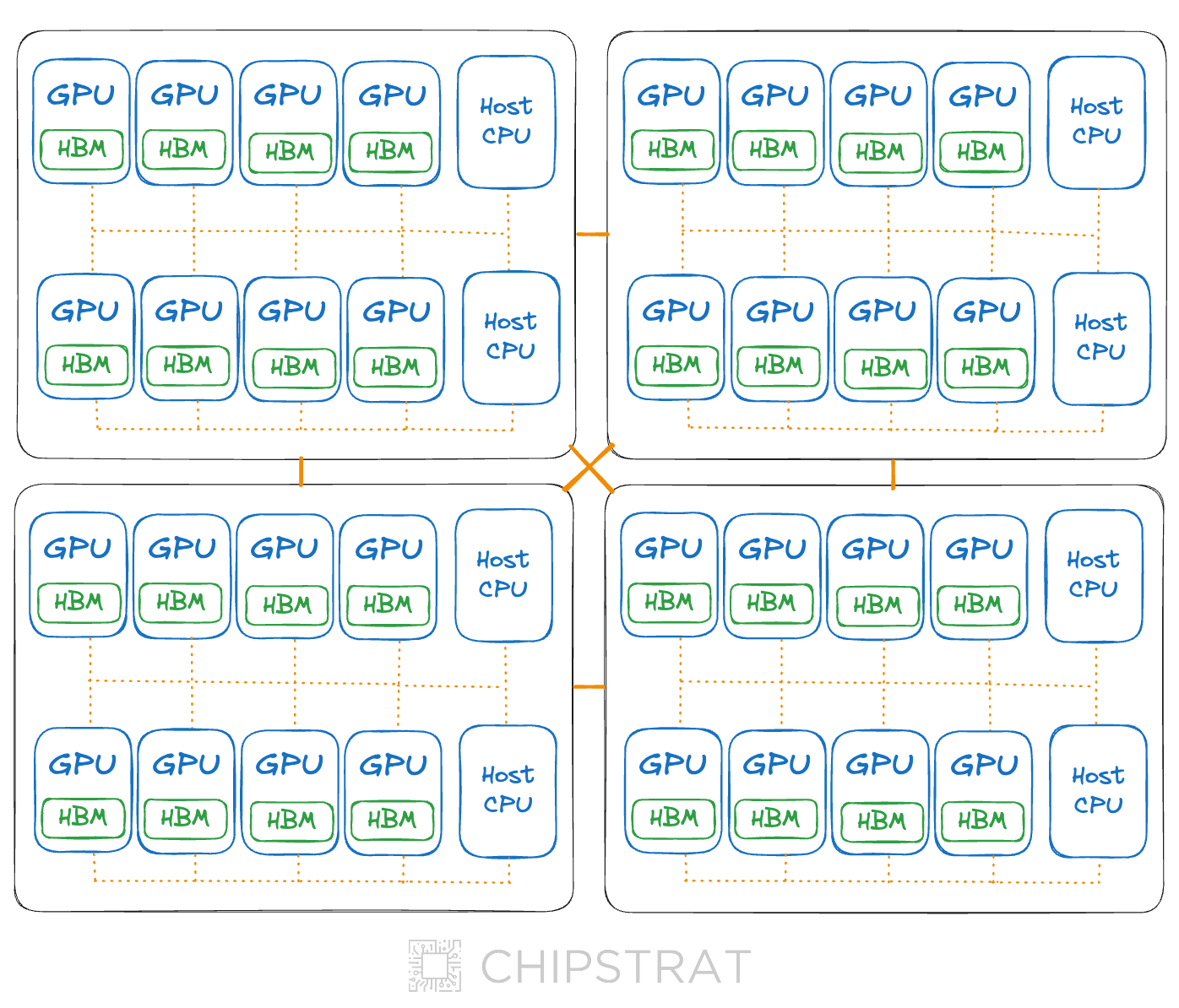

In the simplest sense, we can think of a single node of this AI system as follows:

And these nodes can be chained together to build really big AI clusters; think tens of thousands to 100,000 GPUs.

This is systems thinking now!

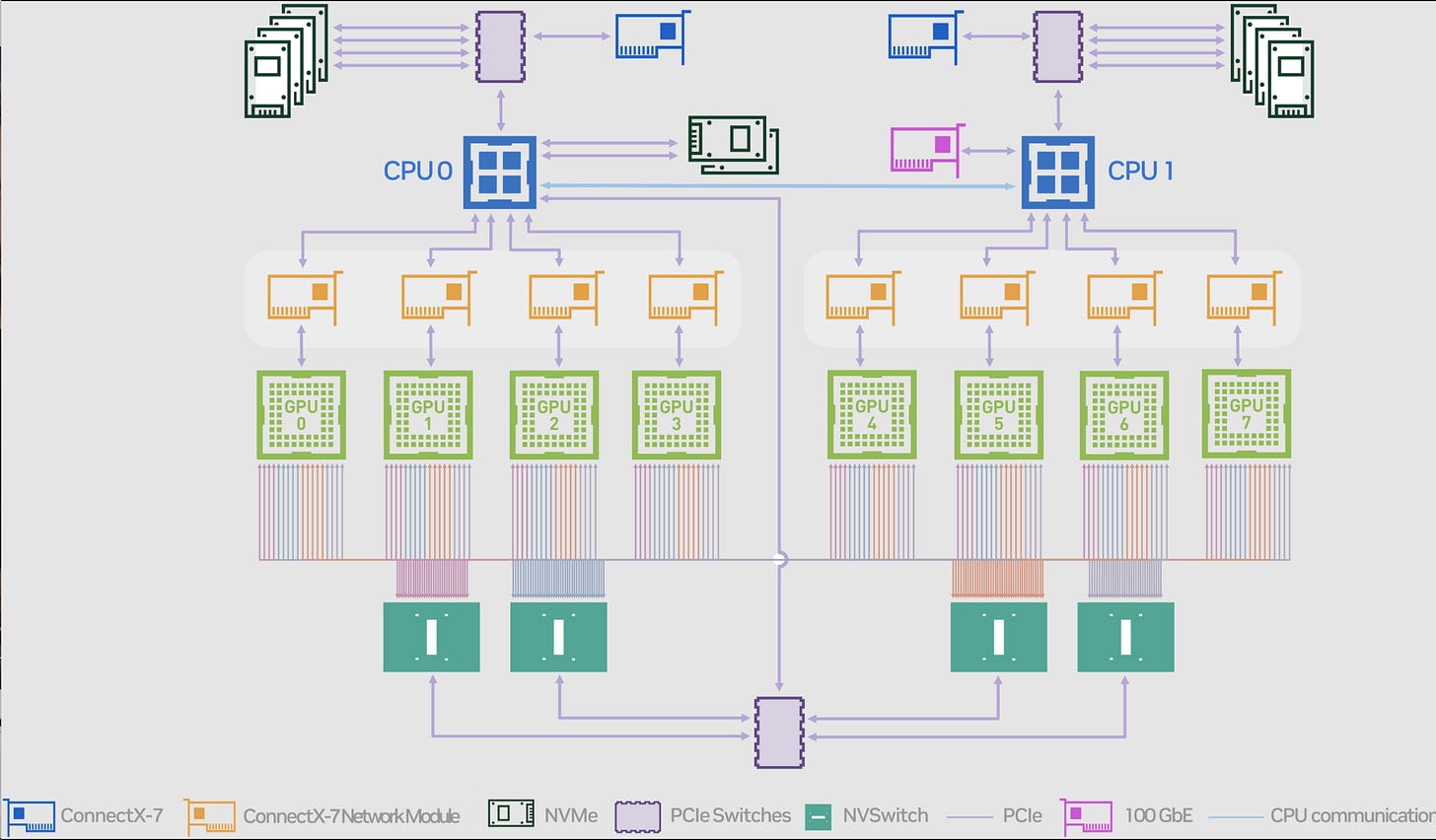

There’s way more complexity than I conceptually illustrated above. Think switches to connect all the things, wires galore, additional system memory, and solid-state storage.

But let’s not get hung up on all the precise technical details for now.

Just know that my illustration is a basic conceptual understanding of a single Nvidia DGX H100 server, and this is what Jensen’s talking about when he mentions a computer with 35,000 parts, for example in this Acquired Podcast:

David: When OpenAI was founded in 2015, that was such an important moment. Were you involved in it at all?

Jensen: I wasn’t involved in the founding of it, but I knew a lot of the people there. Elon, of course, I knew. Peter Thiel was there and Ilya was there. We have some great employees today that were there in the beginning.

I knew that they needed this amazing computer that we were building, and we’re building the first version of the DGX, which today when you see a Hopper, it’s 70 pounds, 35,000 parts, 10,000 amps. But DGX, the first version that we built was used internally and I delivered the first one to OpenAI. That was a fun day.

Nvidia’s systems thinking expands further; the DGX is just a subsystem within a large GPU cluster. You’ll hear Jensen call this AI datacenter an AI factory, for example, in this Aug 2024 earnings call where he explains Nvidia’s full-stack integration benefits and expansive systems thinking:

We have the ability, fairly uniquely, to design an AI factory because we have all the parts. It's not possible to come up with a new AI factory every year unless you have all the parts. And so, we have -- next year, we're going to ship a lot more CPUs than we've ever had in the history of our company, more GPUs, of course, but also NVLink switches, CX DPUs, ConnectX for East and West, BlueField DPUs for North and South, and data and storage processing to InfiniBand for supercomputing centers, to Ethernet, which is a brand-new product for us, which is well on its way to becoming a multibillion-dollar business to bring AI to Ethernet. And so, the fact that we have access to all of this, we have one architectural stack, it allows us to introduce new capabilities to the market as we complete it.

Nvidia’s control over the entire AI system—from GPUs to networking—allows it to deliver a superior end-to-end experience and innovate with fewer dependencies on third parties. Competitors, by contrast, build only GPUs and rely on external vendors for components like networking and final system integration, limiting their ability to optimize and control the user experience.

This is why AMD recently bought ZT Systems: to bring system design in-house and strengthen its own systems-thinking mindset.

Innovations for the AI Engineer, all the way down

Nvidia’s systems approach allows it to innovate for AI engineers at every layer, from hardware to software.

Hardware

Generative AI model training demands high-performance computing systems with massively parallel processing capabilities optimized for matrix and vector operations. These systems require vast memory and high bandwidth to efficiently handle the model's weights, activations, and gradients during training.

Modern GenAI models have grown so massive that a single GPU’s memory cannot hold all this data. Training these models now requires clusters of GPUs pooling their memory and computational power to form a supercomputer capable of handling the workload. Data exchange across nodes within the supercomputer must be ultra-fast and low-latency to ensure efficient training and enable rapid experimentation for AI engineers.
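To make the memory claim concrete, here is a rough back-of-the-envelope sketch. The numbers are assumptions for illustration, not figures from Nvidia: a hypothetical 70-billion-parameter model, mixed-precision training with the Adam optimizer at roughly 16 bytes of state per parameter, and a GPU with 80 GB of memory. Activations are ignored, so real requirements would be higher.

```python
# Assumed bytes of training state per parameter for mixed-precision Adam.
# These are illustrative values; activations are deliberately left out.
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam momentum": 4,
    "fp32 Adam variance": 4,
}


def training_state_bytes(num_params: int) -> int:
    """Total bytes for weights, gradients, and optimizer state."""
    return num_params * sum(BYTES_PER_PARAM.values())


def min_gpus_for_state(num_params: int, gpu_memory_bytes: int) -> int:
    """Smallest GPU count whose pooled memory holds the training state."""
    total = training_state_bytes(num_params)
    return -(-total // gpu_memory_bytes)  # integer ceiling division


if __name__ == "__main__":
    params = 70 * 10**9    # hypothetical 70B-parameter model
    gpu_mem = 80 * 10**9   # assumed 80 GB of memory per GPU
    total_gb = training_state_bytes(params) / 10**9
    print(f"Training state: {total_gb:.0f} GB")
    print(f"GPUs needed just to hold it: {min_gpus_for_state(params, gpu_mem)}")
```

Even under these simplified assumptions, the training state alone is more than an order of magnitude larger than one GPU's memory, which is why the work has to be spread across many GPUs.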

Of course, Nvidia’s on it — Nvidia’s NVLink enables fast GPU-to-GPU communication within a server, and InfiniBand (Mellanox acquisition) enables fast communication across multiple servers.

Want Ethernet instead of InfiniBand? Nvidia’s got you with Spectrum-X too.

Software

The crazy thing about these AI factories is that AI engineers need to harness all the complexity to experiment and train AI models. Engineers must write software that runs across all nodes in a cluster and manages communication between them. For example, during model training, nodes must share updates to stay synchronized.

Nvidia’s end-to-end approach allows it to design systems with a user-friendly developer experience. Nvidia’s internal dogfooding undoubtedly shapes this, as it has been using these AI systems internally to train state-of-the-art foundation models with Megatron. The best way to inform the design of the system you’re building is to have internal customers using it!

A great developer experience for the AI engineer requires optimized software up and down the stack, from low-level GPU drivers to high-level open-source AI frameworks.

Consider GPUs pooling memory and compute across nodes: this requires software that manages memory allocation and data consistency. Similarly, the communication that synchronizes gradients and updates during backpropagation, both within a node and across nodes, relies on software like Nvidia’s NCCL.
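To illustrate what "synchronizing gradients" means, here is a toy simulation in plain Python. It is not NCCL and does not use any Nvidia API; the function names (`local_gradient`, `all_reduce_mean`, `train_step`) are hypothetical, and the "workers" are just list entries. Each worker computes a gradient on its own slice of data, an all-reduce-style step gives every worker the same averaged gradient, and all model copies apply the identical update so they stay in sync.

```python
from typing import List, Tuple

Shard = List[Tuple[float, float]]  # (x, y) pairs held by one worker


def local_gradient(w: float, shard: Shard) -> float:
    """Gradient of mean squared error for y ~ w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> List[float]:
    """Stand-in for an all-reduce: every worker receives the same average."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


def train_step(weights: List[float], shards: List[Shard], lr: float) -> List[float]:
    """One data-parallel step: local gradients, sync, identical update."""
    grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
    synced = all_reduce_mean(grads)
    return [w - lr * g for w, g in zip(weights, synced)]


if __name__ == "__main__":
    # Two simulated workers, each holding part of a dataset where y = 3x.
    shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
    weights = [0.0, 0.0]  # each worker starts with the same model copy
    for _ in range(200):
        weights = train_step(weights, shards, lr=0.01)
    print(weights)  # both copies stay identical and approach 3.0
```

On a real cluster the averaging step is the expensive part, since the gradients live on different GPUs and must cross NVLink or the network on every step. That is the work a library like NCCL is built to make fast.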

Nvidia doesn’t need to build all the software in-house. For workload distribution across nodes, open-source tools like Slurm are used, and libraries like PyTorch are essential for building and training neural networks.

Nvidia ensures that all software, including third-party tools, works efficiently on its hardware.

Competitive Advantage

Contrast Nvidia’s systems-thinking approach and AI engineer focus with the myopic approach of competitors. For example, we’ve recently seen AMD struggle with software that is painful to use and doesn’t fully realize the hardware’s potential.

AI accelerator startups Etched and MatX were founded with a systems mindset focused on AI engineers from day one.

However, Nvidia’s vertical integration has increased the surface area of competition. Competing on AI cluster performance requires startups to think beyond chips to networking, memory, and software.

Moreover, Nvidia has a mature customer base that gives regular feedback and the resources to continuously innovate across AI system components.

Meanwhile, newcomers start from rest and must acquire customers and ramp them up before getting helpful feedback. Fortunately, most startups have AI engineers dogfooding their systems internally. This is a bit harder before tape-out, but startups can (slowly) run software on top of system emulations to get early feedback.

Jensen summarizes the competitive advantages Nvidia gains from its systems thinking and vertical integration in this May 2024 earnings call:

AI is not a chip problem only. It starts, of course, with very good chips and we build a whole bunch of chips for our AI factories, but it's a systems problem. In fact, even AI is now a systems problem.

The fact that NVIDIA builds this system causes us to optimize all of our chips to work together as a system, to be able to have software that operates as a system, and to be able to optimize across the system.

We know where all the bottlenecks are. We're not guessing about it. We're not putting up PowerPoint slides that look good. We're actually delivering systems that perform at scale. And the reason why we know they perform at scale is because we built it all here.

Now, one of the things that we do that's a bit of a miracle is that we build entire AI infrastructure here but then we disaggregate it and integrate it into our customers' data centers however they like. But we know how it's going to perform, and we know where the bottlenecks are. We know where we need to optimize with them, and we know where we have to help them improve their infrastructure to achieve the most performance.

This deep intimate knowledge at the entire data center scale is fundamentally what sets us apart today. We build every single chip from the ground up. We know exactly how processing is done across the entire system. And so, we understand exactly how it's going to perform and how to get the most out of it with every single generation.

Nvidia arguably has no vertically integrated competitors at datacenter scale yet.

Why?

Perhaps Nvidia’s real moat isn’t technology, but rather culture — a founder-led, systems-thinking mindset with a zealous focus on the AI engineer.

After all, isn’t this mindset what led to CUDA and the acquisition of Mellanox?

Jensen’s mindset is reminiscent of that of successful founders like Steve Jobs, Jeff Bezos, Elon Musk, Mark Zuckerberg, and even Walt Disney.

Of course, mention of these founders draws out the elephant in the room: what happens to Nvidia after Jensen? After all, Amazon, Apple, and Disney aren’t the same without their founders.

That’s a topic for another time, but some companies still invoke the founder ethos after succession; TSMC comes to mind.