Understanding Intel, Part 2

Foundry Significance, Intel's Future, Hardware's Bitter Lesson, Does x86 Matter, Are CPUs Dead, What About Gaudi, Client Brand Strength.

Thank you to everyone who shared their thoughts on my Understanding Intel post. This follow-up will address that feedback and cover a range of interesting topics that surfaced during the discussion.

Does x86 Lock-In Exist?

Historically, x86 has been a competitive advantage for Intel. Some readers pushed back, claiming that x86 is no longer a competitive advantage and pointing to Windows on Arm as proof.

Windows on Arm aims to challenge the Intel-AMD duopoly, but it hasn't yet made a significant dent. The core issue is that, without widespread Arm PC adoption, software developers lack the motivation to port and maintain two separate versions of their applications. It’s a chicken-and-egg problem.

Interestingly, this reality places the growth trajectory of Arm PCs squarely in the hands of Windows app developers.

To boost Arm adoption, Arm and Qualcomm should brainstorm creative incentive programs targeted at Windows developers. Imagine a summer internship program embedding students at major app companies (Adobe, Spotify, etc.) to focus on porting and supporting Arm.

I mention interns because porting an app to Arm is not fundamentally hard; in the best-case scenario, port and support just works. Set the compilation target to Arm64, hit build, and🤞. Yet developers are wary because they may need to fix upstream dependencies, which can be a time-consuming endeavor. This open-source library needs work, I hope *it* doesn’t have upstream dependencies that need work too…
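The upstream-dependency worry can be made concrete with a small sketch. Everything below is hypothetical: the package names, the dependency graph, and the set of Arm64-ready packages are made up for illustration and do not describe any real app. The idea is simply that a port is blocked by any package in the whole dependency tree that doesn't yet build for Arm64, not just the direct dependencies.

```python
from typing import Dict, List, Set

# Hypothetical dependency graph: each package maps to the packages it depends on.
DEPENDENCIES: Dict[str, List[str]] = {
    "my_app": ["ui_toolkit", "audio_lib"],
    "ui_toolkit": ["font_renderer"],
    "audio_lib": ["codec_lib"],
    "font_renderer": [],
    "codec_lib": [],
}

# Hypothetical set of packages that already build for Arm64.
ARM64_READY: Set[str] = {"my_app", "ui_toolkit", "font_renderer", "audio_lib"}


def arm64_blockers(root: str, deps: Dict[str, List[str]], ready: Set[str]) -> List[str]:
    """Return every package reachable from root that is not Arm64-ready, sorted."""
    seen: Set[str] = set()
    stack = [root]
    while stack:
        package = stack.pop()
        if package in seen:
            continue  # already visited; also guards against dependency cycles
        seen.add(package)
        stack.extend(deps.get(package, []))
    return sorted(p for p in seen if p not in ready)


print(arm64_blockers("my_app", DEPENDENCIES, ARM64_READY))
```

In this made-up graph the app and its direct dependencies are ready, yet the port is still blocked by a library two levels down, which is exactly the scenario that makes developers wary.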

The x86/Arm situation echoes the iOS/Android dynamic but with a key distinction: modern mobile developers never had to overcome the chicken-and-egg adoption hurdle.

Current x86 erosion

Readers aren’t wrong about the x86 stronghold weakening. The proliferation of web apps (Office 365, Canva, etc.) means less Windows app usage, and thus fewer apps that need an Arm port. Just ensure the browser works on Arm! Smartphones are also pulling users away from Windows altogether. The long tail of Windows apps that need to be ported will continue to shrink, leaving most PC usage concentrated on a smaller set of apps like games, password managers, cloud storage, and browsers.

Isn’t Design a Competitive Advantage for Intel?

Core Competency But Not Competitive Advantage

As expected, I heard from some folks who felt the design team was way more critical than I seemingly gave them credit for. Intel’s designs must have contributed to its rise and fall, right?

My take: design is a core competency but not a competitive advantage. Intel’s design team didn’t drive market share gains or losses — the fab team did.

Winning the race to the next node matters most, and design only needs to be competitive enough.

Don’t get me wrong, though: in this duopoly, competitive enough is still a very high bar.

Illustrative Thought Experiment

As a quick thought experiment to emphasize the value of a process node advantage over a design advantage:

Imagine a famous billionaire is up late tweeting one weekend and gets the wild idea to build a new x86 CPU competitor to Intel and AMD. The billionaire is willing to spend significantly to fund this endeavor, including hiring the best computer architects and sales team. They even manage to secure an x86 license.

Yet even with immense wealth and influence, this billionaire can't secure cutting-edge foundry capacity. Industry incumbents have already snapped up all the next-gen capacity. The startup would build its new design using larger, slower, and less power-efficient transistors.

The entrepreneur must decide: Can their new CPU architecture, even with fewer and slower transistors, deliver such a dramatic performance leap that it compels OEMs to adopt it and consumers to try it? Or should the startup abandon the idea?

If competing on an old process is a fool’s errand for a startup, is it any different for an incumbent?

AI’s Bitter Lesson

A bit of a tangent: This “prioritize scaling over architecture” lesson echoes the central thesis of AI’s Bitter Lesson.

The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin… researchers seek to leverage their human knowledge of the domain, but the only thing that matters in the long run is the leveraging of computation.

The lesson is “bitter” because the AI research community was reluctant to acknowledge it: Don’t be clever. Just scale it.

We see proof of the Bitter Lesson in Generative AI. Significant progress occurs by simply throwing more computation and data at these models.

The amount of training computation increased by orders of magnitude, and as we’ve all experienced, the usefulness of these models has vastly improved.

Plotting Artificial Analysis’ quality metric for the Llama models clearly illustrates that more compute (and data) yields better models.

Note that the same transformer architecture powers every GPT and Llama model above. So far, the transformer architecture scales and is seemingly good enough.

Hardware’s Bitter Lesson

Do general-purpose logic chips have a similar lesson? If so, it might state:

Architectural gains are incremental. Real system performance gains come from scaling compute, which is made possible by advancing to the next process node.

Looking back on history, we’ve seen this play out with CPUs: for decades, the industry stuck with the von Neumann architecture and relied on transistor shrinking to unlock more computation. It’s not as clear-cut anymore, as system performance gains increasingly come from leveraging parallelism via additional cores.

In our current AI era, the additional transistors gained with each node shrink will be increasingly allocated to specialized compute blocks like NPUs and GPUs within client SoCs. This will lead to a boost in overall computational capacity, even if CPU improvements are marginal. Transistor allocation across heterogeneous SoCs underscores the growing complexity of measuring, comparing, and marketing performance.

Yet, it again emphasizes the importance of racing to the next node to unlock additional (accelerated) computation.

How Fast Can Intel Run?

Another reader question: Can Intel’s foundry move fast enough to catch up while simultaneously generating enough profit to fund the next fab?

Financial prudence suggests that Intel should squeeze as many chips as possible out of a fab before it sunsets, seemingly at odds with sprinting to the next node.

It's helpful to examine Intel's historical cadence and fab strategy to understand how it managed this “run fast but make money” tension.

Tick Tock

For roughly a decade starting in the late aughts (~2007 onward), Intel followed a "tick-tock" cadence: a rapid advance to a new process node (tick) followed by architectural refinement at that node (tock).

For example, Intel raced from 45nm to 32nm, then released another 32nm chip with an architecture optimized to fully utilize the higher transistor count.

Winning the tick secured Intel's lead, while the tock optimized costs and maintained an edge even as competitors closed the gap.

Win, optimize, win, optimize.

The finance-minded reader might ask: can we further amortize those fab costs with a third production run? It’s tempting, particularly if the next shrink is stalling.
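To see why the third run is tempting, here is a rough amortization sketch. The fab cost and chip volume are hypothetical round numbers chosen only to make the arithmetic easy; they are not Intel's figures, and the model ignores everything except spreading a fixed cost over more units.

```python
def fab_cost_per_chip(fab_cost: float, chips_per_run: int, runs: int) -> float:
    """Spread a fixed fab cost evenly across all chips from all production runs."""
    if chips_per_run <= 0 or runs <= 0:
        raise ValueError("chips_per_run and runs must be positive")
    return fab_cost / (chips_per_run * runs)


# Hypothetical inputs, for illustration only.
FAB_COST = 10e9              # assumed fixed cost of the fab, in dollars
CHIPS_PER_RUN = 100_000_000  # assumed chips shipped per product generation

for runs in (2, 3):
    cost = fab_cost_per_chip(FAB_COST, CHIPS_PER_RUN, runs)
    print(f"{runs} runs: ${cost:.2f} of fab cost per chip")
```

Under these assumptions, a third run cuts the fab cost carried by each chip by a third. The spreadsheet looks great; what it leaves out is the cost of arriving late to the next node.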

Intel did just that, traveling in the wrong strategic direction by expanding to a three-step process illustrated in its FY2015 10-K.

Was this strategy intentional, or was Intel simply trying to put a positive spin on their node struggles? Yeah, we’re purposefully hanging on this node, it’s financially savvy…

I'm not sure, but I’d wager on the latter.

Sadly, Intel hasn’t won a node race since.

Tick, Tick, Tick?

Under Pat Gelsinger’s leadership, Intel realized that owning a fab as an IDM is only a competitive advantage if it's the best fab in the world. But they were far behind.

Intel launched a Five Nodes in Four Years (5N4Y) strategy to catch up.

The naming convention of these five nodes—Intel 7, 4, 3, 20A, and 18A—makes it seem like Intel is ticking impossibly fast.

As a reminder, these aren’t each a tick in the traditional sense; some steps are incremental improvements on a tick that allow Intel to derisk new technology along the way. For example, Intel Foundry explains that Intel 3 is a tweak of Intel 4 and not an entirely new step.

Intel 3: Evolution of Intel 4 with 1.08x chip density and 18% performance per watt improvement. Adds denser library, improved drive current and interconnect while benefiting from Intel 4 learnings for faster yield ramp.

These incremental derisking steps are a good idea. Foundry uses these steps to introduce advanced packaging features like Through Silicon Vias (TSV) and hybrid bonding, and Product can create a small portfolio of optimized products at each waypoint.

We can surmise that Intel 4 and Intel 20A, in particular, would each be considered a tick because they each introduce new transistor technology.

Winning Back Share during 5N4Y?

One key point many seem to miss or be surprised by is that Intel will not win back CPU market share during the 5N4Y journey.

Intel already told us they expect to lose the 5N4Y races until possibly 18A.

How could they win back share if they’re losing the process technology race?

Intel will not regain CPU market share and pricing power until 18A at the earliest, and that’s only if they execute flawlessly.

Do Tocks Matter In The Meantime?

Digging into Intel’s 5N4Y roadmap, we see lots of new microarchitecture names across P and E cores:

Architectural innovations matter more now than ever in this post-Moore era, but I would be surprised if Intel takes on significant design innovation risk during 5N4Y to eke out more performance, especially while Foundry lags. (If you want to investigate, Chips and Cheese is an excellent resource for the nitty gritty architectural details.)

I wouldn’t expect these 5N4Y design updates to move the needle for Intel’s market share.

More Pain To Come

OK, so the intermediate ticks and tocks won’t regain competitiveness, but all is well when we get to 18A, right?

18A may not be the silver bullet it’s made out to be.

Intel suggests they’ll be competitive again in the data center on 18A.

Data center competitiveness is a draw for potential Intel Foundry customers, and securing those external partnerships is vital for the Foundry's success. But converting those wins into significant revenue will take time. We're likely looking at 2026 at the earliest, potentially even longer.

There's also uncertainty around the nature of these engagements; will customers utilize Intel Foundry for full chip manufacturing (wafers), or are they primarily interested in advanced packaging while sourcing wafers from TSMC or Samsung?

From Intel’s recent earnings:

Pat Gelsinger: I am pleased to announce that this quarter, we signed another meaningful customer on Intel 18A bringing our total to 6, a leader in the aerospace and defense industry… More broadly, we are seeing growing interest in Intel 18A and we continue to have a strong pipeline of nearly 50 test chips. The near-term interest in Intel foundry continues to be strongest with advanced packaging, which now includes engagements with nearly every foundry customer in the industry, including five design awards.

Intel’s server CPUs will also benefit from 18A. Yet Intel’s data center revenue last quarter contributed much less than client (24% vs 58%), so will 18A’s competitiveness materially impact Intel’s top line? Again, the best thing Intel can do to get the most from 18A is to get it into the customer’s hands sooner than EPYC on TSMC’s competitive node.
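A quick back-of-the-envelope calculation shows why the revenue mix matters here. The 24% and 58% shares come from the figures above; the 10% segment growth rate is a hypothetical input picked purely for illustration, and the model assumes every other segment stays flat.

```python
def top_line_growth(segment_share: float, segment_growth: float) -> float:
    """Total revenue growth contributed by one segment, with all others held flat."""
    return segment_share * segment_growth


DATA_CENTER_SHARE = 0.24  # share of revenue cited above
CLIENT_SHARE = 0.58       # share of revenue cited above
ASSUMED_GROWTH = 0.10     # hypothetical 10% growth within a segment

for name, share in (("data center", DATA_CENTER_SHARE), ("client", CLIENT_SHARE)):
    impact = top_line_growth(share, ASSUMED_GROWTH)
    print(f"10% growth in {name} lifts total revenue by {impact:.1%}")
```

Under this assumption, the same percentage gain is worth more than twice as much to the top line in client as in data center, which is why a data-center-first return to competitiveness may take a while to show up in the headline numbers.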

Intel claims client will be competitive at 14A. But when will 14A launch? This roadmap suggests sometime in 2026.

Pat seems to say the same, from Intel’s Q2 2024 Earnings Call:

Pat Gelsinger: And clearly, as a lot of tiles externally [built by TSMC] in '25, you know, we bring those home in '26. That's when we'll start to really, as Dave said, you'll see the benefits of the model that we've put in place. Tiles coming home, leadership process technology, leadership products starts in '25, deliver big time in '26 and beyond.

Intel is firmly convinced of 18A and 14A’s competitiveness, but there’s no guarantee they’ll win the race to unlock the node first.

If Intel has lost several straight races, how can they be so confident they’ll win this time?

Competition Isn’t Backing Down

The competition will not sit idly by, either. From TSMC’s recent earnings call:

Gokul Hariharan - JPMorgan Chase and Company

… Intel seems to think that they will be getting into technology or process technology leadership in 2025. Just wanted to hear what does TSMC think of Intel's claim? … do you feel you will lose a little bit of market share to Intel when it comes to the N2 or the first generation of nanosheet transistors? Or you think you're very high market share in N3 will continue into N2?

C.C. Wei - TSMC CEO

Let me answer your question with a very simple answer – no. But, well, I will state it a little bit long. Actually, we do not underestimate any of our competitors or take them lightly. Having said that, our internal assessment shows that our N3P -- now, I'll repeat again, N3P technology, demonstrated comparable PPA to 18A, my competitors' technology, but with an earlier time to market, better technology maturity, and much better cost. In fact, let me repeat again, our 2-nanometer technology without backside power is more advanced than both N3P and 18A, and is the most advanced technology when it is introduced in 2025.

TSMC is also in it to win it.

Are CPUs Dead?

I received reader feedback that CPUs no longer matter—the future is accelerated computing, and Intel has been left behind.

Undoubtedly, we’re seeing a significant shift in workloads from CPUs to better-suited platforms like GPUs or AI accelerators. And yes, during this AI bubble, IT budgets are pulling back from CPU servers and shifting toward parallel platforms instead.

However, this doesn’t imply that CPUs are dead. The client segment already uses parallelism, whether across multiple CPU cores or offloading to GPUs and NPUs. CPUs will never die in client.

Data center spending is all-in on AI accelerators right now, but CPUs will always have a place in the enterprise. Arguably, most existing data center workloads will continue to run on CPUs in the near term. For example, every mobile and web app built in the past decade relies on API servers and databases running on CPUs. Mobile banking runs on server CPUs. Office 365 might incorporate increasing amounts of GenAI, but it’s mostly just workloads on server CPUs.

While the continued decline of client CPUs seems plausible, the disappearance of server CPUs, even amidst the AI boom, is much harder to envision.

Foundry Has Never Mattered More

So far, we’ve established that Intel’s primary business is CPUs and that the most critical strategy for CPUs is to win the race to the next node. But then we noted that Intel will lose the intermediate races to 18A, and winning on 18A and 14A isn’t guaranteed. We concluded that Intel will likely continue to lose market share until mid-2025 to 2026 in the best-case scenario.

Yet Intel will continue to share positive progress updates to keep employees, customers, and investors engaged. This will be dizzying. We’ll hear that Intel is making significant 18A progress and feel hopeful, and the next week, we might get more lousy results and another layoff.

To keep steady during these ups and downs, this is how I’ll be processing Intel’s news:

This decision-flow diagram makes it easy: if it’s not about Foundry during 5N4Y, it probably doesn’t matter.

There are hundreds of ways for Intel to lose, but there is only one way to win.

Are CPUs and Foundry Enough For Now?

Another reader question: Doesn’t Gaudi matter? The AI accelerator market is huge right now.

My initial reaction: isn’t that a bit much right now?

Why should we think Intel can simultaneously:

- build a competitive AI accelerator business
- focus on 5N4Y execution to revive their core CPU business
- build a world-class foundry business?

Trying all three at once feels like an excellent way to lose them all.

A different approach is to focus on Foundry and CPUs now, then seriously participate in AI later via Foundry. This approach might have a higher revenue ceiling for Intel in the long run.

Might Nvidia be happy to have Intel as another supplier, especially if Gaudi is not a threat? And if Foundry can become a second supplier for Nvidia, could they eventually earn a shot as a primary supplier?

In the best case, over the long run, Foundry could expand Intel’s reach across all logic chip markets:

As an added benefit, Intel can hedge the slow decline of x86 to Arm and smartphones via Foundry.

Of course, as Ben Thompson continues to point out, the actual obstacle to realizing this future may not be Intel Foundry’s abilities, but rather Intel’s culture.

I have continuously highlighted the fact that succeeding as a foundry business means overhauling Intel’s culture from one that dictates terms to one that is customer-centric; that is exactly why I thought the Tower acquisition was a good idea, and why it was such a problem that China blocked it.

Client Brand Strength Is Keeping Intel Alive

Our last topic.

Given that Intel will be uncompetitive until at least 18A, the question arises: where might Intel be hit the hardest over the coming quarters — client or data center?

Let's approach this from a first-principles perspective.

Business hardware decisions are typically driven by cold, hard numbers: total cost of ownership, performance benchmarks, and memory capacity. This contrasts with consumer purchases, which can be swayed by emotions or brand loyalty. As Intel falls behind in performance, it's reasonable to expect a corresponding decrease in data center spending and business laptop purchases.

Despite the competitive landscape, Intel continues to command a significant share of the consumer PC market, a testament to the enduring brand strength it built during the "Intel Inside" era.

My hypothesis is as follows: Intel will feel more pressure in data center than in client.

Let's turn to recent data to test this. After all, given that Intel's strategic differentiators aren't undergoing a major transformation during the 5N4Y plan, recent trends will likely persist until 18A/14A barring unforeseen external shocks.

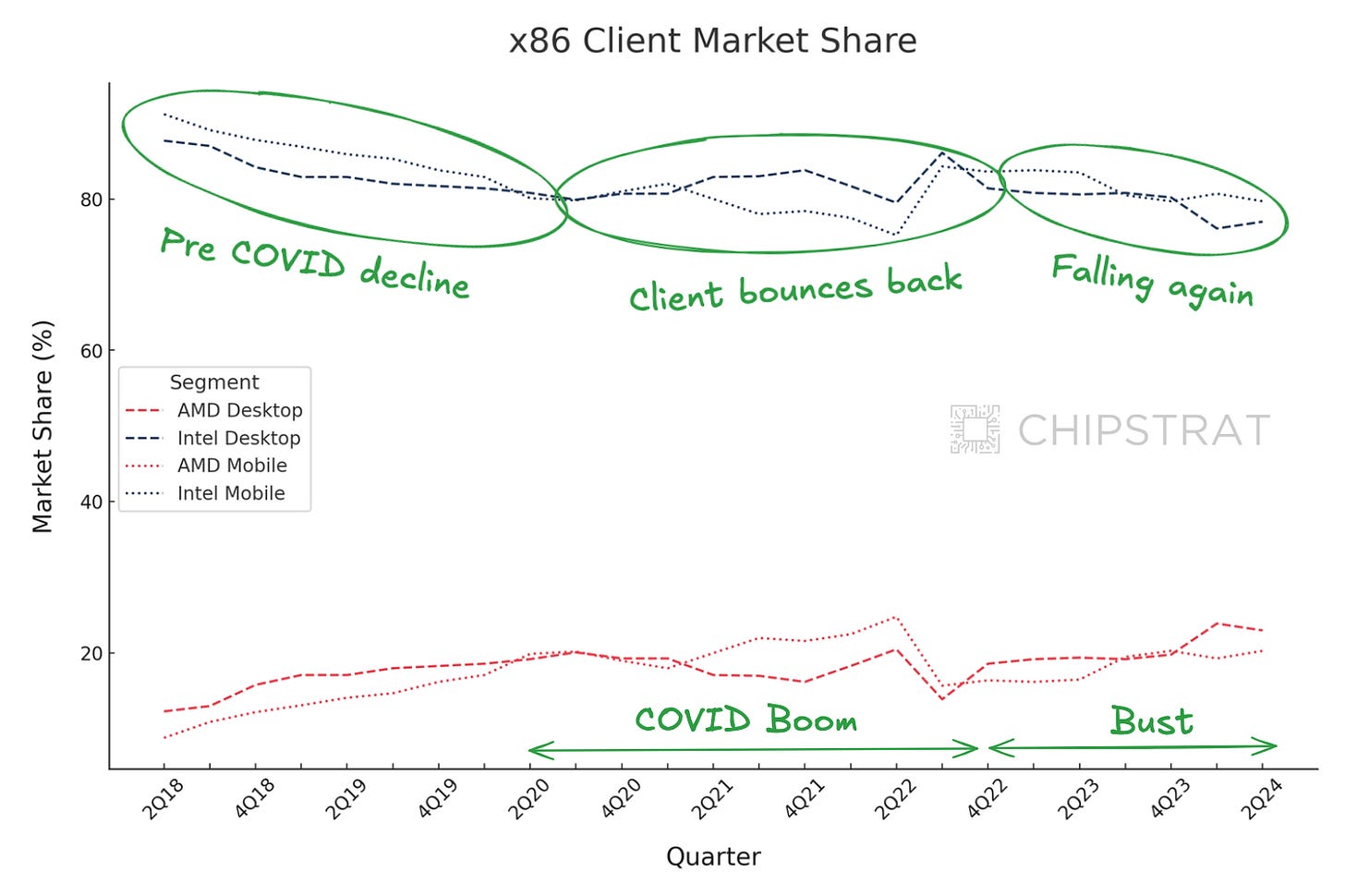

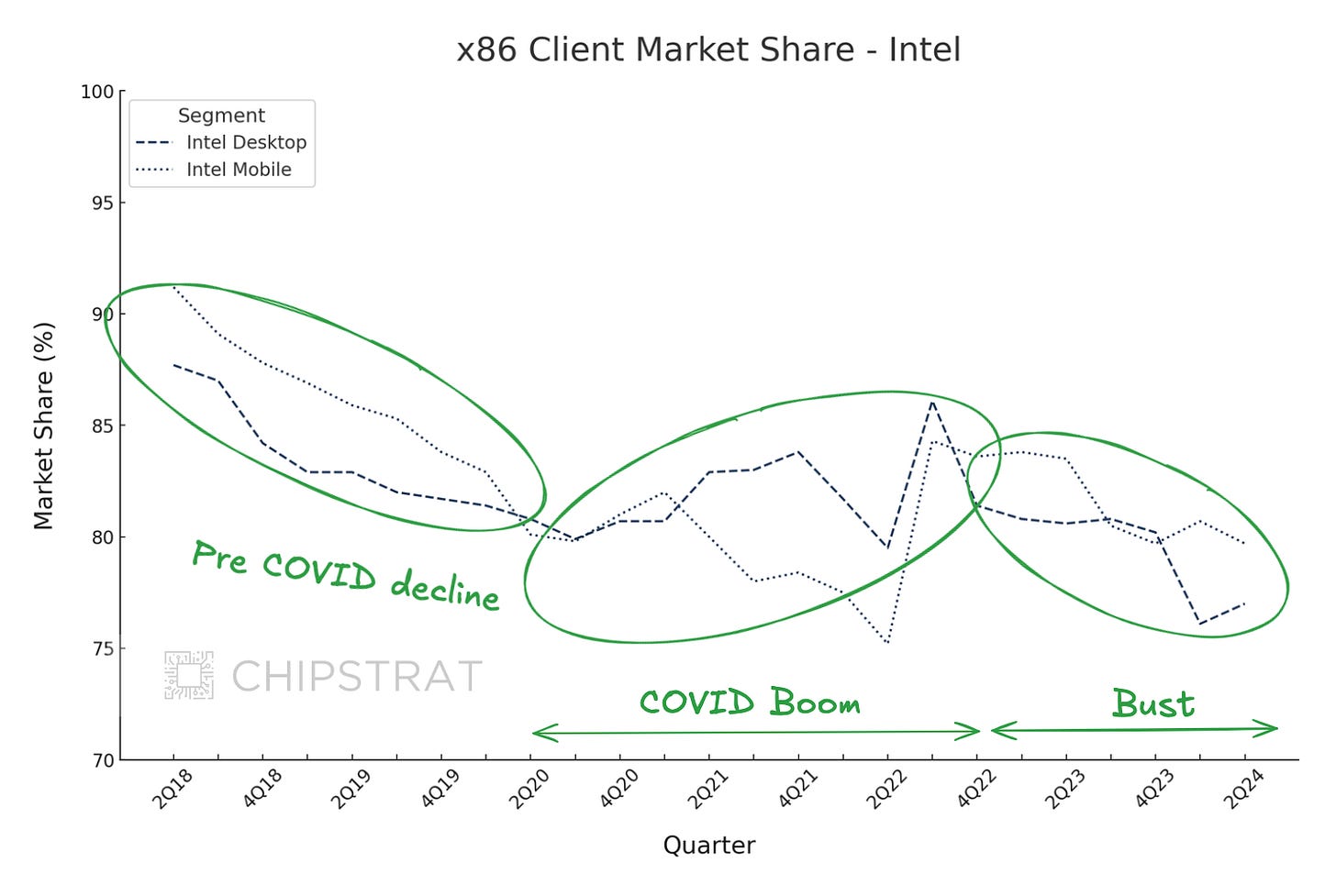

Mercury Research data (via Tom's Hardware) shows Intel's x86 market share is eroding. Servers are hit hardest, but client share is also down.

However, there’s an interesting positive spike for Intel in client, and it looks related to the COVID PC boom and subsequent bust.

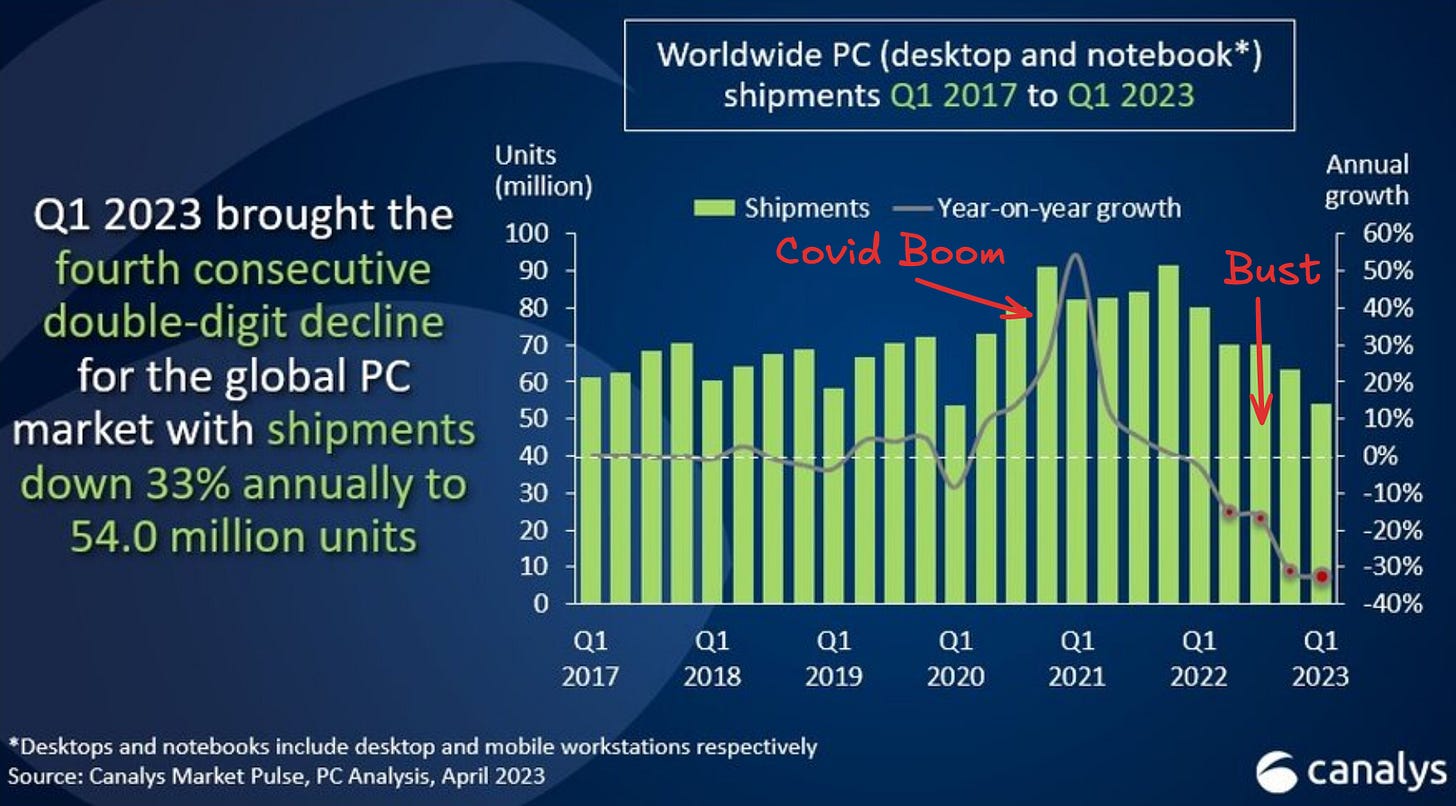

As a reminder, COVID triggered a surge in PC buying as people transitioned to working and learning from home.

If we look at Intel’s laptop and desktop share, we can see a slight downward trend before the COVID-19 PC boom. Interestingly, this trend seems to have reversed during the boom but then resumed its decline.

This is strategically interesting—what caused the uptick, and was it anything Intel can recreate?

If we zoom in on just the Intel data, we see the COVID-19 boom results were choppy. Regardless, desktop and mobile exit the boom with a higher share than when they entered:

In normal times, purchase decisions are highly driven by cost. What’s the best laptop I can get under $700? But recall that many US consumers also received stimulus money during COVID, and some businesses were paying for employees’ laptops. I bet total cost was less of a factor than usual for many purchases. Furthermore, given the indeterminate nature of working from home, I wonder if perceived quality may have mattered more than normal.

Thus, I wonder if the uptick in sales during COVID was caused by Intel’s brand strength in the client premium segment.

If true, that suggests that when willingness to pay and the need for perceived quality increase, customers choose Intel over AMD. Conversely, value seekers choose AMD over Intel when demand is more elastic.

Of course, this is just a hypothesis. Maybe the explanation is different, like OEM inventory or supply chain dynamics. Access to finer-grained PC sales data would help test this hypothesis. Did the percentage of premium laptops increase during this time, and did Intel outperform? (I’d love to know if anyone has relevant data!)

To Be Continued 😅

I went down several rabbit holes responding to reader questions and ran out of time. We haven’t yet addressed Intel’s lousy quarter. There’s been great coverage already, and we’ll add to it next time by looking at how the AI PC mirage may have contributed to Intel’s surprisingly bad results.