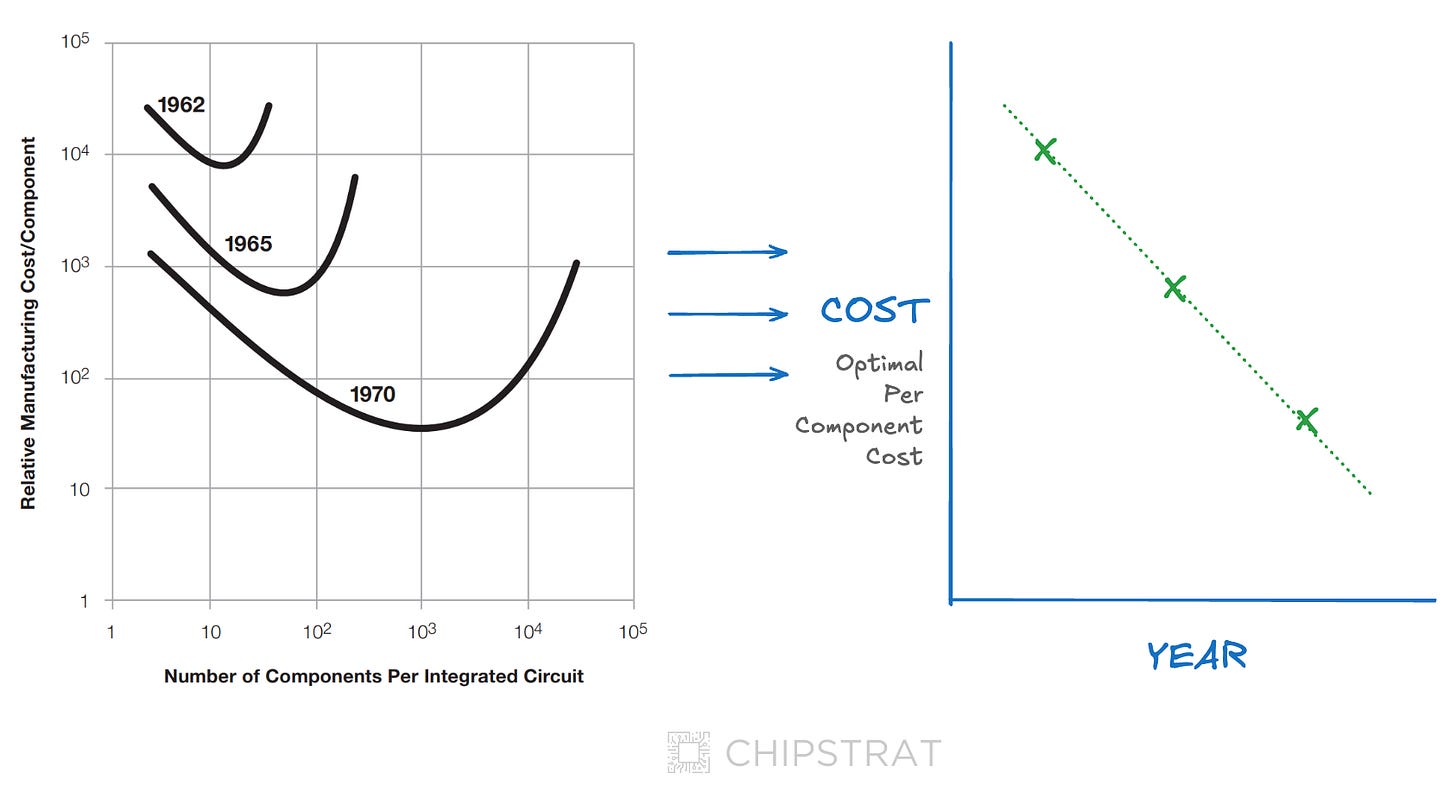

Computing power keeps growing, but its declining cost is often overlooked. Over the last six decades, the semiconductor industry has relentlessly driven down transistor costs:

The decline in per-transistor cost has helped fuel technology innovation and adoption, and with it labor productivity: not many businesses could afford a mainframe, but some could afford a minicomputer and most could afford a PC.

More recently, though, the cost decline has started to taper off.

This might seem alarming, but for 60 years the industry has collectively innovated past these challenges, and I expect it will continue to do the same. (After all, intelligence is more accessible than ever!)

My real concern is that a single company, acting rationally within a capitalist framework, could jeopardize decades of collective progress.

Cost Per Transistor

Moore’s Law states that transistor density doubles at a regular cadence. Moore first observed a doubling every year but later revised it to every two years.

The choice of the word “law” in Moore’s Law is a misnomer; Moore’s observation is best understood as an aspirational goal that the semiconductor industry has tirelessly pursued.

I think of it as a sort of self-fulfilling prophecy. Even after 60 years, transistor density still doubles regularly, albeit more slowly than before. (For the interested reader, Pushkar Ranade’s wonderful post details various technical innovations Intel pioneered that contribute to fulfilling Moore’s Prophecy, especially in the post-Dennard scaling era.)

History overlooks Moore’s focus on the declining cost per transistor. From his famous 1965 article, we can see Moore’s correct prediction of falling costs with increasing density:

Why?

The cost per transistor is equal to the cost of fabricating a wafer divided by the number of transistors that fit on the wafer.

The industry must increase transistor density faster than wafer cost to sustain Moore’s economic observation of a decreasing cost per transistor.
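To make the arithmetic concrete, here is a minimal sketch of that relationship. The function names and the dollar and transistor figures are hypothetical, chosen only for illustration; they are not real foundry data.

```python
def cost_per_transistor(wafer_cost: float, transistors_per_wafer: float) -> float:
    """Wafer fabrication cost divided by the transistors that fit on the wafer."""
    if transistors_per_wafer <= 0:
        raise ValueError("transistors_per_wafer must be positive")
    return wafer_cost / transistors_per_wafer


def node_transition_ratio(wafer_cost_growth: float, density_growth: float) -> float:
    """New cost per transistor divided by old, for one node transition.

    Both arguments are multipliers (2.0 means "doubled").
    A result below 1.0 means transistors got cheaper.
    """
    if density_growth <= 0:
        raise ValueError("density_growth must be positive")
    return wafer_cost_growth / density_growth


# Hypothetical node transition: wafer cost rises 1.5x, density doubles.
old = cost_per_transistor(wafer_cost=10_000.0, transistors_per_wafer=1e12)
new = cost_per_transistor(wafer_cost=15_000.0, transistors_per_wafer=2e12)
print(f"relative cost per transistor: {new / old:.2f}")

# Same comparison expressed directly as growth multipliers.
print(node_transition_ratio(wafer_cost_growth=1.5, density_growth=2.0))
```

Whenever the wafer cost multiplier exceeds the density multiplier, the ratio rises above 1.0 and each transistor gets more expensive.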

The cost to fabricate a wafer is dominated by fixed costs like facilities, equipment, depreciation, and R&D. Fortunately, these costs have historically risen more slowly than transistor density: according to Rock’s Law, the cost of a fab doubles approximately every four years.
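As a rough sketch of how those two doubling rates compound, the snippet below assumes smooth exponential growth, density doubling every two years, and wafer cost tracking the four-year fab-cost doubling of Rock’s Law. That last link is a simplifying assumption, and the function names are hypothetical.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplier after `years` for a quantity that doubles every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


def relative_cost_per_transistor(
    years: float,
    density_doubling_years: float = 2.0,  # assumed Moore's Law cadence
    cost_doubling_years: float = 4.0,     # assumed Rock's Law cadence, applied to wafer cost
) -> float:
    """Cost per transistor relative to year 0 (1.0 means unchanged)."""
    cost = growth_factor(years, cost_doubling_years)
    density = growth_factor(years, density_doubling_years)
    return cost / density


for years in (0, 4, 8, 12):
    print(years, relative_cost_per_transistor(years))
```

Under these assumptions the cost per transistor halves every four years. If the two doubling periods were equal, the ratio would stay flat at 1.0, and if wafer cost doubled faster than density, it would climb.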

Transistor density must outpace wafer costs to undergird Moore’s Precept, as put forth by Ben Thompson:

In practice, though, Moore’s Law has become something more akin to a fundamental precept underlying the tech industry: computing power will both increase and get cheaper over time. This precept — which I will call Moore’s Precept, for clarity — is connected to Moore’s technical prediction: smaller transistors can switch faster, and use less energy in the switching, even as more of them fit on a single wafer; this means that you can either get more chips per wafer or larger chips, either decreasing price or increasing power for the same price. In practice we got both.

However, Ben points out that the costs of wafers significantly increased with the introduction of EUV:

with TSMC’s 5 nm process the price per transistor increased — and it increased a lot (20%). The reason was obvious: 5 nm was the first process that required ASML’s extreme ultraviolet (EUV) lithography, and EUV machines were hugely expensive — around $150 million each.

This wafer cost increase outpaced transistor density improvements, leading to a per-unit transistor cost increase.

By the way, the future of EUV is even more expensive — high-NA EUV machines reportedly cost $380M each.

Fortunately, this rapid cost inflation only occurred when EUV was introduced, and subsequent nodes returned to a typical wafer cost trajectory:

What happened at 5 nm was similar to what happened at 20 nm, the last time the price/transistor increased: that was the node where TSMC started to use double-patterning, which means they had to do every lithography step twice; that both doubled the utilization of lithography equipment per wafer and also decreased yield. For that node, at least, the gains from making smaller transistors were outweighed by the costs. A year later, though, and TSMC launched the 16 nm node that re-united Moore’s Law with Moore’s Precept. That is exactly what seems to have happened with 3 nm — the gains of EUV are now significantly outweighing the costs — and early rumors about 2 nm density and price points suggests the gains should continue for another node.

Note that this historical analysis is in the context of foundry competition, so prices are reflective of costs; yes, whoever wins the race to the next node can take more margin and will win massive volume to boot, but the threat of competition at future process nodes keeps prices honest. In that sense, TSMC, Intel Foundry, and Samsung form something of an oligopoly.

As long as Intel Foundry and Samsung are viable alternatives, the transistor cost curve will be driven by Moore’s Law (transistor density) versus Rock’s Law (wafer costs), and it’s up to the collective industry to ensure transistor density improvements outpace wafer cost inflation.

But, as Jay Goldberg astutely points out in The Real End of Moore’s Law, this all goes out the window if Intel Foundry and Samsung drop out of the foundry business:

Put simply, absent any viable competition TSMC goes from ‘effective’ leading-edge monopolist, to true monopolist. They can raise prices as high as they want.

Absent the threat of competition, TSMC can shift from a cost-plus pricing model to a value-based pricing model. In turn, wafer prices will increase faster than transistor density, causing the cost-per-transistor curve to rise.

Don’t blame TSMC, though: this is capitalism functioning as expected. Any rational, profit-driven company will optimize for its self-interest, even at the expense of broader systemic innovation. It’s not fair to ask TSMC shareholders to leave money on the table for the greater good, is it?

Yet the death of cost-per-transistor scaling would profoundly handicap the tech industry specifically, and global productivity broadly.

As Thompson points out:

What is critical is that the rest of the tech industry didn’t need to understand the technical or economic details of Moore’s Law: for 60 years it has been safe to simply assume that computers would get faster, which meant the optimal approach was always to build for the cutting edge or just beyond, and trust that processor speed would catch up to your use case.

When transistor costs increase for several process nodes in a row, out goes the old:

Developers: We’re running out of compute headroom, but if we can make it another year until the next chip ships we’ll be OK.

And in with the new:

Developers: We’re running out of compute headroom; I guess we’ll need a second processor.

Consumers, too.

From: I should upgrade my laptop; this one is pretty slow.

To: The new MacBook isn’t faster. I’ll pass.

This will hurt the global economy. And what about productivity gains?

Moreover, given the lack of foundry competition, what incentive does TSMC have to even ship new process nodes every two years? Why not let that slide to three or more years?

(And don’t forget, Intel contributes significantly to semiconductor R&D innovation; can imec and TSMC successfully innovate fast enough to keep this every-two-years cadence?)

We must avoid this future; we can’t afford a true foundry monopoly.

As Goldberg aptly sums up:

And this is why Intel Foundry matters. Today, people can credibly argue that there is no commercial need for Intel Foundry in the industry, that customers do not need a second source to TSMC. But look ahead a few years, to a world in which TSMC can raise prices freely. At that time, everyone will be desperately looking for that alternative.

We need foundry competition.

We need Intel Foundry.